Kubernetes - CNI

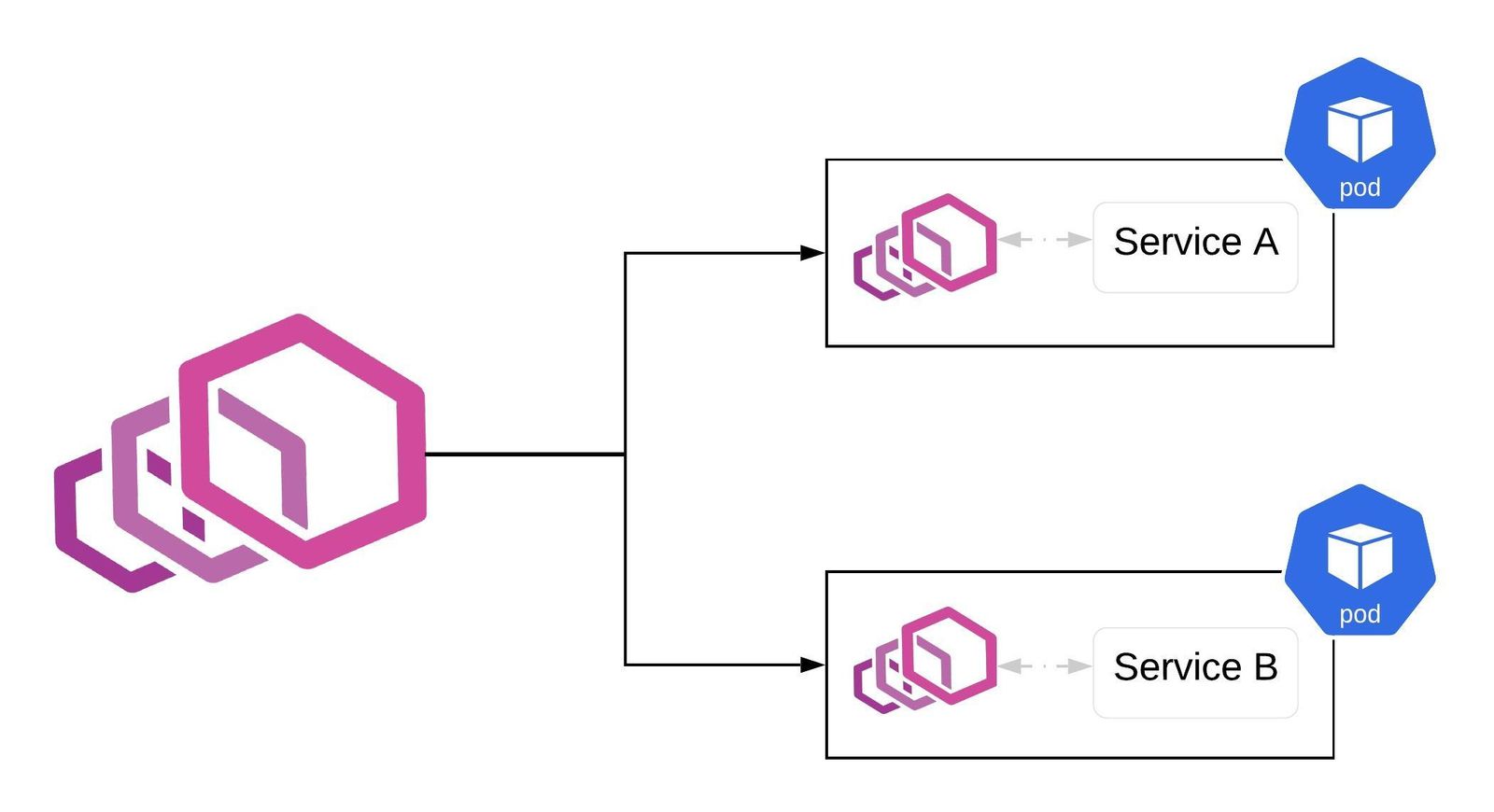

Network categories

| Type | Desc |
|---|---|
| CNI | Pod-to-Pod networking and Node-to-Pod networking |
| kube-proxy | Access through a Service |
| Ingress | Inbound traffic |

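Basic principles

- All Pods can reach each other without NAT
- All Nodes can reach each other without NAT
- The IP address a container sees for itself is the same container IP that external components see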

补充说明

- 在 Kubernetes 集群,

IP 地址是以Pod为单位进行分配的,每个 Pod 拥有一个独立的 IP 地址 - 一个 Pod 内部的

所有容器共享一个网络栈,即宿主上的一个Network Namespace - Pod 内的

所有容器能够通过localhost:port来连接对方 - 在 Kubernetes 中,提供一个

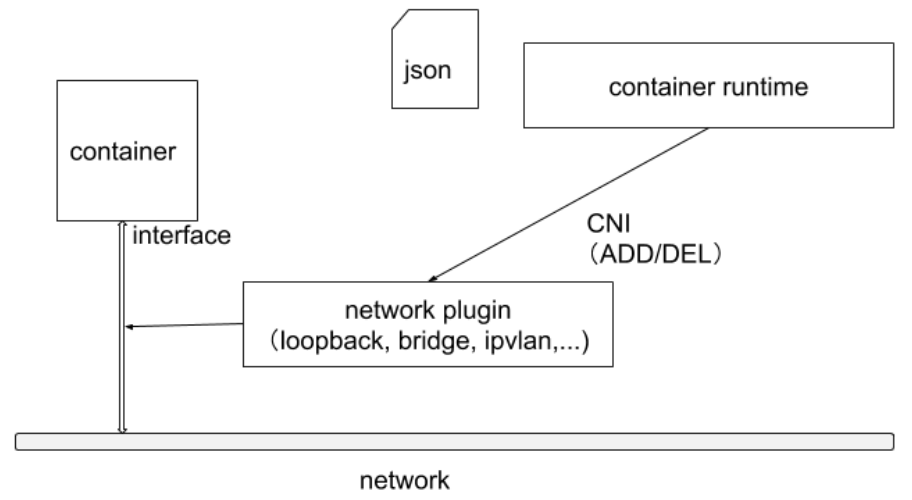

轻量的通用容器网络接口CNIContainer Network Interface,用来设置和删除容器的网络连通性

Container Runtime通过CNI调用网络插件来完成容器的网络设置

Plugin categories

The plugins below are all maintained by the ContainerNetworking team

IPAM -> Main -> Meta

IPAM - IP address allocation - solves IP allocation

| Plugin | Desc |
|---|---|
| dhcp | Runs a daemon on the host to make DHCP requests on behalf of the container |
| host-local | Maintains a local database of allocated IPs |
| static | Allocate a single static IPv4/IPv6 address to container |

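Main - interface-creating - solves network connectivity

- Connectivity between a container and its host
- Connectivity between containers on the same host
- Connectivity between containers on different hosts - the capability CNI adds on top of the original container networking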

| Plugin | Desc |
|---|---|
| bridge | Creates a bridge, adds the host and the container to it |
| ipvlan | Adds an ipvlan interface in the container |
| loopback | Set the state of loopback interface to up |
| macvlan | Creates a new MAC address, forwards all traffic to that to the container |
| ptp | Creates a veth pair |
| vlan | Allocates a vlan device |
| host-device | Move an already-existing device into a container |
| dummy | Creates a new Dummy device in the container |

Meta - other plugins

| Plugin | Desc |
|---|---|
| tuning | Tweaks sysctl parameters of an existing interface |
| portmap | An iptables-based portmapping plugin. Maps ports from the host’s address space to the container |
| bandwidth | Allows bandwidth-limiting through use of traffic control tbf (ingress/egress) |
| sbr | A plugin that configures source based routing for an interface (from which it is chained) |
| firewall | A firewall plugin which uses iptables or firewalld to add rules to allow traffic to/from the container |

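How it works

At startup, the Container Runtime reads the JSON configuration files from the CNI configuration directory (if there are several files, it picks the first one in lexicographic order)

1 CNI + N CSI: only one CNI configuration takes effect, whereas several CSI drivers can coexist

For container network management, the Container Runtime generally needs two parameters: --cni-bin-dir and --cni-conf-dir

Special case: when kubelet's built-in Dockershim is used as the Container Runtime, kubelet itself looks up the CNI plugin and runs it to set up the container's network, so these two parameters should be set on kubelet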

| Key | Value | Parameters |
|---|---|---|
| Configuration files | /etc/cni/net.d | --cni-conf-dir |
| Executables | /opt/cni/bin | --cni-bin-dir |
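
The "first file in lexicographic order" rule can be illustrated with a minimal sketch. The function name `pick_cni_config` and the set of accepted extensions are assumptions made for this example, not the code of any particular runtime, and the demo uses a throwaway directory rather than the real /etc/cni/net.d.

```python
import os
import tempfile

# Extensions treated as CNI network configs (an assumption for this sketch).
CONF_EXTENSIONS = (".conf", ".conflist", ".json")


def pick_cni_config(conf_dir):
    """Return the path of the first config file in lexicographic order, or None."""
    candidates = sorted(
        name for name in os.listdir(conf_dir)
        if name.endswith(CONF_EXTENSIONS)
        and os.path.isfile(os.path.join(conf_dir, name))
    )
    return os.path.join(conf_dir, candidates[0]) if candidates else None


if __name__ == "__main__":
    # Demo against a temporary directory with hypothetical file names.
    with tempfile.TemporaryDirectory() as conf_dir:
        for name in ("99-loopback.conf", "10-calico.conflist", "README.txt"):
            open(os.path.join(conf_dir, name), "w").close()
        print(os.path.basename(pick_cni_config(conf_dir)))  # 10-calico.conflist
```

This is why CNI configuration files usually carry a numeric prefix: the prefix decides which one wins.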

Configuration files

$ ll /etc/cni/net.d

- ipam - calico-ipam
- main - calico
- meta - bandwidth + portmap

{
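
To make the ipam / main / meta split concrete, here is a small sketch that parses a conflist and extracts the chain. The JSON below is a hypothetical, trimmed-down configuration shaped like a typical Calico install; it is not the file from this cluster, and `plugin_chain` is a helper invented for illustration. It assumes the first entry in `plugins` is the main plugin and that IPAM is nested inside it.

```python
import json

# Hypothetical conflist; field values are illustrative only.
CONFLIST = """
{
  "name": "k8s-pod-network",
  "cniVersion": "0.3.1",
  "plugins": [
    {"type": "calico", "ipam": {"type": "calico-ipam"}},
    {"type": "bandwidth", "capabilities": {"bandwidth": true}},
    {"type": "portmap", "snat": true, "capabilities": {"portMappings": true}}
  ]
}
"""


def plugin_chain(conflist_text):
    """Split a conflist into (ipam, main, meta) plugin type names."""
    plugins = json.loads(conflist_text)["plugins"]
    main = plugins[0]                          # assumed: first entry creates the interface
    ipam = main.get("ipam", {}).get("type")    # IPAM is configured inside the main plugin
    meta = [p["type"] for p in plugins[1:]]    # remaining entries are chained plugins
    return ipam, main["type"], meta


if __name__ == "__main__":
    print(plugin_chain(CONFLIST))  # ('calico-ipam', 'calico', ['bandwidth', 'portmap'])
```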

Compiled executables - when Kubernetes invokes a CNI plugin, it is essentially running a local executable

$ ll /opt/cni/bin

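Design considerations

- The Container Runtime must create a new Network Namespace for the container before invoking any CNI plugin
  - The Network Namespace is attached to the SandBox
  - The Container Runtime invokes the CNI plugins only after RunPodSandBox has run
- The Container Runtime must decide which networks the container belongs to and, for each network, which plugins must be executed
- The Container Runtime must load the configuration file and determine which plugins must be executed when setting up the network
- The network configuration is in JSON format
- The Container Runtime must execute the plugins listed in the configuration file in order
- When the container's lifecycle ends, the Container Runtime must execute the CNI plugins in the reverse of the order used to add the container
  - This disconnects the container from the network
- The Container Runtime must not run operations in parallel for the same container, but may run them in parallel for different containers
- For a given container, the Container Runtime must execute ADD and DEL in order
  - ADD corresponds to SetUpPodNetwork in the Container Runtime and AddNetwork in CNI
  - An ADD is always followed by a corresponding DEL
  - A DEL may be followed by additional DELs, so CNI plugins should tolerate multiple DELs
- A container must be uniquely identified by its ContainerID
  - Plugins that need to store state should index it with a primary key composed of the network name, the container ID, and the network interface
- For the same network, the same container, and the same network interface, the Container Runtime must not call ADD twice in a row
  - 1 ADD + N DEL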
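
The ordering rules above can be sketched in a few lines. `chain_order` and `AttachmentTracker` are hypothetical names made up for this illustration; the sketch only models the bookkeeping (forward order for ADD, reverse order for DEL, no repeated ADD, tolerated repeated DEL) and does not run any plugin.

```python
def chain_order(plugins, command):
    """Return the order in which the plugins of one network are invoked."""
    if command == "ADD":
        return list(plugins)              # ADD: same order as the configuration
    if command == "DEL":
        return list(reversed(plugins))    # DEL: reverse of the ADD order
    raise ValueError(f"unsupported command: {command}")


class AttachmentTracker:
    """Tracks (network, container, interface) keys: 1 ADD + N DEL."""

    def __init__(self):
        self._attached = set()

    def add(self, network, container_id, ifname):
        key = (network, container_id, ifname)
        if key in self._attached:
            raise RuntimeError(f"ADD already issued for {key}")
        self._attached.add(key)

    def delete(self, network, container_id, ifname):
        # DEL is idempotent: removing an unknown key is not an error.
        self._attached.discard((network, container_id, ifname))


if __name__ == "__main__":
    chain = ["calico", "bandwidth", "portmap"]
    print(chain_order(chain, "ADD"))  # ['calico', 'bandwidth', 'portmap']
    print(chain_order(chain, "DEL"))  # ['portmap', 'bandwidth', 'calico']

    tracker = AttachmentTracker()
    tracker.add("k8s-pod-network", "abc123", "eth0")
    tracker.delete("k8s-pod-network", "abc123", "eth0")
    tracker.delete("k8s-pod-network", "abc123", "eth0")  # a second DEL is fine
```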

The Container Runtime passes parameters to the calico binary through environment variables
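
A rough sketch of that calling convention follows. The variable names (`CNI_COMMAND`, `CNI_CONTAINERID`, `CNI_NETNS`, `CNI_IFNAME`, `CNI_PATH`) come from the CNI specification; the helper names, the container ID, and the netns path are hypothetical. `invoke_plugin` shows the shape of the call (parameters in the environment, network configuration on stdin) but the demo deliberately does not execute it, since that would need a real plugin binary and namespace.

```python
import json
import os
import subprocess


def build_cni_env(command, container_id, netns, ifname="eth0", cni_path="/opt/cni/bin"):
    """Build the environment variables a runtime hands to a CNI plugin."""
    return {
        "CNI_COMMAND": command,          # ADD, DEL, ...
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns,              # path to the container's network namespace
        "CNI_IFNAME": ifname,            # interface name to create inside the container
        "CNI_PATH": cni_path,            # where plugin executables are searched
    }


def invoke_plugin(plugin, net_conf, env):
    """Run one plugin binary: parameters via environment, config via stdin."""
    binary = os.path.join(env["CNI_PATH"], plugin)
    return subprocess.run(
        [binary],
        input=json.dumps(net_conf).encode(),
        env={**os.environ, **env},
        capture_output=True,
        check=False,
    )


if __name__ == "__main__":
    # Hypothetical container ID and netns path, for illustration only.
    env = build_cni_env("ADD", "abc123", "/var/run/netns/example")
    for key in sorted(env):
        print(f"{key}={env[key]}")
```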

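Host networking

- Kubernetes requires a standard CNI plugin, with a minimum version of 0.2.0
- Besides setting up and cleaning up Pod network interfaces, the network plugin also needs to support iptables
  - If kube-proxy runs in iptables mode, the network plugin must ensure that container traffic can be forwarded by iptables
  - If the network plugin connects containers to a Linux bridge, net.bridge.bridge-nf-call-iptables must be set to 1
    - Packets on the Linux bridge will then traverse the iptables rules
  - If the network plugin does not use a Linux bridge, it should ensure that routes are set up correctly for container traffic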
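
As a quick way to check the bridge setting mentioned above, the sysctl can be read from procfs. This is a minimal sketch: the function name is made up for the example, and it returns `None` when the file cannot be read (for instance when the `br_netfilter` module is not loaded or the host is not Linux). The demo uses a stand-in file so that it runs anywhere.

```python
import os
import tempfile

# procfs location of net.bridge.bridge-nf-call-iptables on Linux.
SYSCTL_PATH = "/proc/sys/net/bridge/bridge-nf-call-iptables"


def bridge_traffic_hits_iptables(path=SYSCTL_PATH):
    """Return True if the sysctl is 1, False if it is not, None if unreadable."""
    try:
        with open(path) as f:
            return f.read().strip() == "1"
    except OSError:
        return None


if __name__ == "__main__":
    # Stand-in file instead of the real sysctl, so the sketch runs on any OS.
    with tempfile.TemporaryDirectory() as d:
        fake = os.path.join(d, "bridge-nf-call-iptables")
        with open(fake, "w") as f:
            f.write("1\n")
        print(bridge_traffic_hits_iptables(fake))  # True
    print(bridge_traffic_hits_iptables(fake))      # None (file no longer exists)
```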

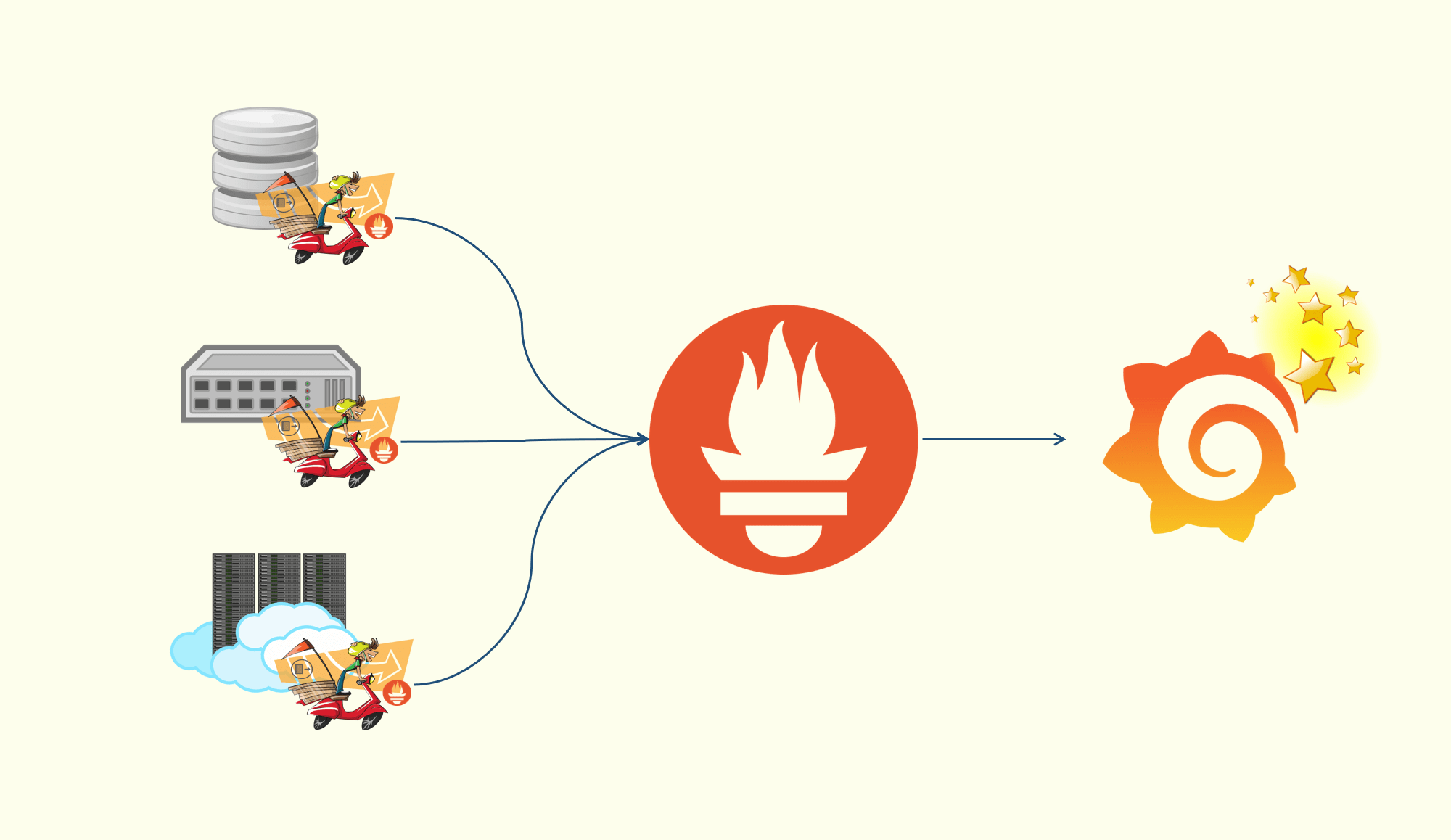

Data path

- The Container Runtime invokes the CNI plugins to configure the network (chain: ipam -> main -> meta)
- The Container Runtime returns the result to kubelet
- kubelet reports the information to the API Server, after which get po -owide shows the Pod IP

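Cross-host connectivity

There are 2 ways to connect Pods across hosts

- Tunnel mode
  - Encapsulation and decapsulation: IPinIP (IP + IP) / VXLAN (IP + UDP)
- Dynamic routing
  - Nodes exchange routing information, so Node A eventually learns Node B's routes, which amounts to having a complete map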
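
The cost of tunnel mode shows up first as header overhead. The sketch below computes the MTU left for Pod traffic under each encapsulation, using standard header sizes (20-byte IPv4 header, 8-byte UDP header, 8-byte VXLAN header, 14-byte inner Ethernet header). The 1500-byte node MTU is an assumption for the example, and `pod_mtu` is an illustrative helper.

```python
OUTER_IPV4 = 20      # outer IPv4 header without options
UDP = 8              # UDP header
VXLAN_HEADER = 8     # VXLAN header
INNER_ETHERNET = 14  # inner Ethernet frame header carried inside VXLAN

OVERHEAD = {
    "ipip": OUTER_IPV4,                                         # IP + IP
    "vxlan": OUTER_IPV4 + UDP + VXLAN_HEADER + INNER_ETHERNET,  # IP + UDP + VXLAN
    "bgp": 0,                                                   # plain routing, no encapsulation
}


def pod_mtu(node_mtu, mode):
    """MTU available to Pod traffic after subtracting encapsulation overhead."""
    return node_mtu - OVERHEAD[mode]


if __name__ == "__main__":
    for mode in ("bgp", "ipip", "vxlan"):
        print(mode, pod_mtu(1500, mode))
    # bgp 1500
    # ipip 1480
    # vxlan 1450
```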

Plugin

Flannel / Weave / Calico / Cilium, of which Calico and Cilium are complete solutions

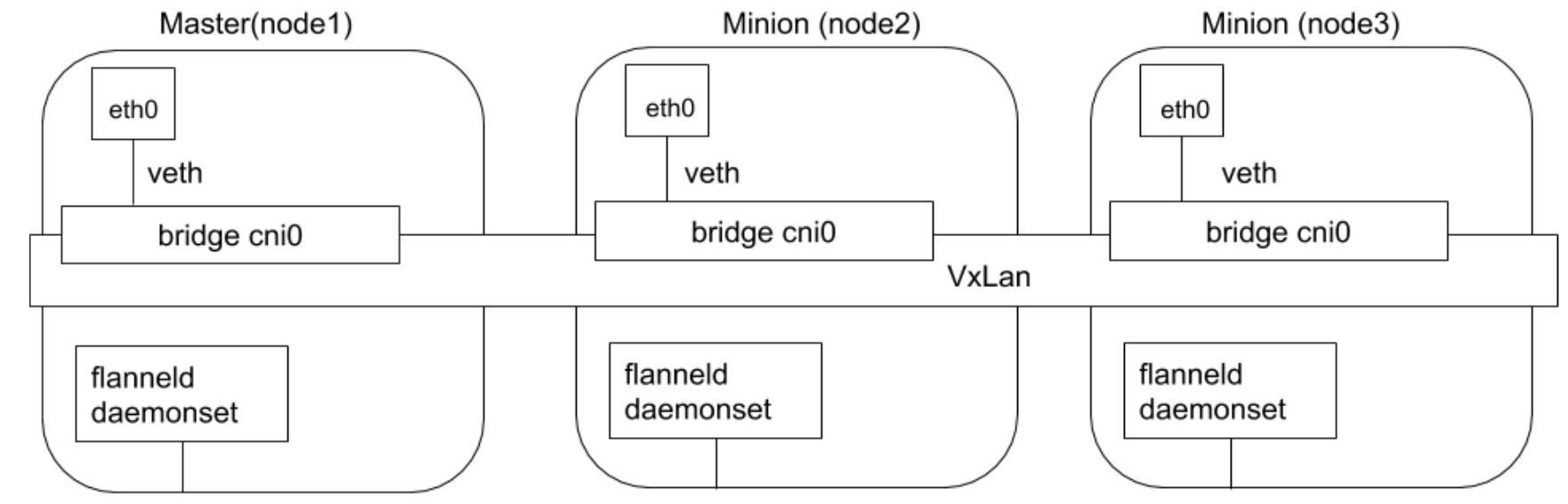

Flannel

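Built on tunneling; encapsulation and decapsulation cost roughly 10% in performance

Flannel itself does not have a complete ecosystem (for example, it lacks NetworkPolicy support)

- Flannel was developed by CoreOS and is an early, entry-level CNI plugin that is simple and easy to use
- Flannel stores its state in the Kubernetes cluster's existing etcd cluster
  - No dedicated data store is needed; it is enough to run the flanneld daemon (DaemonSet) on each Node
- Each Node is assigned a subnet, from which the Pods on that Node receive their IP addresses
- Pods on the same Node can communicate through a bridge
  - Pods on different Nodes have their traffic encapsulated in UDP packets by flanneld and routed to the destination - VXLAN
- The encapsulation method is VXLAN
  - Pro - good performance
  - Con - traffic is hard to trace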
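
The per-Node subnet scheme can be sketched with the standard `ipaddress` module. The cluster CIDR 10.244.0.0/16, the /24 per-Node prefix, and the node names are assumptions chosen for illustration; the helper names are hypothetical and this is not flanneld's allocation code.

```python
import ipaddress


def assign_node_subnets(cluster_cidr, node_names, node_prefix=24):
    """Give each Node the next free subnet carved out of the cluster CIDR."""
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_prefix)
    return dict(zip(node_names, subnets))


def node_for_pod(pod_ip, assignments):
    """Find which Node owns a Pod IP, i.e. where cross-Node traffic must be sent."""
    ip = ipaddress.ip_address(pod_ip)
    for node, subnet in assignments.items():
        if ip in subnet:
            return node
    return None


if __name__ == "__main__":
    assignments = assign_node_subnets("10.244.0.0/16", ["node-a", "node-b"])
    for node, subnet in assignments.items():
        print(node, subnet)
    # node-a 10.244.0.0/24
    # node-b 10.244.1.0/24
    print(node_for_pod("10.244.1.7", assignments))  # node-b
```

Because every Pod IP falls inside exactly one Node's subnet, the destination Node for an encapsulated packet can be derived from the destination Pod IP alone.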

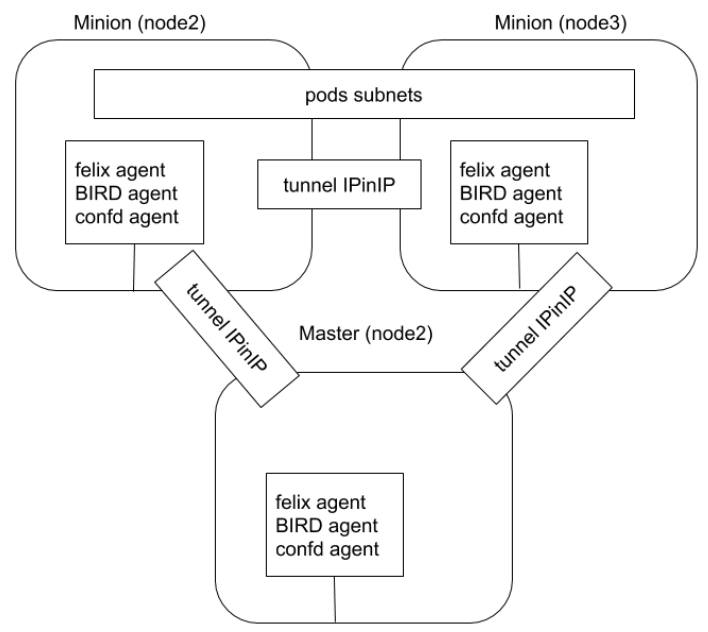

Calico

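Overview

Better performance + richer features (network policy)

- For traffic within the same subnet, Calico works at Layer 3 and uses the BGP routing protocol to route packets between Nodes
  - BGP: packets moving between Nodes do not need to be wrapped in an extra encapsulation layer
- For traffic across subnets, Calico uses IPinIP through the virtual network device tunl0
  - One IP packet encapsulates another IP packet
  - In the outer IP header, the source address is the IP of the tunnel's ingress device and the destination address is the IP of the tunnel's egress device
- Network policy
  - One of Calico's most popular features
  - Uses ACLs and kube-proxy to create iptables filtering rules, thereby isolating container networks
- Calico can integrate with Istio
  - Policies for workloads in the cluster are interpreted and enforced at both the Service Mesh layer and the network infrastructure layer
  - In other words, powerful rules can be configured to describe how Pods should send and receive traffic, improving security and tightening control over the network environment
- Calico has a fully distributed, scale-out architecture, so deployments can grow quickly and smoothly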
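
The same-subnet versus cross-subnet distinction above can be expressed as a small decision function. This is a sketch under assumptions: the node addresses and the /24 node subnet are hypothetical, and `choose_transport` is an illustrative helper rather than Calico code (in Calico the behaviour is governed by the IPPool's `ipipMode`, for example `CrossSubnet`).

```python
import ipaddress


def choose_transport(src_node_ip, dst_node_ip, node_prefix=24):
    """Pick plain BGP routing inside a subnet, IPinIP when crossing subnets."""
    src_net = ipaddress.ip_interface(f"{src_node_ip}/{node_prefix}").network
    if ipaddress.ip_address(dst_node_ip) in src_net:
        return "bgp-direct"   # same L2 segment: route without encapsulation
    return "ipip"             # different subnet: wrap the packet via tunl0


if __name__ == "__main__":
    print(choose_transport("10.0.1.5", "10.0.1.9"))  # bgp-direct
    print(choose_transport("10.0.1.5", "10.0.2.9"))  # ipip
```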

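The community offers a general-purpose solution: the Pod network is not tightly coupled to the underlying infrastructure network, so by default the underlying network cannot route traffic for the Pod network

- felix agent - configures networking
- BIRD agent - BGP daemon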

Initialization

The CNI binaries and configuration files are copied from the container onto the host by an initContainer

$ k get ds -A

DaemonSet - k get ds -n calico-system calico-node -oyaml

CALICO_NETWORKING_BACKEND - currently running in bird mode

The BIRD project aims to develop a fully functional dynamic IP routing daemon primarily targeted on (but not limited to) Linux, FreeBSD and other UNIX-like systems and distributed under the GNU General Public License.

BIRD is an open-source BGP daemon; different IDCs can exchange routing information over BGP

apiVersion: apps/v1

initContainers - copy Calico's file (10-calico.conflist) to the CNI directory on the host (/etc/cni/net.d)

The Container Runtime can then read the files belonging to the CNI plugin

In other words, the CRI consumes the CNI executables and configuration files, so one of the CNI plugin's initContainers first copies those files onto the host
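
What the initContainer does boils down to a file copy into two host directories. The sketch below is a deliberate simplification with a hypothetical helper name, and it uses temporary directories in place of the host-mounted /opt/cni/bin and /etc/cni/net.d; the real installer performs more steps than this.

```python
import os
import shutil
import tempfile


def install_cni(src_dir, host_bin_dir, host_conf_dir):
    """Copy *.conflist files to the config dir and everything else to the bin dir."""
    os.makedirs(host_bin_dir, exist_ok=True)
    os.makedirs(host_conf_dir, exist_ok=True)
    installed = []
    for name in sorted(os.listdir(src_dir)):
        dst_dir = host_conf_dir if name.endswith(".conflist") else host_bin_dir
        dst = os.path.join(dst_dir, name)
        shutil.copy2(os.path.join(src_dir, name), dst)  # keeps mode bits, e.g. +x
        installed.append(dst)
    return installed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        src = os.path.join(root, "image")
        os.makedirs(src)
        for name in ("calico", "calico-ipam", "10-calico.conflist"):
            open(os.path.join(src, name), "w").close()
        install_cni(src, os.path.join(root, "bin"), os.path.join(root, "net.d"))
        print(sorted(os.listdir(os.path.join(root, "bin"))))    # ['calico', 'calico-ipam']
        print(sorted(os.listdir(os.path.join(root, "net.d"))))  # ['10-calico.conflist']
```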

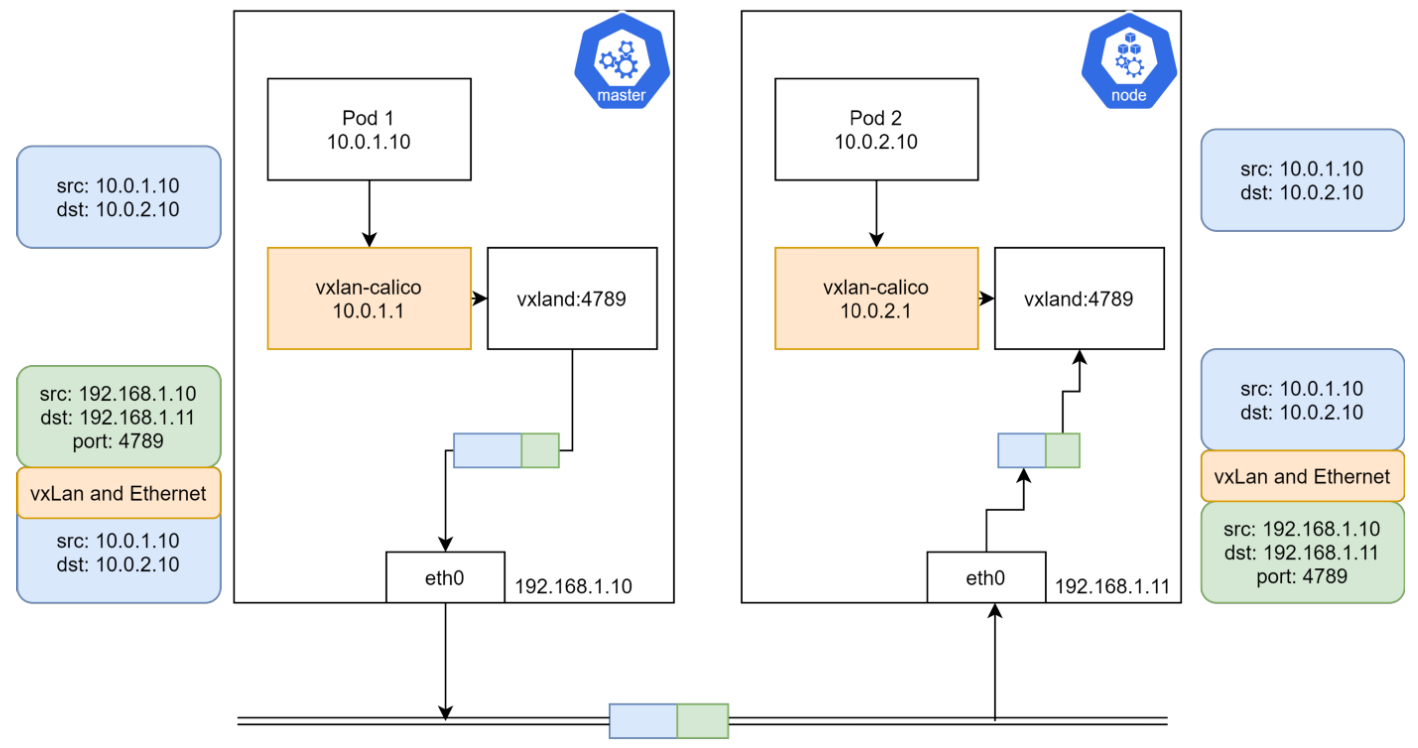

VXLAN

Encapsulation and decapsulation

Similar to Flannel's flannelId

$ ip a

CRD

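$ k get crd

IPPool

Defines the predefined IP range of a cluster

$ k get ippools.projectcalico.org

ipipMode: Never - IPinIP is not enabled

blockSize: 26 - how large an IP block each Node is allocated. With a /16 pool (the 16 in the exponent), there are 2^(26-16) = 1024 blocks, enough for about 1024 Nodes at one block each, and each Node can allocate 2^(32-26) = 64 IPs

apiVersion: projectcalico.org/v3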
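
The blockSize arithmetic can be checked with the `ipaddress` module. The pool CIDR 192.168.0.0/16 is an assumption (the /16 is implied by the 26-16 exponent, and the range matches the Pod IP 192.168.185.16 that appears later in this walkthrough); the helper names are illustrative.

```python
import ipaddress


def block_stats(pool_cidr, block_size):
    """Return (number of blocks in the pool, number of IPs per block)."""
    pool = ipaddress.ip_network(pool_cidr)
    blocks = 2 ** (block_size - pool.prefixlen)
    ips_per_block = 2 ** (32 - block_size)
    return blocks, ips_per_block


def block_for(ip, block_size):
    """Return the block (as a network) that contains the given Pod IP."""
    return ipaddress.ip_interface(f"{ip}/{block_size}").network


if __name__ == "__main__":
    print(block_stats("192.168.0.0/16", 26))  # (1024, 64)
    print(block_for("192.168.185.16", 26))    # 192.168.185.0/26
```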

IPAMBlock

Defines the IP block pre-allocated to each Node

$ k get ipamblocks.crd.projectcalico.org

apiVersion: crd.projectcalico.org/v1

IPAMHandle

Records the details of each IP allocation

$ k run --image=nginx nginx

apiVersion: crd.projectcalico.org/v1

New record

apiVersion: v1

Network connectivity

apiVersion: apps/v1

$ k apply -f centos.yaml

Run inside the container: packets are sent to the MAC address EE:EE:EE:EE:EE:EE

$ k exec -it centos-67dd9d8678-bxxdv -- bash

On the host, the MAC address of the corresponding veth is also ee:ee:ee:ee:ee:ee

On the host, 192.168.185.16 maps to the local veth calic440f455693

$ ip a

Enter another Pod to confirm

$ sudo crictl ps

sudo crictl inspect 7d6ad449ae76b

{

$ sudo nsenter -t 106728 -n ip a

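Cross-host routing

IDC -> Node

- Each Node runs a bird daemon
- The bird daemons on different Nodes keep a long-lived connection to each other and exchange routing information (each Node is modeled as a router)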
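
The effect of that exchange can be shown with a toy model: every Node advertises its own Pod block, and after a full exchange each Node holds a route to every other Node's block with that Node as next hop. This is only a simulation of the outcome, not BGP itself; the node IPs and blocks are hypothetical and `exchange_routes` is an illustrative helper.

```python
def exchange_routes(local_blocks):
    """local_blocks maps node IP -> Pod CIDR owned by that node.

    Returns one routing table per node: Pod CIDR -> next hop
    ("local" for the node's own block, otherwise the peer's node IP).
    """
    tables = {}
    for node_ip in local_blocks:
        tables[node_ip] = {
            cidr: ("local" if peer_ip == node_ip else peer_ip)
            for peer_ip, cidr in local_blocks.items()
        }
    return tables


if __name__ == "__main__":
    # Hypothetical nodes and blocks.
    blocks = {
        "10.0.0.1": "192.168.185.0/26",
        "10.0.0.2": "192.168.12.64/26",
    }
    for node, table in exchange_routes(blocks).items():
        print(node, table)
    # 10.0.0.1 {'192.168.185.0/26': 'local', '192.168.12.64/26': '10.0.0.2'}
    # 10.0.0.2 {'192.168.185.0/26': '10.0.0.1', '192.168.12.64/26': 'local'}
```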

Comparison

Production: Calico / Cilium

| Plugin | Network policy | IPv6 | Network layer | Deployment | CLI |
|---|---|---|---|---|---|
| Flannel | N | N | L2 - VXLAN | DaemonSet | |
| Weave | Y | Y | L2 - VXLAN | DaemonSet | |
| Calico | Y | Y | L3 - IPinIP / VXLAN / BGP | DaemonSet | calicoctl |
| Cilium | Y | Y | L3/L4 + L7 (filtering) | DaemonSet | cilium |