Runtime Storage

Runtime storage: read-only image layers + a writable container layer (write performance is poor)

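Once a container is running, the performance of the filesystem provided by the runtime directly affects the container's performance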

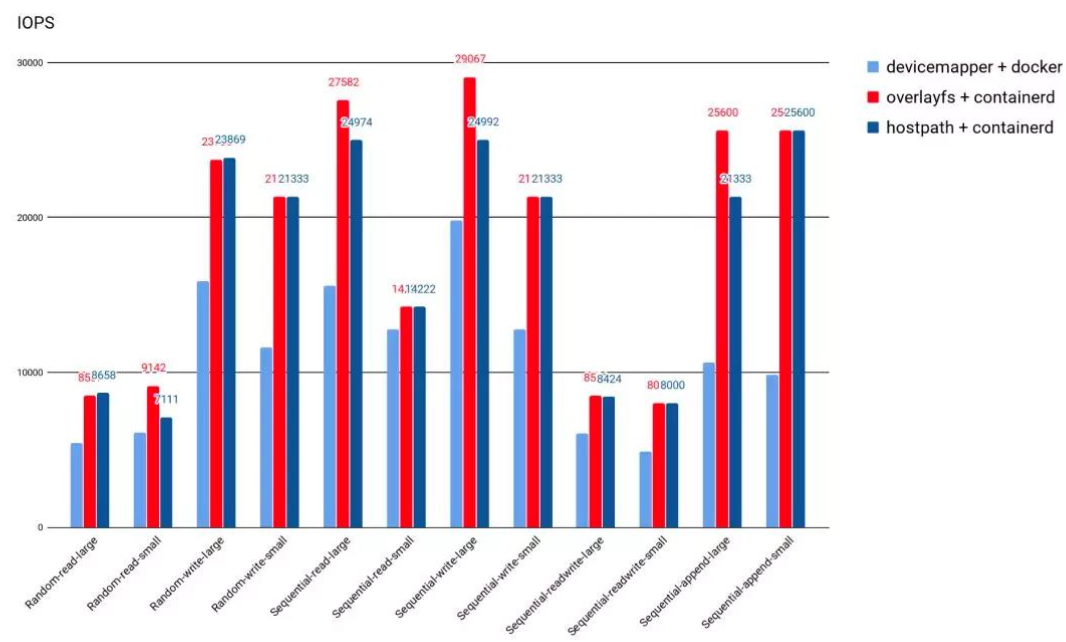

Early Docker used DeviceMapper as the container runtime storage driver, because OverlayFS had not yet been merged into the Linux kernel

Today both Docker (the overlay2 driver) and containerd (the overlayfs snapshotter) use OverlayFS as the default runtime storage driver

OverlayFS performs well: it is nearly on par with operating on host files directly, and about 20% better than DeviceMapper

Storage Plugin Types

Kubernetes supports and extends different storage backends through plugins

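| Plugin | Description |
| --- | --- |
| in-tree | The code is coupled into Kubernetes itself; the community no longer accepts new in-tree storage plugins |
| out-of-tree: FlexVolume (native call) | Kubernetes interacts with the storage plugin by invoking a local executable on the Node; installing the plugin driver requires root privileges; very similar to CNI |
| out-of-tree: CSI (RPC) | Kubernetes interacts with the storage driver over RPC (gRPC) |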
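To make the difference between the two out-of-tree styles concrete, here is a sketch of how each appears as a PersistentVolume source. The driver names (`example.com/foo`, `csi.example.com`), the volume handle, and the PV names are hypothetical placeholders, not real drivers. With `flexVolume`, kubelet executes a driver binary on the Node; with `csi`, kubelet calls the registered driver's gRPC endpoint.

```yaml
# FlexVolume: kubelet execs the driver binary, by default found under
# /usr/libexec/kubernetes/kubelet-plugins/volume/exec/<vendor>~<driver>/<driver>
apiVersion: v1
kind: PersistentVolume
metadata:
  name: flex-pv-example            # hypothetical name
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  flexVolume:
    driver: example.com/foo        # hypothetical <vendor>/<driver>
    fsType: ext4
---
# CSI: kubelet talks to the registered driver over gRPC on a Unix domain socket
apiVersion: v1
kind: PersistentVolume
metadata:
  name: csi-pv-example             # hypothetical name
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  csi:
    driver: csi.example.com        # hypothetical CSI driver name
    volumeHandle: vol-0001         # hypothetical volume ID understood by the driver
    fsType: ext4
```

FlexVolume has since been deprecated in favor of CSI, which is why new drivers are normally written against the CSI interface.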

Plugins

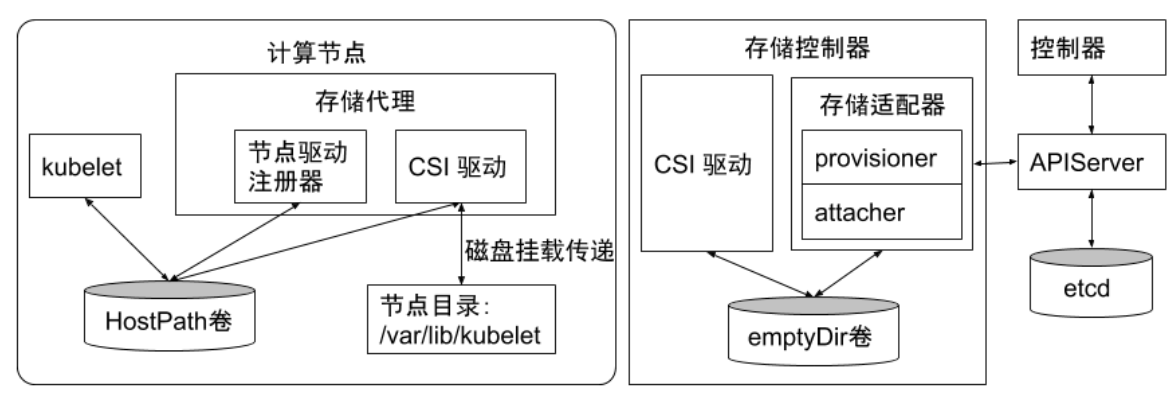

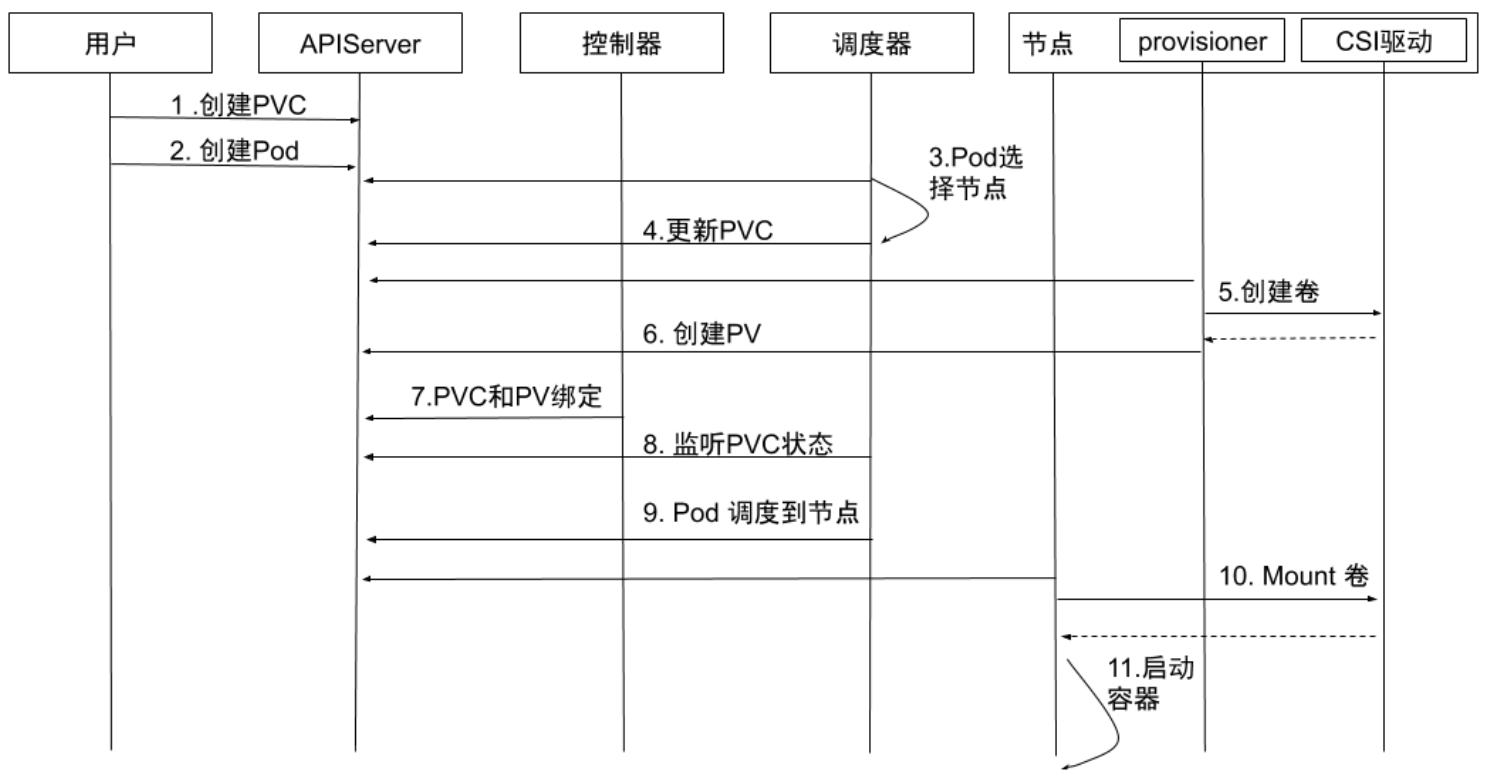

Creating a volume: kube-controller-manager → provisioner → CSI

Using a volume: Pod on Node → kubelet → CSI → attach + mount

kube-controller-manager

The kube-controller-manager module is responsible for being aware of the CSI drivers present in the cluster

Kubernetes control-plane components do not interact with CSI drivers directly, whether through a Unix domain socket or any other channel

Kubernetes control-plane components interact only with the Kubernetes API

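If a CSI driver needs operations that depend on the Kubernetes API (such as creating, attaching, or snapshotting volumes), the driver side must watch the Kubernetes API itself and trigger the corresponding CSI operations (in practice this is done by sidecars such as external-provisioner and external-attacher)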
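A driver can also tell the control plane how to treat it through a CSIDriver object (one of the `storage.k8s.io` resources). A sketch, with `csi.example.com` as a hypothetical driver name: setting `attachRequired: true` makes the attach/detach controller in kube-controller-manager create VolumeAttachment objects, which the driver's external-attacher sidecar watches and turns into `ControllerPublishVolume` calls. This is the "watch the API, then trigger CSI" pattern described above.

```yaml
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: csi.example.com        # hypothetical; must match the name the driver reports via GetPluginInfo
spec:
  # true: the attach/detach controller creates VolumeAttachment objects,
  # and the driver's external-attacher sidecar acts on them
  attachRequired: true
  # false: kubelet does not pass Pod information to the driver on mount
  podInfoOnMount: false
  volumeLifecycleModes:
    - Persistent
```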

kubelet

The kubelet module is responsible for interacting with CSI drivers

kubelet issues CSI calls (such as NodeStageVolume and NodePublishVolume) to the CSI driver over a Unix domain socket in order to mount and unmount volumes

kubelet discovers CSI drivers, and the Unix domain socket used to talk to each of them, through its plugin registration mechanism

Every CSI driver deployed in a Kubernetes cluster must register itself through the kubelet plugin registration mechanism

Driver Components

external-attacher / external-provisioner / external-resizer / external-snapshotter / node-driver-registrar / CSI driver

CSI plugin = storage controller + storage agent (DaemonSet)
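The node-side agent is typically a DaemonSet that runs the CSI driver next to the node-driver-registrar sidecar, which performs the kubelet plugin registration described above; the controller side (external-provisioner, external-attacher, and so on) usually runs as a separate Deployment or StatefulSet. Below is a trimmed sketch assuming a hypothetical driver `csi.example.com` with a hypothetical image; the registrar image tag and the driver's `--endpoint` flag are assumptions, so a real driver's own manifests should be the reference.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: csi-example-node               # hypothetical name
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: csi-example-node
  template:
    metadata:
      labels:
        app: csi-example-node
    spec:
      containers:
        # Sidecar: registers the driver with kubelet via the plugins_registry directory
        - name: node-driver-registrar
          image: registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.9.0   # tag is an assumption
          args:
            - --csi-address=/csi/csi.sock
            - --kubelet-registration-path=/var/lib/kubelet/plugins/csi.example.com/csi.sock
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        # The driver itself: serves the CSI Node service on the shared socket
        - name: csi-driver
          image: example.com/csi-example-driver:latest   # hypothetical image
          args:
            - --endpoint=unix:///csi/csi.sock          # hypothetical flag; varies by driver
          securityContext:
            privileged: true                           # needed to perform mounts on the Node
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: pods-mount-dir
              mountPath: /var/lib/kubelet/pods
              mountPropagation: Bidirectional          # make the driver's mounts visible to kubelet
      volumes:
        - name: plugin-dir
          hostPath:
            path: /var/lib/kubelet/plugins/csi.example.com
            type: DirectoryOrCreate
        - name: registration-dir
          hostPath:
            path: /var/lib/kubelet/plugins_registry
            type: Directory
        - name: pods-mount-dir
          hostPath:
            path: /var/lib/kubelet/pods
            type: Directory
```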

Ephemeral Storage

The most common ephemeral storage is emptyDir

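Container runtime storage is based on OverlayFS, whose write performance is poor, so data that is written heavily should go to externally mounted storage; the most common choice is an emptyDir on the host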

The volume starts out empty

Its lifecycle is tightly bound to the Pod: when the Pod is removed from the Node, the data in the emptyDir is deleted permanently

In kubelet, the Pod's data directories (including its volumes directory) are created by makePodDataDirs, called from syncPod

When a container in the Pod restarts in place, the emptyDir data is not lost

It can be backed by whatever medium backs the Node (local disk or network storage), or by memory

emptyDir.medium: Memory: Kubernetes mounts a tmpfs for the container, so the data is stored in memory

If the Node reboots, the in-memory data is cleared

If the data is stored on disk instead, it survives a Node reboot

Memory used by the tmpfs also counts toward the container's total memory usage and is subject to cgroup limits
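A minimal sketch of a memory-backed emptyDir. Because the tmpfs usage is charged to the container's memory, it makes sense to cap the volume with `sizeLimit` and give the container a memory limit that leaves room for it. The Pod name and the 64Mi/256Mi values are arbitrary illustrative choices.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: tmpfs-cache-demo           # hypothetical name
spec:
  containers:
    - name: app
      image: nginx
      resources:
        limits:
          memory: 256Mi            # tmpfs usage counts toward this limit
      volumeMounts:
        - mountPath: /cache
          name: cache-volume
  volumes:
    - name: cache-volume
      emptyDir:
        medium: Memory             # kubelet mounts a tmpfs instead of using the Node's disk
        sizeLimit: 64Mi            # cap enforced by kubelet for this volume
```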

Design intent: give applications scratch/cache space, or hold intermediate data (e.g. checkpoints) for fast recovery

The emptyDir space lives on the system root disk and is shared with all containers on the Node; when disk usage is high, Pod eviction may be triggered

emptydir.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - mountPath: /cache
              name: cache-volume
      volumes:
        - name: cache-volume
          emptyDir: {}
```

```bash
$ k apply -f emptydir.yaml
deployment.apps/nginx-deployment created

$ k get po
NAME                               READY   STATUS    RESTARTS   AGE
centos-67dd9d8678-bxxdv            1/1     Running   0          3h27m
nginx                              1/1     Running   0          2d18h
nginx-deployment-9b44bf4b5-7h2t4   1/1     Running   0          12s

$ sudo crictl ps
CONTAINER       IMAGE           CREATED              STATE     NAME    ATTEMPT   POD ID          POD
fa15a037579fc   ff78c7a65ec2b   About a minute ago   Running   nginx   0         af4992aeefc57   nginx-deployment-9b44bf4b5-7h2t4
...
```

Inspect the container: sudo crictl inspect fa15a037579fc

The volume is a bind mount from the Node's ext4 filesystem, not the container runtime storage (OverlayFS)

```json
{
  "status": {
    "mounts": [
      {
        "containerPath": "/cache",
        "hostPath": "/var/lib/kubelet/pods/8af6e32d-537e-4760-b654-bf1dd416cf7b/volumes/kubernetes.io~empty-dir/cache-volume",
        "propagation": "PROPAGATION_PRIVATE",
        "readonly": false,
        "selinuxRelabel": false
      }
    ]
  },
  "info": {
    "config": {
      "mounts": [
        {
          "container_path": "/cache",
          "host_path": "/var/lib/kubelet/pods/8af6e32d-537e-4760-b654-bf1dd416cf7b/volumes/kubernetes.io~empty-dir/cache-volume"
        }
      ]
    },
    "mounts": [
      {
        "destination": "/cache",
        "type": "bind",
        "source": "/var/lib/kubelet/pods/8af6e32d-537e-4760-b654-bf1dd416cf7b/volumes/kubernetes.io~empty-dir/cache-volume",
        "options": [
          "rbind",
          "rprivate",
          "rw"
        ]
      }
    ]
  }
}
```

```bash
$ sudo ls -ld /var/lib/kubelet/pods/8af6e32d-537e-4760-b654-bf1dd416cf7b/volumes/kubernetes.io~empty-dir/cache-volume
drwxrwxrwx 2 root root 4096 Jul 29 12:01 /var/lib/kubelet/pods/8af6e32d-537e-4760-b654-bf1dd416cf7b/volumes/kubernetes.io~empty-dir/cache-volume

$ df -Th
Filesystem                        Type      Size  Used Avail Use% Mounted on
udev                              devtmpfs  5.8G     0  5.8G   0% /dev
tmpfs                             tmpfs     1.2G  4.2M  1.2G   1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv ext4       30G   14G   15G  49% /
tmpfs                             tmpfs     5.9G     0  5.9G   0% /dev/shm
tmpfs                             tmpfs     5.0M     0  5.0M   0% /run/lock
tmpfs                             tmpfs     5.9G     0  5.9G   0% /sys/fs/cgroup
/dev/nvme0n1p2                    ext4      2.0G  206M  1.6G  12% /boot
/dev/nvme0n1p1                    vfat      1.1G  6.4M  1.1G   1% /boot/efi
/dev/loop0                        squashfs   58M   58M     0 100% /snap/core20/1614
/dev/loop1                        squashfs   62M   62M     0 100% /snap/lxd/22761
tmpfs                             tmpfs     1.2G     0  1.2G   0% /run/user/1000
/dev/loop3                        squashfs   47M   47M     0 100% /snap/snapd/19459
/dev/loop4                        squashfs   60M   60M     0 100% /snap/core20/1977
/dev/loop5                        squashfs   92M   92M     0 100% /snap/lxd/24065
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/d115ca9d37d81b888613d87c251a5b73715e6e6c87797aa2da640a1568fe7abf/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/d69c146236495ec545f49398a47a96ebd9c3d7a6f27c3232ca22020a23f3566c/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/95fb6fc590c286b0a1dfec49b152927ede0de6267ce495ae6a3f85246e875813/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/1165bab25ae21f44b2e53542ad53ba0fcd09d8cd3926a2e5b06eac9c2aaa58e3/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/bc8c16a440f5c7e02fbdf04084f7084995f95d028fa791f0702d1c14724e4f5b/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/88103cf807ae5cc2639f792c6fa0b2ce2098f2bd3b00db946ad6208069249c81/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/a732a1530dd185a58a84ca866ebc4d082049f5ecfb079f42bfb4e4db0672a7d2/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/ecddecbd8bb0be0bbadc17c37b4ea684badc89bf88daae1920ffbbbd3363ec21/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/7d6e14cf214eb0436a77a72f94030819255bdce7abb177549a078fa436d6e899/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/14994dea79206eef6dca3caf2528e786aed6c882b1e03acdbac1d551c67f8909/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/704b36894705f2aabb5d3802c2071e4ea14cc2963006bd3426f1b7ce6e8b0cde/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/a31bfee878b49c68c28b36ff9fe4a7e5510639337bdff0c830dc5d37f6b87377/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/6cdce974ca5b8f5c2abc51b7cc2880df73192d440d52a964906c5437ca7eabb0/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/cb07c78e242382171ac035d9b888454f71bb393e788f7feaa7ee13d4ead5cb34/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/869afd4133b4f1415f95ab3949e26c0bd92d38034316f257c9d493889370b75b/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/68a89759992ad245f0e50af8aeca3105a709d191f82e6256fe15eec4dd9c580a/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/af4992aeefc579d7286148c31ce9a6d69592d2819c059f63db4273dc25f4a28b/shm

$ sudo crictl pods
POD ID          CREATED          STATE   NAME                               NAMESPACE   ATTEMPT   RUNTIME
af4992aeefc57   17 minutes ago   Ready   nginx-deployment-9b44bf4b5-7h2t4   default     0         (default)
...

$ ll /run/containerd/io.containerd.grpc.v1.cri/sandboxes/af4992aeefc579d7286148c31ce9a6d69592d2819c059f63db4273dc25f4a28b/shm
total 0
```

Delete the Pod: the sandbox's shm mount and the emptyDir directory on the Node both disappear

```bash
$ k delete -f emptydir.yaml
deployment.apps "nginx-deployment" deleted

$ k get po
NAME                      READY   STATUS    RESTARTS   AGE
centos-67dd9d8678-bxxdv   1/1     Running   0          3h48m
nginx                     1/1     Running   0          2d18h

$ df -Th
Filesystem                        Type      Size  Used Avail Use% Mounted on
udev                              devtmpfs  5.8G     0  5.8G   0% /dev
tmpfs                             tmpfs     1.2G  4.0M  1.2G   1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv ext4       30G   14G   15G  49% /
tmpfs                             tmpfs     5.9G     0  5.9G   0% /dev/shm
tmpfs                             tmpfs     5.0M     0  5.0M   0% /run/lock
tmpfs                             tmpfs     5.9G     0  5.9G   0% /sys/fs/cgroup
/dev/nvme0n1p2                    ext4      2.0G  206M  1.6G  12% /boot
/dev/nvme0n1p1                    vfat      1.1G  6.4M  1.1G   1% /boot/efi
/dev/loop0                        squashfs   58M   58M     0 100% /snap/core20/1614
/dev/loop1                        squashfs   62M   62M     0 100% /snap/lxd/22761
tmpfs                             tmpfs     1.2G     0  1.2G   0% /run/user/1000
/dev/loop3                        squashfs   47M   47M     0 100% /snap/snapd/19459
/dev/loop4                        squashfs   60M   60M     0 100% /snap/core20/1977
/dev/loop5                        squashfs   92M   92M     0 100% /snap/lxd/24065
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/d115ca9d37d81b888613d87c251a5b73715e6e6c87797aa2da640a1568fe7abf/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/d69c146236495ec545f49398a47a96ebd9c3d7a6f27c3232ca22020a23f3566c/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/95fb6fc590c286b0a1dfec49b152927ede0de6267ce495ae6a3f85246e875813/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/1165bab25ae21f44b2e53542ad53ba0fcd09d8cd3926a2e5b06eac9c2aaa58e3/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/bc8c16a440f5c7e02fbdf04084f7084995f95d028fa791f0702d1c14724e4f5b/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/88103cf807ae5cc2639f792c6fa0b2ce2098f2bd3b00db946ad6208069249c81/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/a732a1530dd185a58a84ca866ebc4d082049f5ecfb079f42bfb4e4db0672a7d2/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/ecddecbd8bb0be0bbadc17c37b4ea684badc89bf88daae1920ffbbbd3363ec21/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/7d6e14cf214eb0436a77a72f94030819255bdce7abb177549a078fa436d6e899/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/14994dea79206eef6dca3caf2528e786aed6c882b1e03acdbac1d551c67f8909/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/704b36894705f2aabb5d3802c2071e4ea14cc2963006bd3426f1b7ce6e8b0cde/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/a31bfee878b49c68c28b36ff9fe4a7e5510639337bdff0c830dc5d37f6b87377/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/6cdce974ca5b8f5c2abc51b7cc2880df73192d440d52a964906c5437ca7eabb0/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/cb07c78e242382171ac035d9b888454f71bb393e788f7feaa7ee13d4ead5cb34/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/869afd4133b4f1415f95ab3949e26c0bd92d38034316f257c9d493889370b75b/shm
shm                               tmpfs      64M     0   64M   0% /run/containerd/io.containerd.grpc.v1.cri/sandboxes/68a89759992ad245f0e50af8aeca3105a709d191f82e6256fe15eec4dd9c580a/shm

$ sudo ls -ld /var/lib/kubelet/pods/8af6e32d-537e-4760-b654-bf1dd416cf7b/volumes/kubernetes.io~empty-dir/cache-volume
ls: cannot access '/var/lib/kubelet/pods/8af6e32d-537e-4760-b654-bf1dd416cf7b/volumes/kubernetes.io~empty-dir/cache-volume': No such file or directory
```
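Because a disk-backed emptyDir shares the Node's root disk, one runaway writer can push the Node into disk pressure and trigger evictions. One way to contain it is a variant of emptydir.yaml that declares `ephemeral-storage` requests/limits and an emptyDir `sizeLimit`: kubelet counts emptyDir usage (together with the container's writable layer and logs) against the ephemeral-storage limit and evicts only the offending Pod when it is exceeded. The sizes are illustrative assumptions.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          resources:
            requests:
              ephemeral-storage: 1Gi   # considered by the scheduler when placing the Pod
            limits:
              ephemeral-storage: 2Gi   # kubelet evicts the Pod if local usage exceeds this
          volumeMounts:
            - mountPath: /cache
              name: cache-volume
      volumes:
        - name: cache-volume
          emptyDir:
            sizeLimit: 1Gi             # the Pod is evicted if this volume grows past 1Gi
```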

Semi-persistent Storage

The most common semi-persistent storage is hostPath, which is generally not recommended

hostPath mounts a file or directory from the Node's filesystem into the specified Pod

Use case: accessing information on the Node, for example

Log collection: /var/log/pods

System information: /proc

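Supported types: directories, character devices, and block devices (the hostPath type field also accepts files and sockets)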
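A sketch of the log-collection case: a per-Node collector mounts the Node's /var/log/pods read-only. The DaemonSet name and image are hypothetical. `type: Directory` makes the mount fail if the path does not already exist on the Node; other accepted values include `DirectoryOrCreate`, `File`, `FileOrCreate`, `Socket`, `CharDevice`, and `BlockDevice`.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-collector              # hypothetical name
spec:
  selector:
    matchLabels:
      app: log-collector
  template:
    metadata:
      labels:
        app: log-collector
    spec:
      containers:
        - name: collector
          image: example.com/log-collector:latest   # hypothetical image
          volumeMounts:
            - name: pod-logs
              mountPath: /var/log/pods
              readOnly: true       # the collector only reads the Node's log files
      volumes:
        - name: pod-logs
          hostPath:
            path: /var/log/pods
            type: Directory        # mount fails if the path does not exist on the Node
```

Running it as a DaemonSet sidesteps the first caveat below: every Node gets its own collector reading that Node's own files.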

Caveats

Pods that use the same directory may be scheduled onto different Nodes, where the directory's contents may differ

The Kubernetes scheduler cannot take the resources consumed through hostPath into account

After a Pod is deleted, its hostPath data usually remains on the Node and keeps consuming disk space

PV

pv.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 100Mi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/data"
```

PVs are usually created by the cluster administrator

```bash
$ sudo mkdir /mnt/data
$ sudo sh -c "echo 'Hello from Kubernetes storage' > /mnt/data/index.html"

$ k get pv
No resources found

$ k apply -f pv.yaml
persistentvolume/task-pv-volume created

$ k get pv -owide
NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE   VOLUMEMODE
task-pv-volume   100Mi      RWO            Retain           Available           manual                  20s   Filesystem
```

PVC: the user's request for storage

pvc.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi
```

```bash
$ k apply -f pvc.yaml
persistentvolumeclaim/task-pv-claim created

$ k get pvc -owide
NAME            STATUS   VOLUME           CAPACITY   ACCESS MODES   STORAGECLASS   AGE     VOLUMEMODE
task-pv-claim   Bound    task-pv-volume   100Mi      RWO            manual         2m40s   Filesystem

$ k get pv
NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                   STORAGECLASS   REASON   AGE
task-pv-volume   100Mi      RWO            Retain           Bound    default/task-pv-claim   manual                  3m32s
```

Pod: mount the volume through the PVC

pod.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
spec:
  volumes:
    - name: task-pv-storage
      persistentVolumeClaim:
        claimName: task-pv-claim
  containers:
    - name: task-pv-container
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: task-pv-storage
```

```bash
$ k apply -f pod.yaml
pod/task-pv-pod created

$ k get po -owide
NAME                      READY   STATUS    RESTARTS   AGE     IP               NODE      NOMINATED NODE   READINESS GATES
centos-67dd9d8678-bxxdv   1/1     Running   0          4h13m   192.168.185.20   mac-k8s   <none>           <none>
nginx                     1/1     Running   0          2d19h   192.168.185.16   mac-k8s   <none>           <none>
task-pv-pod               1/1     Running   0          56s     192.168.185.22   mac-k8s   <none>           <none>

$ ip r
default via 192.168.191.2 dev ens160 proto dhcp src 192.168.191.153 metric 100
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown
blackhole 192.168.185.0/26 proto 80
192.168.185.8 dev calia19a798b8fd scope link
192.168.185.9 dev calice2f12042d3 scope link
192.168.185.10 dev calic53d7952249 scope link
192.168.185.11 dev calicb368f46996 scope link
192.168.185.12 dev cali07e441e0d79 scope link
192.168.185.13 dev cali9ecb9fbd866 scope link
192.168.185.16 dev calic440f455693 scope link
192.168.185.20 dev calica4914fdab0 scope link
192.168.185.22 dev calie85a22d69f1 scope link
192.168.191.0/24 dev ens160 proto kernel scope link src 192.168.191.153
192.168.191.2 dev ens160 proto dhcp scope link src 192.168.191.153 metric 100

$ curl 192.168.185.22
Hello from Kubernetes storage
```

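Modify the content of the hostPath from inside the Pod: the change lands in /mnt/data on the Node and survives deleting the Pod, PVC, and PV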

```bash
$ k exec -it task-pv-pod -- bash
root@task-pv-pod:/# echo 'hello go' > /usr/share/nginx/html/index.html
root@task-pv-pod:/# exit

$ curl 192.168.185.22
hello go

$ cat /mnt/data/index.html
hello go

$ k delete -f pod.yaml -f pvc.yaml -f pv.yaml
pod "task-pv-pod" deleted
persistentvolumeclaim "task-pv-claim" deleted
persistentvolume "task-pv-volume" deleted

$ cat /mnt/data/index.html
hello go
```

Persistent Storage

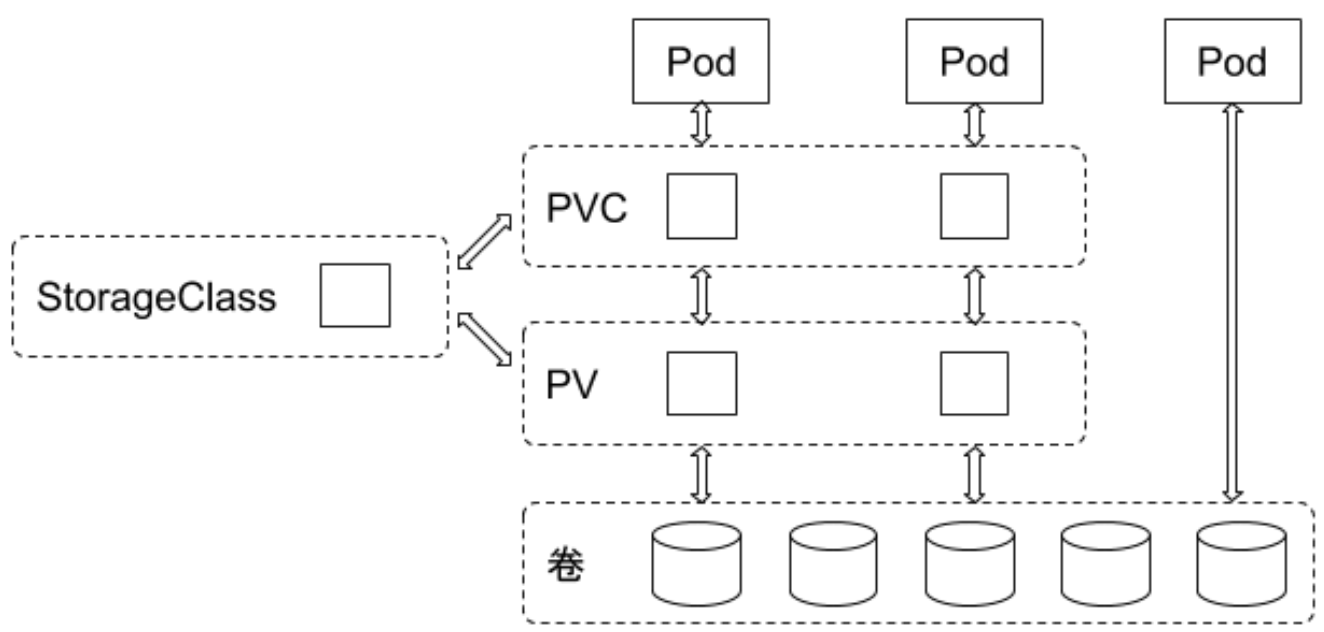

Introduces StorageClass, PersistentVolumeClaim, and PersistentVolume, so that storage is managed independently of the Pod lifecycle

Supports mainstream block storage and file storage, broadly divided into network storage and local storage

StorageClass

Describes a type (class) of storage

```bash
$ k api-resources --api-group='storage.k8s.io'
NAME                   SHORTNAMES   APIVERSION               NAMESPACED   KIND
csidrivers                          storage.k8s.io/v1        false        CSIDriver
csinodes                            storage.k8s.io/v1        false        CSINode
csistoragecapacities                storage.k8s.io/v1beta1   true         CSIStorageCapacity
storageclasses         sc           storage.k8s.io/v1        false        StorageClass
volumeattachments                   storage.k8s.io/v1        false        VolumeAttachment

$ k get sc -A
NAME                  PROVISIONER               RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
localdev-nfs-common   nfs-localdev-nfs-common   Retain          WaitForFirstConsumer   false                  26d
```

localdev-nfs-common

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    k8s.kuboard.cn/storageType: nfs_client_provisioner
  creationTimestamp: "2022-07-03T05:56:58Z"
  name: localdev-nfs-common
  resourceVersion: "574432"
  uid: 7915a5fa-606f-4d08-8498-3db67aaf9c61
parameters:
  archiveOnDelete: "false"
provisioner: nfs-localdev-nfs-common
reclaimPolicy: Retain
volumeBindingMode: WaitForFirstConsumer
```

```bash
$ k get deployments.apps -n kube-system | grep nfs
eip-nfs-localdev-nfs-common   1/1     1            1           26d
```

PersistentVolumeClaim

Created by users to declare their storage requirements; the requested access mode must be one the underlying storage type supports

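| AccessMode | Description |
| --- | --- |
| ReadWriteOnce (RWO) | The volume can be mounted read-write by a single Node |
| ReadOnlyMany (ROX) | The volume can be mounted read-only by many Nodes |
| ReadWriteMany (RWX) | The volume can be mounted read-write by many Nodes |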
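A PV advertises every access mode its backend can serve, and a PVC binds only to a PV whose list includes the modes it requests; even so, a volume is mounted with only one access mode at a time. A sketch of a statically provisioned NFS PV and a claim asking for ReadWriteMany; the server address, export path, and object names are hypothetical, and `storageClassName: manual` simply mirrors the earlier pv.yaml example.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-example             # hypothetical name
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:                     # every mode the NFS backend can serve
    - ReadWriteOnce
    - ReadOnlyMany
    - ReadWriteMany
  nfs:
    server: 192.0.2.10             # hypothetical NFS server
    path: /exports/data            # hypothetical export
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-example            # hypothetical name
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteMany                # must appear in the PV's list for binding to succeed
  resources:
    requests:
      storage: 1Gi
```

A hostPath PV like task-pv-volume, which only lists ReadWriteOnce, would not satisfy this claim.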

PersistentVolume

Static: created in advance by the cluster administrator

Dynamic: created on demand according to the PVC's request

Object Relationships

A user creates a PVC to request storage; the controller uses the PVC's StorageClass and requested size to create a PV in the storage backend

Each StorageClass corresponds to a Provisioner, and a Provisioner is itself a controller

Pods reference storage by naming a PVC
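The dynamic path can be sketched against the localdev-nfs-common StorageClass shown above: the PVC names the class, and its provisioner (nfs-localdev-nfs-common) creates a PV for the claim. Because that class uses `volumeBindingMode: WaitForFirstConsumer`, the claim should stay Pending until a Pod that uses it is scheduled. The claim and Pod names and the size are hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-dynamic-claim                 # hypothetical name
spec:
  storageClassName: localdev-nfs-common   # class -> provisioner nfs-localdev-nfs-common
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi
---
apiVersion: v1
kind: Pod
metadata:
  name: nfs-dynamic-pod                   # hypothetical name
spec:
  containers:
    - name: web
      image: nginx
      volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: nfs-dynamic-claim      # the Pod references storage only through the PVC
```

Unlike the static pv.yaml example, no PV is written by hand here; `k get pvc nfs-dynamic-claim` would be expected to move from Pending to Bound once the Pod is scheduled, with a provisioner-generated PV name.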

Best Practices

"Local" here means inside the cluster, i.e. disks attached to the cluster's own Nodes

Disks of different media types need separate StorageClasses (the StorageClass should identify the disk medium)
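For statically provisioned local disks, a common pattern is one StorageClass per medium, with no dynamic provisioner and delayed binding so that the scheduler chooses the Node before a PV is picked. A sketch; the class names `local-ssd` and `local-hdd` are hypothetical, while `kubernetes.io/no-provisioner` is the provisioner value Kubernetes documents for local volumes.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-ssd                           # hypothetical: one class per disk medium
provisioner: kubernetes.io/no-provisioner   # PVs are created statically, not by a provisioner
volumeBindingMode: WaitForFirstConsumer     # bind only after the Pod's Node is chosen
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-hdd                           # hypothetical
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
```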

When local PVs are deployed statically, create only one PV per physical disk wherever possible

Splitting a disk into multiple partitions to serve several local PVs causes IO contention
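A whole-disk local PV pins itself to one Node through `nodeAffinity`, which the scheduler honors when binding. A sketch assuming a hypothetical Node `node-1` whose SSD is mounted at `/mnt/disks/ssd0`, and a hypothetical `local-ssd` StorageClass that uses `kubernetes.io/no-provisioner`. Tools such as the Kubernetes SIG Storage local static provisioner can generate PVs of this shape by scanning a discovery directory on each Node.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-pv-node1-ssd0        # hypothetical: one PV per physical disk
spec:
  capacity:
    storage: 500Gi                 # hypothetical: the size of the whole disk
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-ssd      # hypothetical class for SSD media
  local:
    path: /mnt/disks/ssd0          # where the disk is mounted on the Node
  nodeAffinity:                    # required for local PVs: ties the PV to its Node
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node-1
```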

Local storage must be paired with disk health checks

At scale, a single cluster may have tens of thousands of local PVs, and disk failures become routine

Disks on Nodes that provide local storage must be managed flexibly and with automation

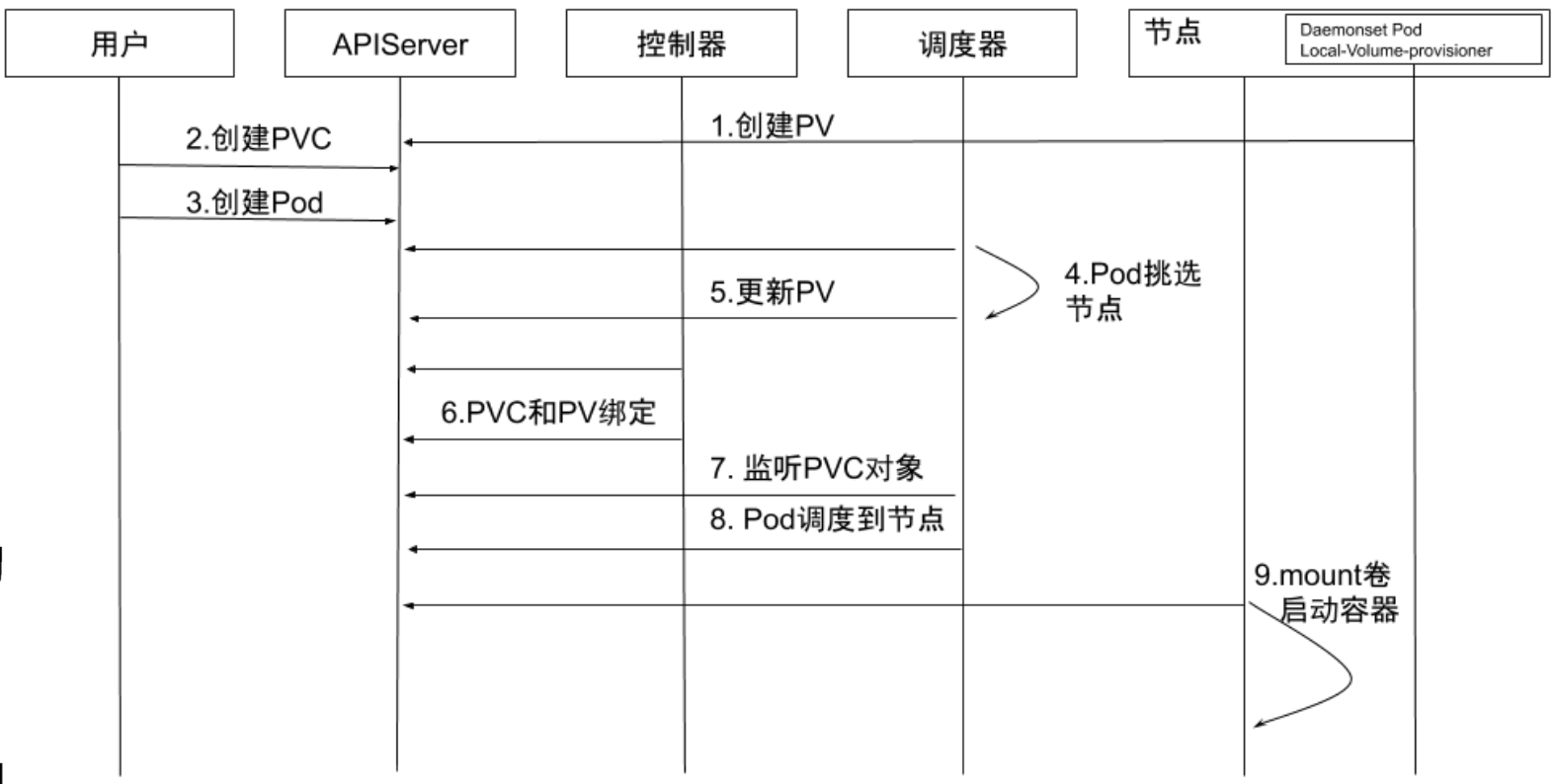

Exclusive / Static

Use case: high IOPS

The PV is created first

When a Node is racked with its disks and registers with Kubernetes, a provisioner scans the Node and creates PVs automatically

When a user creates a Pod, the Pod references a PVC

Once the scheduler decides which Node the Pod goes to, the PVC is bound to a matching PV on that Node

Shared / Dynamic

Use case: large capacity built on LVM; not exclusive (IO contention is possible)

The PVC is created first, then the PV is created dynamically

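The CSI driver has to report the storage resources available on each Node so that scheduling can take them into account; machines from different vendors report this information in different ways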

There is not yet a unified approach for how to report Node storage information or how to feed it into scheduling
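On the Kubernetes side there is one building block in this direction: storage capacity tracking. A CSI driver's external-provisioner sidecar (when started with `--enable-capacity`) publishes CSIStorageCapacity objects, the `csistoragecapacities` resource in the api-resources listing above, and the scheduler consults them for WaitForFirstConsumer volumes when the driver's CSIDriver object sets `storageCapacity: true`. It reports capacity per StorageClass and topology segment rather than per physical disk, so it does not settle every question raised above. The shape of such an object, with all names, labels, and sizes hypothetical:

```yaml
apiVersion: storage.k8s.io/v1beta1   # as served by the cluster above; storage.k8s.io/v1 from Kubernetes 1.24
kind: CSIStorageCapacity
metadata:
  name: csisc-example                # hypothetical; normally generated by external-provisioner
  namespace: kube-system
storageClassName: local-lvm          # hypothetical StorageClass served by the driver
nodeTopology:                        # which Nodes this capacity figure applies to
  matchLabels:
    topology.example.com/node: node-1   # hypothetical topology key reported by the driver
capacity: 800Gi                      # space available for new volumes of this class
maximumVolumeSize: 500Gi             # largest single volume that could be created
```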