Kubernetes - Istio

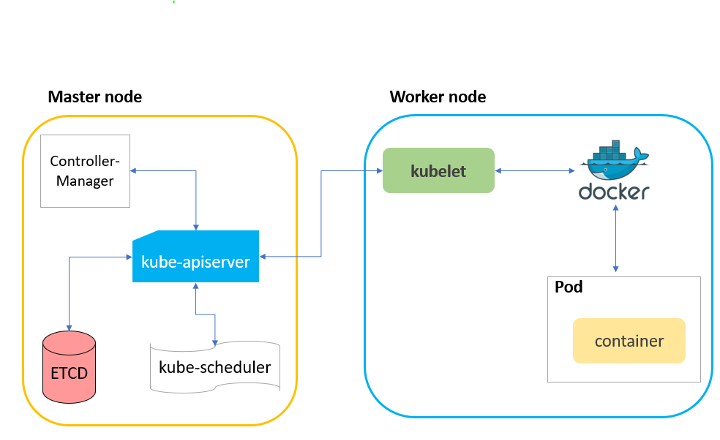

CRD

The core API group is networking

1 | $ istioctl version |

Sidecar

Create the namespace

1 | $ k create ns sidecar |

Label the new namespace so that Pods created in it automatically get a sidecar injected

1 | $ k label ns sidecar istio-injection=enabled |
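
The same label can also be declared in a manifest instead of being applied imperatively. A minimal sketch, assuming the namespace is managed declaratively (the name sidecar and the label come from the commands above):

```yaml
# Declarative equivalent of `k create ns sidecar` + `k label ns sidecar istio-injection=enabled`
apiVersion: v1
kind: Namespace
metadata:
  name: sidecar
  labels:
    # The sidecar injector webhook selects namespaces carrying this label
    istio-injection: enabled
```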

k get mutatingwebhookconfigurations.admissionregistration.k8s.io istio-sidecar-injector -oyaml

When the webhook observes a Pod creation event, it calls istio-system.istiod:443/inject

1 | apiVersion: admissionregistration.k8s.io/v1 |

Server: Deployment + Service

1 | apiVersion: apps/v1 |

Deploy the server. A sidecar is injected into the Pod, so it has 2 containers in total

1 | $ k apply -f nginx.yaml -n sidecar |

k get po -n sidecar nginx-deployment-7fc7f5758-46mzh -oyaml

istio/proxyv2本质上是一个EnvoySidecar- 还插入了一个

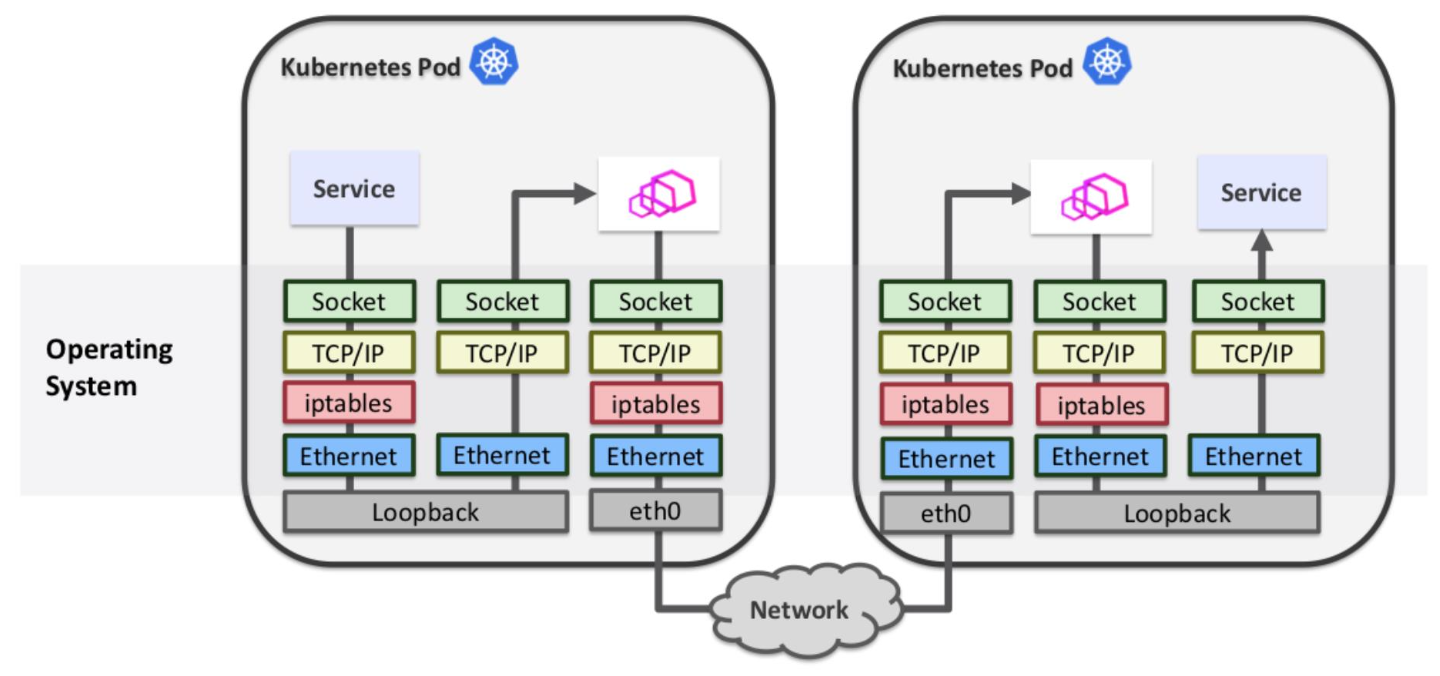

initContainer,执行istio-iptables命令,修改了容器内部的iptables规则

1 | apiVersion: v1 |

istio-proxy - sidecar; istio-init - initContainer

All containers in a Pod share the same network namespace, so the iptables rules written by the initContainer take effect for every container in the Pod

1 | $ docker ps -a | grep nginx |

Client

1 | kind: Deployment |

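Deploy the client. A sidecar is injected here as well

Data path without a sidecar:

- The Service name is first resolved through DNS, and CoreDNS returns the ClusterIP
- The request is sent to the ClusterIP; once it reaches the host, iptables/ipvs rules DNAT it to a Pod IP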

1 | $ k apply -f toolbox.yaml -n sidecar |

Data path with a sidecar

On the client side, the iptables rules have been modified and traffic is intercepted

1 | $ docker ps | grep toolbox |

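Outbound traffic first passes through the OUTPUT chain

Effect: any outbound TCP traffic is redirected to port 15001 (where Envoy listens)

- -A OUTPUT -p tcp -j ISTIO_OUTPUT
  - TCP traffic jumps to ISTIO_OUTPUT
- If none of the earlier rules in ISTIO_OUTPUT match, the traffic falls through to ISTIO_REDIRECT
- ISTIO_REDIRECT redirects it to port 15001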

listener

1 | $ istioctl --help |

istioctl proxy-config -n sidecar listener toolbox-97948fc8-ptlct -ojson

routeConfigName - 80

1 | ... |

route

1 | $ istioctl proxy-config -n sidecar route toolbox-97948fc8-ptlct |

istioctl proxy-config -n sidecar route toolbox-97948fc8-ptlct --name=80 -ojson

cluster - outbound|80||nginx.sidecar.svc.cluster.local

1 | [ |

cluster

1 | $ istioctl proxy-config -n sidecar cluster toolbox-97948fc8-ptlct |

endpoint

1 | $ istioctl proxy-config -n sidecar endpoint toolbox-97948fc8-ptlct --cluster="outbound|80||nginx.sidecar.svc.cluster.local" |

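The Envoy sidecar receives the Service and Endpoint information discovered from the current Kubernetes cluster, assembled into Envoy configuration

With the Envoy sidecar in the data path, routing no longer depends on the host's iptables/ipvs rules; the routing decision has already been made inside the sidecar

The traffic now has to be forwarded to the Nginx Pod, so it goes through the iptables OUTPUT chain once more

useradd -m --uid 1337

UID 1337 identifies this as OUTPUT traffic that originates from the Envoy sidecar itself. It does not enter ISTIO_REDIRECT again; it hits a RETURN rule instead, which avoids an infinite loop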

1 | $ docker images | grep istio/proxyv2 |

Inbound traffic first passes through PREROUTING

1 | $ docker ps | grep nginx |

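Effect: TCP traffic to non-special ports is redirected to port 15006

- -A PREROUTING -p tcp -j ISTIO_INBOUND
  - Any TCP traffic jumps to ISTIO_INBOUND
- ISTIO_INBOUND leaves a set of specific ports (15008, 15090, 15021, 15020) untouched
- Port 80 jumps to ISTIO_IN_REDIRECT, which redirects to port 15006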

listener

1 | $ istioctl proxy-config -n sidecar listener nginx-deployment-7fc7f5758-46mzh --port=15006 |

istioctl proxy-config -n sidecar listener nginx-deployment-7fc7f5758-46mzh -ojson

route - inbound|80||

1 | ... |

istioctl proxy-config -n sidecar route nginx-deployment-7fc7f5758-46mzh --name=80 -ojson

cluster - outbound|80||nginx.sidecar.svc.cluster.local

1 | [ |

istioctl proxy-config -n sidecar endpoint nginx-deployment-7fc7f5758-46mzh --cluster="outbound|80||nginx.sidecar.svc.cluster.local" -ojson

1 | [ |

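The Envoy sidecar then sends the request through OUTPUT. As on the client side, the traffic is recognized as coming from the Envoy sidecar, so it is not redirected again and finally reaches the actual Nginx container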

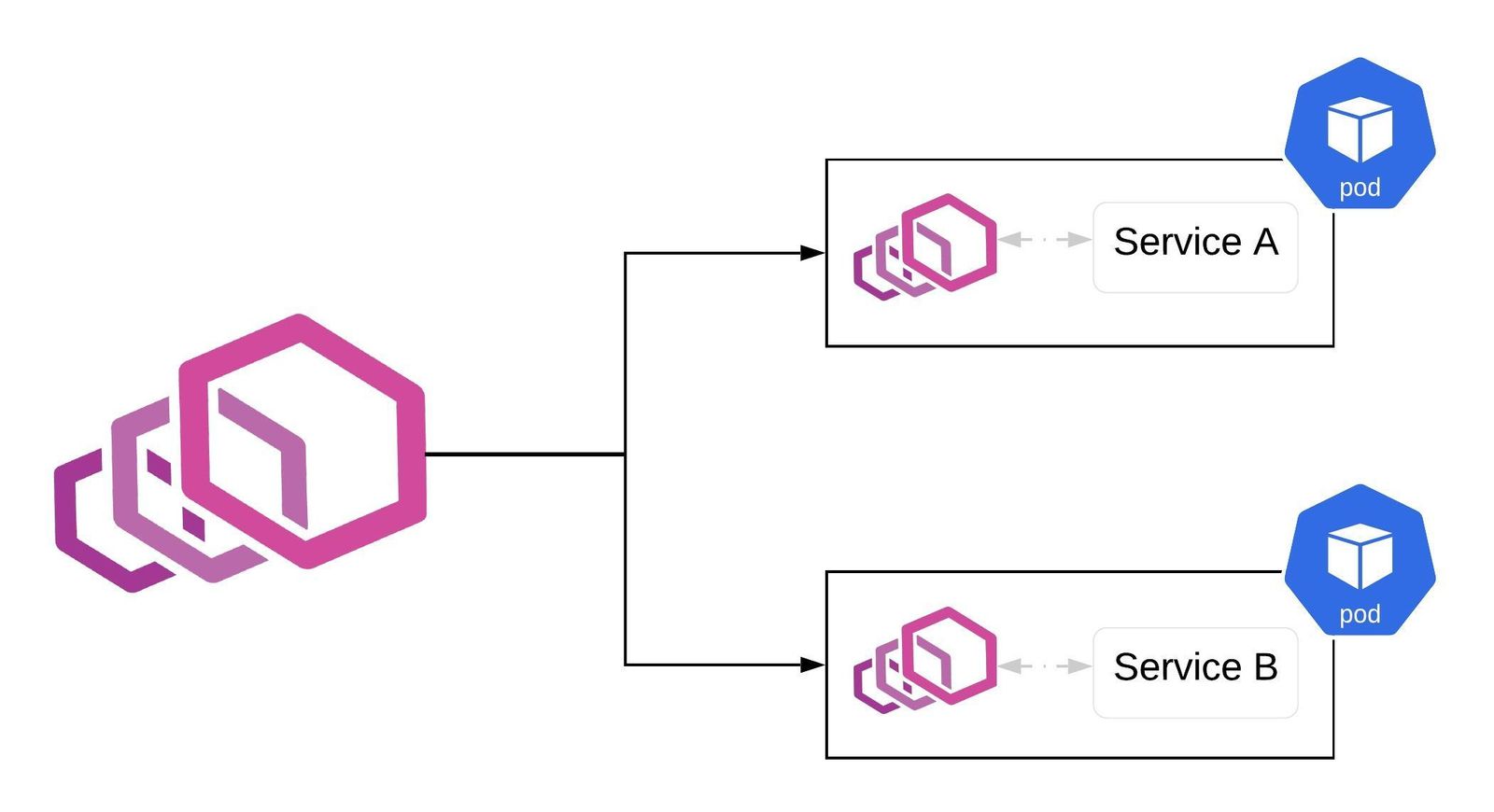

Dump the Envoy config (it is very large). By default Istio discovers all Services and Endpoints in the cluster, which is a performance risk for large clusters
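
One common way to shrink the per-proxy configuration is Istio's Sidecar resource, which restricts which hosts a workload's proxy is told about. The following is a minimal sketch, not something the original setup uses; it assumes workloads in the sidecar namespace only need to reach services in their own namespace and in istio-system:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Sidecar
metadata:
  # A Sidecar named "default" without a workloadSelector applies to the whole namespace
  name: default
  namespace: sidecar
spec:
  egress:
  - hosts:
    - "./*"              # services in the same namespace
    - "istio-system/*"   # control-plane and gateway services
```

With a scope like this, the proxy only receives listeners, clusters, and endpoints for the listed hosts, so the config dump should be noticeably smaller.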

1 | $ k exec -it -n sidecar toolbox-97948fc8-ptlct -- bash |

Envoy only listens on 15001 and 15006. The other ports in its internal configuration are not actually bound to a socket

Main capabilities

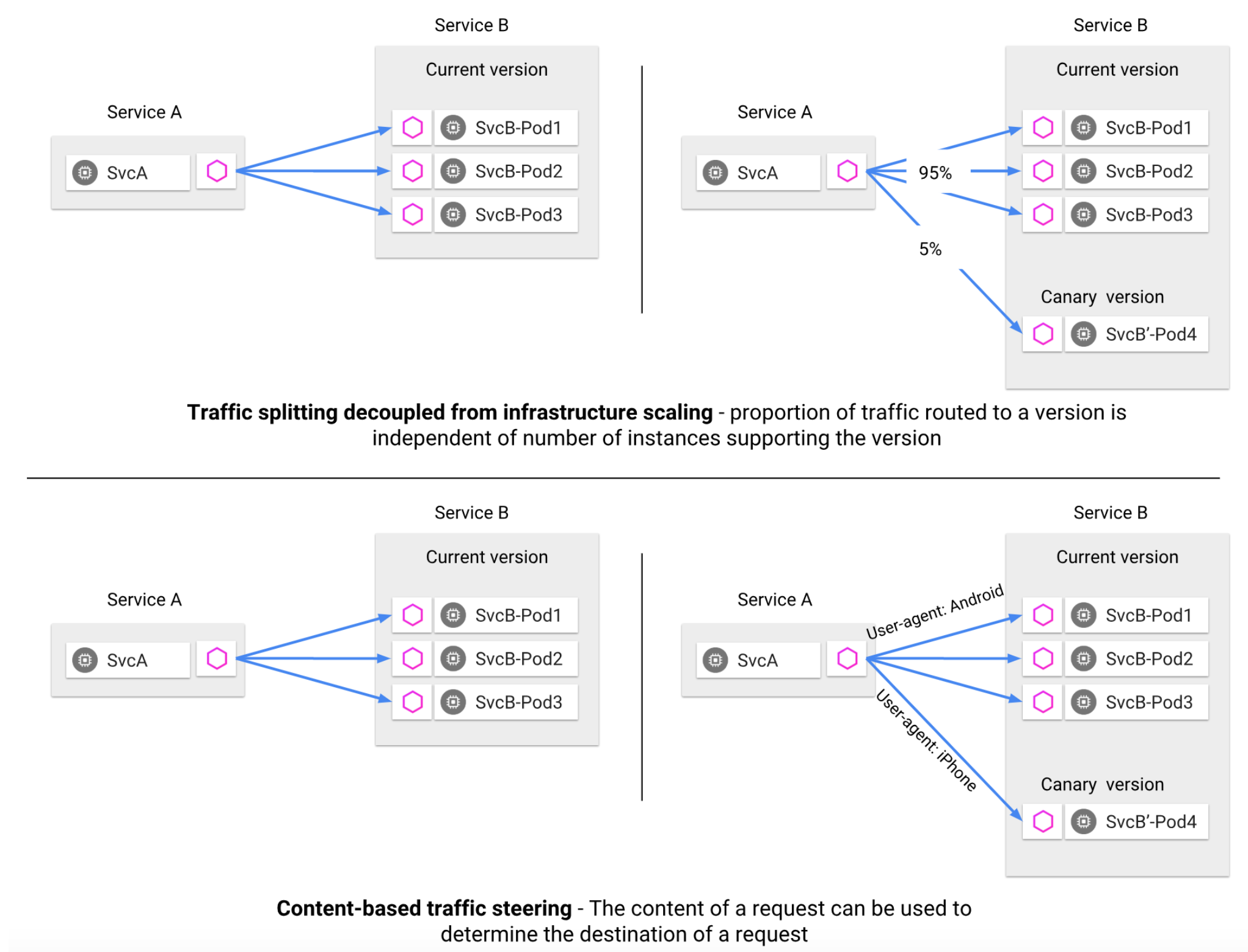

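Traffic management

Service communication

- The client is unaware of the differences between server versions
- The client keeps using the server's hostname or IP to reach it
- The Envoy sidecar intercepts and forwards all requests and responses between client and server
- Istio load-balances traffic across the instances of the same service version
- Istio does not provide DNS; applications resolve FQDNs through the Kubernetes DNS service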

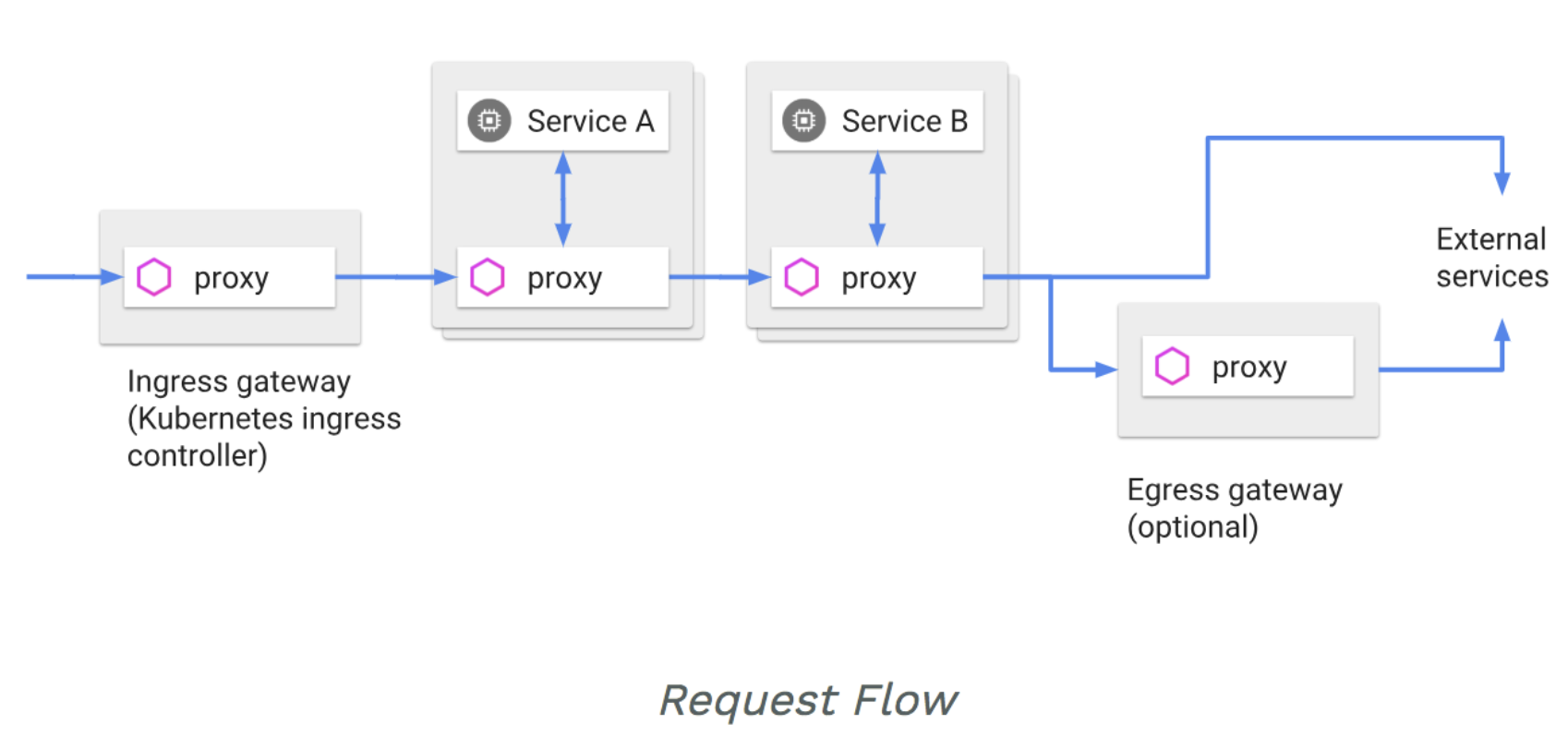

Ingress / Egress

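Istio assumes that all traffic entering and leaving the service mesh goes through Envoy proxies

- Placing Envoy in front of services makes A/B testing and canary deployments possible
- Envoy can route traffic to external web services, add timeouts, retries, and circuit breakers for them, and collect metrics

Ingress on its own is not a mature model and cannot express advanced traffic management. Istio covers inbound, east-west, and outbound traffic with a single model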

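Service discovery / Load balancing

- Istio load-balances traffic between instances in the service mesh
- Istio assumes a service registry exists that tracks the Pods/VMs of each service
  - New instances are assumed to register themselves automatically, and unhealthy instances are assumed to be removed automatically
- Pilot consumes the registry information and exposes a platform-independent service discovery interface
  - Envoy instances in the mesh perform service discovery and update their load-balancing pools accordingly
- Services in the mesh reach other services by FQDN
  - All HTTP traffic of a service is automatically rerouted through Envoy
  - Envoy distributes traffic across the instances in the load-balancing pool
- Envoy supports sophisticated load-balancing algorithms, but Istio only supports round robin, random, and weighted least request
- Envoy also periodically checks the health of every instance in the load-balancing pool
  - Envoy follows a circuit-breaker-style pattern and classifies instances as healthy or unhealthy based on the failure rate of health-check API calls
    - When an instance's failed health checks exceed a threshold, it is ejected from the load-balancing pool
    - When an instance's successful health checks exceed a threshold, it is added back to the pool
  - If an instance responds with HTTP 503, it is ejected immediately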
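
In Istio these choices are expressed on a DestinationRule. The sketch below is illustrative only: the resource name and all thresholds are assumptions, and the host reuses the nginx Service from the sidecar walkthrough. It selects one of the simple load-balancing algorithms and sets ejection thresholds through outlierDetection:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: nginx-lb            # hypothetical name
  namespace: sidecar
spec:
  host: nginx.sidecar.svc.cluster.local
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN   # alternatives include RANDOM and LEAST_REQUEST
    outlierDetection:
      consecutive5xxErrors: 5   # eject after 5 consecutive 5xx responses (arbitrary value)
      interval: 10s             # how often hosts are evaluated
      baseEjectionTime: 30s     # minimum time an ejected host stays out of the pool
      maxEjectionPercent: 50    # never eject more than half of the pool
```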

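Failure handling

- Timeouts
- Retries bounded by a timeout budget
- Flow control based on concurrent connections and requests
- Health checks on load-balancing pool members
- Fine-grained circuit breaking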
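
Limits on concurrent connections and requests are configured through a DestinationRule's connectionPool. A minimal sketch with arbitrary limits; the resource name is hypothetical and the host is the nginx Service used earlier:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: nginx-limits        # hypothetical name
  namespace: sidecar
spec:
  host: nginx.sidecar.svc.cluster.local
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 100           # cap on TCP connections to the service
      http:
        http1MaxPendingRequests: 10   # requests allowed to queue while waiting for a connection
        maxRequestsPerConnection: 10  # recycle connections after this many requests
```

Requests beyond these limits are rejected by the proxy rather than queued indefinitely, which is what gives the circuit-breaking behavior.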

Fine-tuning

- Istio can set global defaults for each service
- Clients can override the timeout and retry defaults with special HTTP headers
  - x-envoy-upstream-rq-timeout-ms
  - x-envoy-max-retries
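
Per-service defaults for timeouts and retries live on the VirtualService route. The following sketch uses assumed values and a hypothetical resource name, with the nginx Service from earlier as the target:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: nginx-defaults      # hypothetical name
  namespace: sidecar
spec:
  hosts:
  - nginx.sidecar.svc.cluster.local
  http:
  - route:
    - destination:
        host: nginx.sidecar.svc.cluster.local
        port:
          number: 80
    timeout: 3s             # overall budget for the request, retries included
    retries:
      attempts: 3           # at most 3 retries
      perTryTimeout: 1s     # each attempt gets at most 1s
      retryOn: 5xx,connect-failure
```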

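Fault injection

- Istio allows faults to be injected per protocol at the network layer
- Injected faults can be conditional and can be limited to a percentage of requests
  - Delay - adds network latency
  - Aborts - returns a specific error code directly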
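
Both fault types map to the fault block of a VirtualService HTTP route. A minimal sketch with made-up percentages and values (the resource name is hypothetical; the host is the nginx Service from earlier):

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: nginx-fault         # hypothetical name
  namespace: sidecar
spec:
  hosts:
  - nginx.sidecar.svc.cluster.local
  http:
  - fault:
      delay:
        percentage:
          value: 50         # delay half of the requests
        fixedDelay: 5s
      abort:
        percentage:
          value: 10         # fail 10% of the requests outright
        httpStatus: 500
    route:
    - destination:
        host: nginx.sidecar.svc.cluster.local
```

Adding a match block to the route would restrict the fault to requests that meet a specific condition, such as a header value.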

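Rule configuration

| Key | Value |
|---|---|
| VirtualService | Defines routing rules in Istio and controls how requests are routed to a service |
| DestinationRule | The set of policies applied to a request after VirtualService routing has taken effect |
| ServiceEntry | Enables requests to services outside the Istio mesh |
| Gateway | Configures a load balancer for HTTP/TCP traffic, usually at the edge of the mesh, to enable ingress traffic for an application |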
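
ServiceEntry is the least self-explanatory of the four, so here is a minimal sketch. The external host api.example.com and the resource name are placeholders, not something from the original setup:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: ServiceEntry
metadata:
  name: external-api        # hypothetical name
spec:
  hosts:
  - api.example.com         # placeholder external hostname
  location: MESH_EXTERNAL   # the service lives outside the mesh
  ports:
  - number: 443
    name: https
    protocol: TLS
  resolution: DNS           # resolve the hostname through DNS
```

Once the host is registered this way, VirtualService and DestinationRule policies can be attached to it like to any in-mesh service.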

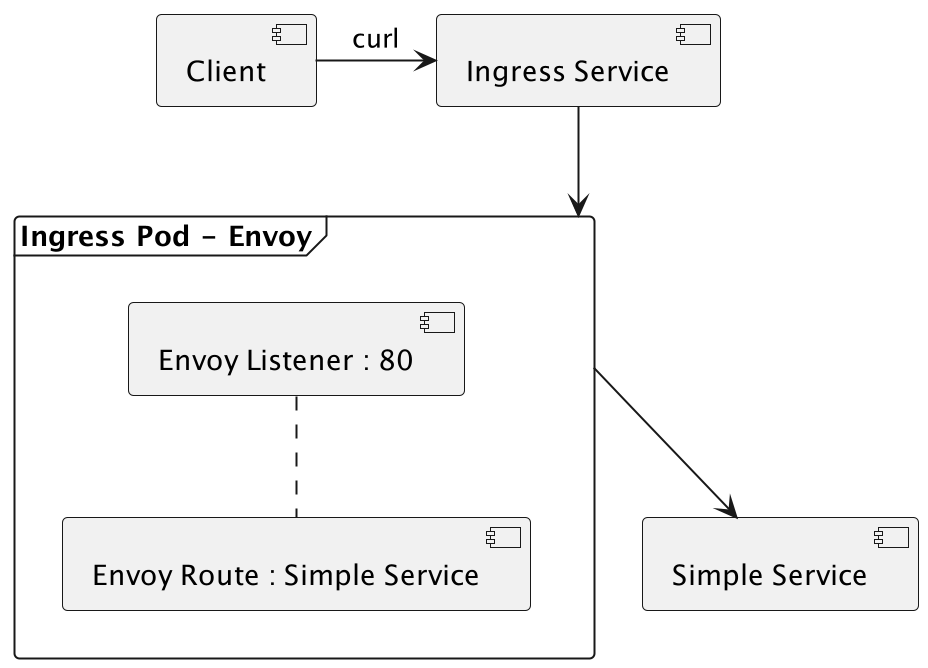

Ingress

Create the namespace

1 | $ k create ns simple |

Deployment + Service

1 | apiVersion: apps/v1 |

1 | $ k apply -f simple.yaml -n simple |

Publish the service outside the cluster through a Gateway

1 | apiVersion: networking.istio.io/v1beta1 |

- VirtualService - corresponds to an Envoy route
  - Requests with host simple.zhongmingmao.io on port 80 are forwarded to simple.simple.svc.cluster.local:80
  - gateways - simple, which ties the VirtualService to the Gateway
- Gateway - corresponds to an Envoy listener
  - The selector istio: ingressgateway actually selects istio-ingressgateway-779c475c44-j4tds
  - Semantics: insert rules into the istio-ingressgateway Pod
  - Listen on http://simple.zhongmingmao.io:80 on the ingressgateway
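
Put together, the two resources described above could look roughly like this. It is a sketch reconstructed from the description, not the author's original manifest: the hostnames, the gateway name simple, and the selector come from the text, while the VirtualService name and the port name are assumptions:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: simple              # assumed name
spec:
  gateways:
  - simple                  # binds these routes to the Gateway below
  hosts:
  - simple.zhongmingmao.io
  http:
  - match:
    - port: 80
    route:
    - destination:
        host: simple.simple.svc.cluster.local
        port:
          number: 80
---
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: simple
spec:
  selector:
    istio: ingressgateway   # selects the istio-ingressgateway Pod
  servers:
  - hosts:
    - simple.zhongmingmao.io
    port:
      name: http-simple     # assumed port name
      number: 80
      protocol: HTTP
```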

istio-ingressgateway-779c475c44-j4tds carries the label istio=ingressgateway and is itself essentially an Envoy

1 | $ k get po -n istio-system --show-labels |

Apply the VirtualService and Gateway

1 | $ k apply -f istio-specs.yaml -n simple |

Refine the layer-7 routing rules

1 | apiVersion: networking.istio.io/v1beta1 |

1 | $ k apply -f istio-specs.yaml -n simple |
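
As an illustration of what a refined layer-7 rule can look like, the sketch below matches on a URI and rewrites it before forwarding. The paths /simple/hello and /hello are invented for the example and are not taken from the original manifest:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: simple              # assumed name
spec:
  gateways:
  - simple
  hosts:
  - simple.zhongmingmao.io
  http:
  - match:
    - uri:
        exact: "/simple/hello"   # hypothetical external path
    rewrite:
      uri: "/hello"              # hypothetical path served by the backend
    route:
    - destination:
        host: simple.simple.svc.cluster.local
        port:
          number: 80
```

Requests to any other path no longer match a route on this host, which is the point of narrowing the rule.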

HTTPS

Create the namespace

1 | $ k create ns securesvc |

Deployment + Service

1 | apiVersion: apps/v1 |

1 | $ k create -f httpserver.yaml -n securesvc |

Generate a certificate. It has to be stored in istio-system, because Istio needs TLS material when it listens on 443

1 | $ openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -subj '/O=zhongmingmao Inc./CN=*.zhongmingmao.io' -keyout zhongmingmao.io.key -out zhongmingmao.io.crt |

VirtualService + Gateway

1 | apiVersion: networking.istio.io/v1beta1 |

1 | $ k apply -f istio-specs.yaml -n securesvc |

For HTTPS, a single port 443 may serve many domains, so many certificates have to be bound to it
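
A Gateway handles this by declaring one server entry per domain, each pointing at its own TLS secret in istio-system. In this sketch only httpsserver.zhongmingmao.io comes from the walkthrough; the Gateway name, the second host, and both credentialName values are placeholders:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: httpsserver         # assumed name
spec:
  selector:
    istio: ingressgateway
  servers:
  - hosts:
    - httpsserver.zhongmingmao.io
    port:
      name: https-httpsserver
      number: 443
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: httpsserver-credential   # placeholder secret name in istio-system
  - hosts:
    - other.zhongmingmao.io                    # placeholder second domain
    port:
      name: https-other
      number: 443
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: other-credential         # placeholder secret name in istio-system
```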

The request relies on Server Name Indication to select the certificate (--resolve adds an entry to curl's DNS cache, which is equivalent to editing /etc/hosts)

1 | $ curl --resolve httpsserver.zhongmingmao.io:443:10.108.4.104 https://httpsserver.zhongmingmao.io -v -k |

Canary

Create the namespace

1 | $ k create ns canary |

Canary V1

1 | apiVersion: apps/v1 |

1 | $ k apply -f canary-v1.yaml -n canary |

Client

1 | apiVersion: apps/v1 |

1 | $ k apply -f toolbox.yaml -n canary |

Canary V2

1 | apiVersion: apps/v1 |

1 | $ k apply -f canary-v2.yaml -n canary |

VirtualService + DestinationRule

1 | apiVersion: networking.istio.io/v1beta1 |

Access Canary V2

1 | $ k apply -f istio-specs.yaml -n canary |

Access Canary V1

1 | $ k exec -n canary toolbox-97948fc8-ptlct -- curl -sI canary |
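
A canary split of this kind is typically built from a DestinationRule that names the two versions as subsets and a VirtualService that decides who gets which. The sketch below is not the author's manifest: the version labels, the user header, and its value are assumptions, and only the canary host name is taken from the curl command above:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: canary
spec:
  host: canary
  subsets:
  - name: v1
    labels:
      version: v1           # assumed Pod label on the V1 Deployment
  - name: v2
    labels:
      version: v2           # assumed Pod label on the V2 Deployment
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: canary
spec:
  hosts:
  - canary
  http:
  - match:
    - headers:
        user:               # hypothetical header used to opt in to V2
          exact: tester
    route:
    - destination:
        host: canary
        subset: v2
  - route:                  # everything else stays on V1
    - destination:
        host: canary
        subset: v1
```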

Fault injection

1 | apiVersion: networking.istio.io/v1beta1 |

5.755 s ≈ 6 s

1 | $ k apply -f istio-specs.yaml -n canary |

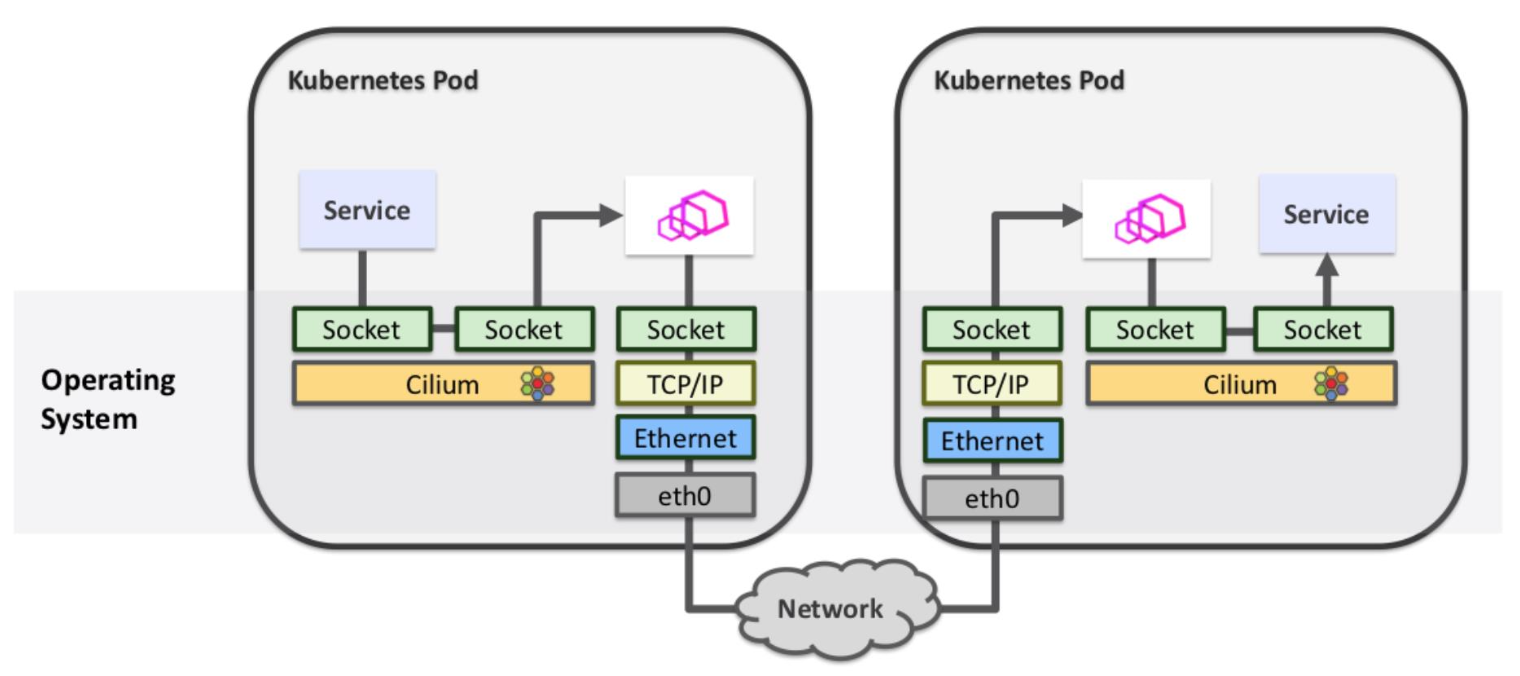

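Network stack

iptables/ipvs are built on the netfilter framework (the kernel protocol stack), which leaves little room for optimization

Cilium is based on eBPF

- Recent Linux kernels expose many hooks where custom programs (not just rules) can be attached
- This requires deep familiarity with the Linux kernel: the program is written in C and compiled to eBPF bytecode
- It is then loaded into the Linux kernel by an eBPF user-space program
- Cilium makes full use of these hooks
  - Establishing a TCP connection still goes through the kernel protocol stack and still has overhead
  - Once connected, data can be copied directly from socket to socket without traversing the kernel protocol stack again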