DevOps - Foundation

Fundamentals

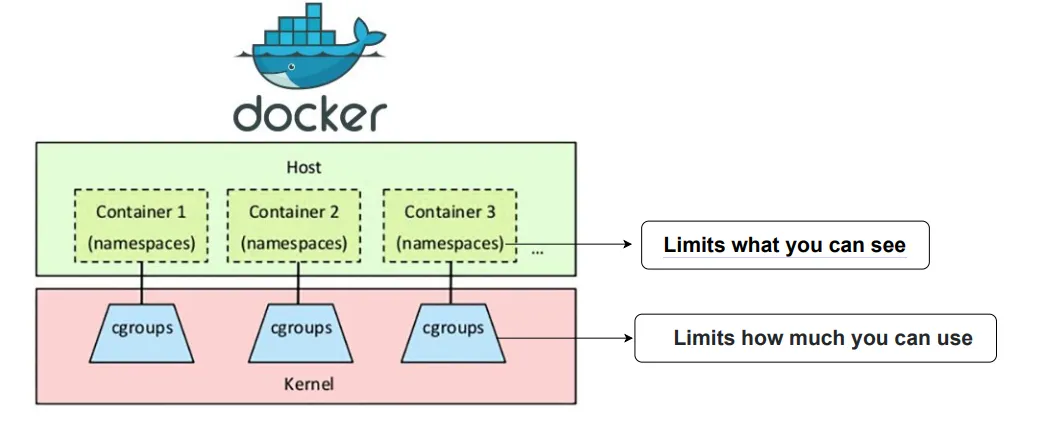

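Core Principles

Isolation Mechanisms

Run the Nginx image

$ docker run -d nginx:latest

Get the container's PID on the host

$ docker inspect --format '{{.State.Pid}}' d821171713e9

Inspect the process

$ ps -aux | grep 10909

List every namespace type the process belongs to

$ lsns --task 10909

Enter the mount (filesystem) namespace (no Docker daemon required)

$ hostname

Changing the hostname from inside the container also changes the host's hostname, because the shell has not entered the uts namespace

$ nsenter --target 10909 --mount bash

Enter all namespaces and change the hostname again; this time the host's hostname is not modified

$ nsenter --target 10909 --all bash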
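Whether a hostname change leaks to the host comes down to whether the two processes share a uts namespace. On Linux each namespace shows up as a symlink under /proc/<pid>/ns whose target looks like `uts:[4026531838]`. The sketch below parses such targets and compares them; the inode numbers are made-up illustrative values, and `read_ns_link` is a hypothetical helper that only works on a Linux host with permission to inspect the target PID.

```python
import os
import re
from typing import Tuple

# Matches symlink targets such as "uts:[4026531838]" or "pid_for_children:[4026531836]".
NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(link: str) -> Tuple[str, int]:
    """Split a /proc/<pid>/ns/* symlink target into (namespace kind, inode)."""
    match = NS_LINK.match(link)
    if match is None:
        raise ValueError(f"not a namespace link: {link!r}")
    return match.group("kind"), int(match.group("inode"))


def shares_namespace(link_a: str, link_b: str) -> bool:
    """Two processes share a namespace when both kind and inode match."""
    return parse_ns_link(link_a) == parse_ns_link(link_b)


def read_ns_link(pid: int, kind: str) -> str:
    """Hypothetical helper: read the real link on a Linux host."""
    return os.readlink(f"/proc/{pid}/ns/{kind}")


# Illustrative values only, not taken from a real host.
host_uts = "uts:[4026531838]"
container_uts = "uts:[4026532505]"

print(shares_namespace(host_uts, host_uts))       # same uts namespace: hostname changes are shared
print(shares_namespace(host_uts, container_uts))  # different uts namespace: hostname changes are isolated
```

With only `--mount` passed to nsenter, the shell keeps the host's uts link, which is why the first hostname change is visible on the host.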

Implementing a Container

uts

package main

This isolates the uts namespace

$ hostname

pid

package main

Simulate a rootfs

$ dpu alpine:3.19.1

Run the Go program

$ go run main.go run /bin/sh

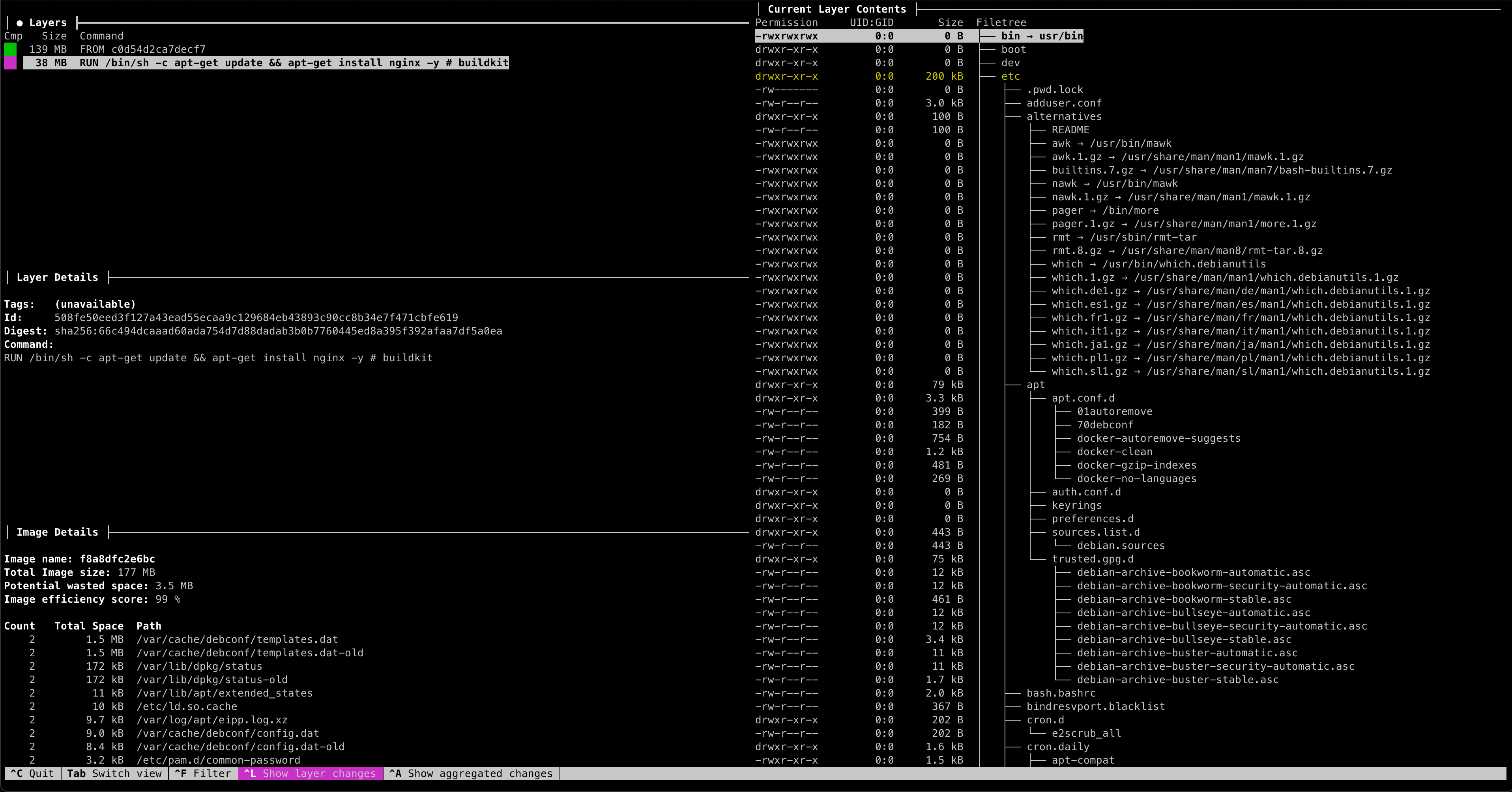

Image

The image has 2 layers in total (FROM + RUN); each layer is named after the hash of its content
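Content-addressed naming can be sketched in a few lines: the name is derived from the bytes, so identical content always gets the same name and any change produces a new one. This is a minimal illustration assuming SHA-256 over the raw bytes of a layer archive; the byte strings are placeholders, not real layer tarballs.

```python
import hashlib


def layer_digest(layer_bytes: bytes) -> str:
    """Return a content-addressed name in the familiar "sha256:<hex>" form."""
    return "sha256:" + hashlib.sha256(layer_bytes).hexdigest()


# Placeholder payloads standing in for layer archives.
base_layer = b"contents produced by FROM"
run_layer = b"contents produced by RUN"

print(layer_digest(base_layer))
print(layer_digest(run_layer))
# Identical content yields the identical name, which is what makes layers shareable.
print(layer_digest(base_layer) == layer_digest(b"contents produced by FROM"))
```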

FROM debian:latest

$ docker build -t nginx:v1 -f Dockerfile .

$ dils

repositories

{

oci-layout

{

manifest.json

[

index.json

{

Overlay

lower_dir is the read-only layer, upper_dir is the read-write layer, merged_dir is the directory the user ultimately sees, and work_dir stores intermediate results

$ tree

$ mount -t overlay -o lowerdir=lower_dir/,upperdir=upper_dir/,workdir=work_dir/ none merged_dir/

A file with the same name is overridden by the upper layer

$ cat lower_dir/same.txt

Copy-on-write (in the upper layer)

$ cat lower_dir/1.txt | wc -l

Deletion by marking (a whiteout in the upper layer)

$ rm -rf merged_dir/2.txt
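The three behaviors above (upper wins, copy-on-write, deletion by marking) can be modeled with two dictionaries. This is a toy in-memory sketch, not how the kernel implements overlayfs; `OverlayView` and `WHITEOUT` are hypothetical names, and the file names simply mirror the ones used in the commands.

```python
WHITEOUT = object()  # marker meaning "deleted in the upper layer"


class OverlayView:
    """Toy model of merged_dir built from a read-only lower and a writable upper."""

    def __init__(self, lower):
        self.lower = dict(lower)  # read-only layer, never modified below
        self.upper = {}           # read-write layer

    def read(self, name):
        if name in self.upper:
            if self.upper[name] is WHITEOUT:
                raise FileNotFoundError(name)
            return self.upper[name]       # the upper layer overrides the lower one
        if name in self.lower:
            return self.lower[name]
        raise FileNotFoundError(name)

    def append(self, name, data):
        # Copy-on-write: the content is copied up, then changed in upper only.
        self.upper[name] = self.read(name) + data

    def remove(self, name):
        self.read(name)                   # raises if the file is not visible
        if name in self.lower:
            self.upper[name] = WHITEOUT   # hide the lower file instead of deleting it
        else:
            del self.upper[name]

    def listdir(self):
        names = set(self.lower) | set(self.upper)
        return sorted(n for n in names if self.upper.get(n) is not WHITEOUT)


view = OverlayView({"1.txt": "a\n", "2.txt": "b\n"})
view.append("1.txt", "x\n")   # lower copy of 1.txt stays untouched
view.remove("2.txt")          # 2.txt still exists in lower, but is hidden
print(view.listdir())
print(repr(view.lower["1.txt"]), repr(view.read("1.txt")))
```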

Docker Image

$ dils

Dockerfile

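Instructions

ENTRYPOINT / CMD

- When no ENTRYPOINT is set, a shell-form CMD is run through /bin/sh -c, so a CMD that names an executable can run on its own
- When both are set, the container runs ENTRYPOINT + CMD (CMD supplies the default arguments)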
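How ENTRYPOINT and CMD combine into the final command line can be sketched as below. This is a simplified model of the rules in the Dockerfile reference, under the assumption that exec form is represented as a list and shell form as a string; `to_argv` and `container_argv` are hypothetical helper names.

```python
def to_argv(instruction):
    """Exec form (a list) is used as-is; shell form (a string) is wrapped in /bin/sh -c."""
    if instruction is None:
        return []
    if isinstance(instruction, str):
        return ["/bin/sh", "-c", instruction]
    return list(instruction)


def container_argv(entrypoint, cmd):
    """Combine ENTRYPOINT and CMD into the process argv."""
    if isinstance(entrypoint, str):
        # A shell-form ENTRYPOINT ignores CMD entirely.
        return to_argv(entrypoint)
    return to_argv(entrypoint) + to_argv(cmd)


# No ENTRYPOINT, exec-form CMD: CMD is the whole command.
print(container_argv(None, ["nginx", "-g", "daemon off;"]))
# No ENTRYPOINT, shell-form CMD: wrapped in /bin/sh -c.
print(container_argv(None, "echo hi"))
# Exec-form ENTRYPOINT plus CMD: CMD becomes the default arguments.
print(container_argv(["/docker-entrypoint.sh"], ["nginx"]))
```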

ADD / COPY

- Prefer COPY; its semantics are clearer

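Best Practices

- Reduce the number of layers
- Move frequently changing layers toward the end to make the most of the build cache
- Use .dockerignore
- Reduce image size
  - Base images: alpine, slim
  - Use multi-stage builds
- Security

Images

Use alpine images with caution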

| Tag | Size | Note |

|---|---|---|

| latest | 381.88 MB | gnu c library |

| slim | 70.28 MB | gnu c library |

| alpine | 47.02 MB | musl libc |

package main

# step 1

With cgo enabled, go build can produce a dynamically linked binary, which then cannot find its shared libraries in the runtime image

$ docker build -t go-alpine:v1 -f Dockerfile . --no-cache

Static linking: -tags netgo -ldflags '-extldflags "-static"'

# step 1

$ docker build -t go-alpine:v2 -f Dockerfile . --no-cache

Static linking: CGO_ENABLED=0

# step 1

$ docker build -t go-alpine:v3 -f Dockerfile . --no-cache

Use alpine images consistently for both the build and the runtime stage

# step 1

$ docker build -t go-alpine:v4 -f Dockerfile . --no-cache

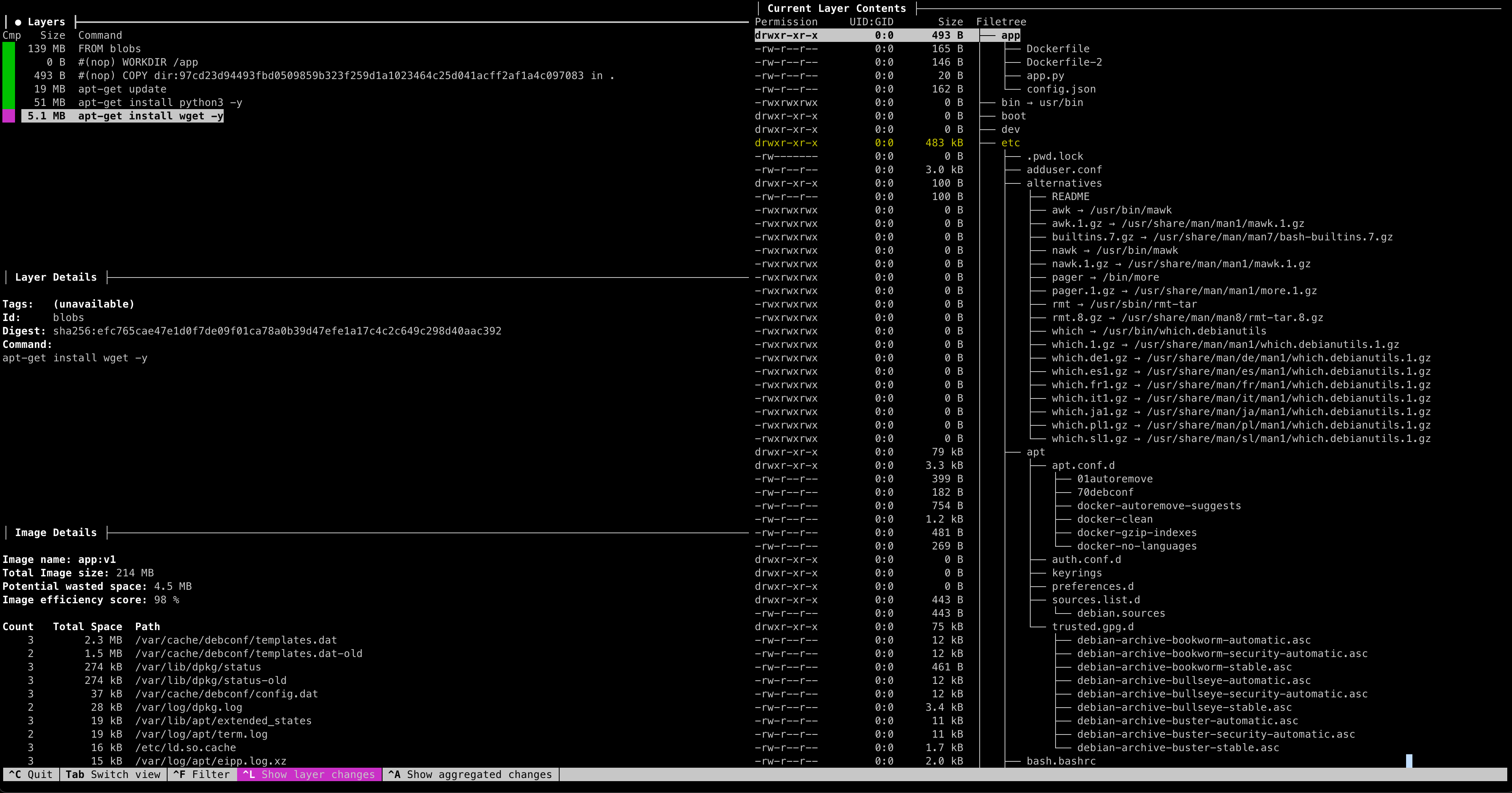

Multi-Stage Builds

Main purpose: reducing image size

Single stage

FROM golang:1.17

$ docker build -t app:v1 -f Dockerfile .

Multi-stage

# step 1

$ docker build -t app:v2 -f Dockerfile-multi-stage .

Security

Avoid running as the root user

FROM ubuntu:latest

$ docker build -t user:v1 -f Dockerfile .

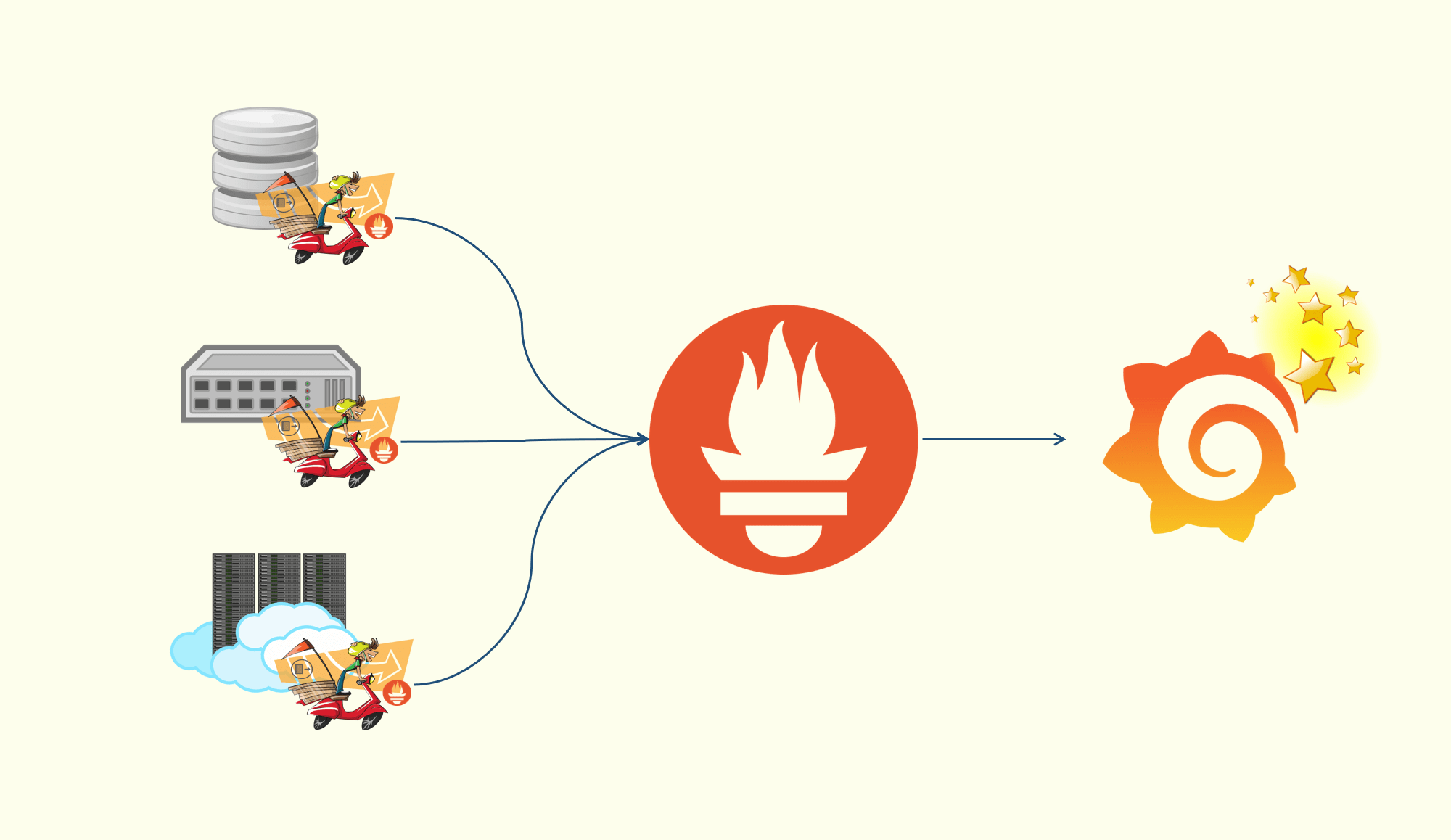

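Image Builds

DinD

Insecure

- docker build depends on the Docker daemon
- DinD mounts the host's Docker socket (which is highly privileged) into the container, exposing the host's privileges to the container

$ docker run -it -v /var/run/docker.sock:/var/run/docker.sock docker:dind docker -v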

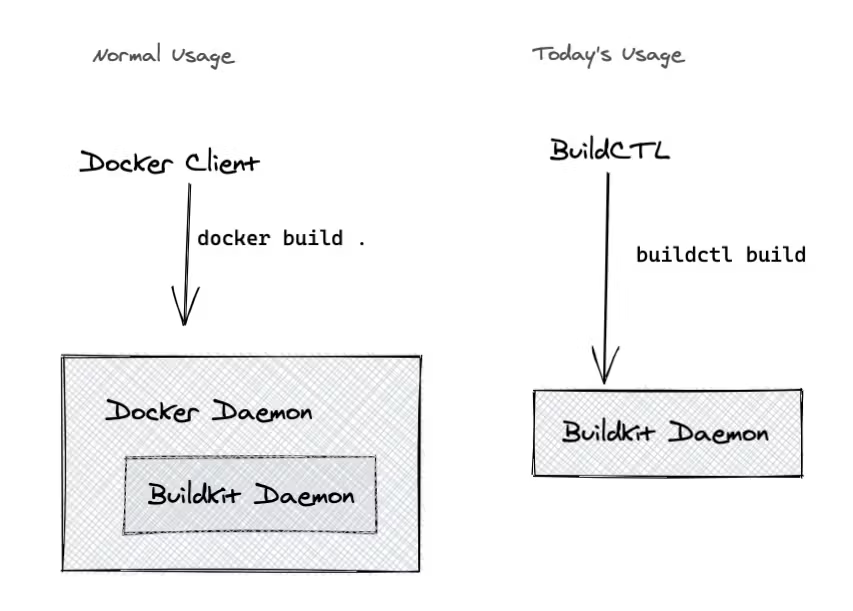

BuildKit

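- buildx: the default build tool built into Docker, far more efficient than the legacy Docker builder
- Does not depend on the Docker daemon, but does depend on the BuildKit daemon (which can be deployed directly in a container)

Start the buildkitd container (used to build images)

$ docker run -d --name buildkitd --privileged moby/buildkit:rootless

Build the image (specify the build context, which is sent to the buildkitd container)

$ buildctl --addr docker-container://buildkitd build \

| Option | Desc |
|---|---|
| --addr | buildkitd address (default: "unix:///run/buildkit/buildkitd.sock") |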

Kaniko

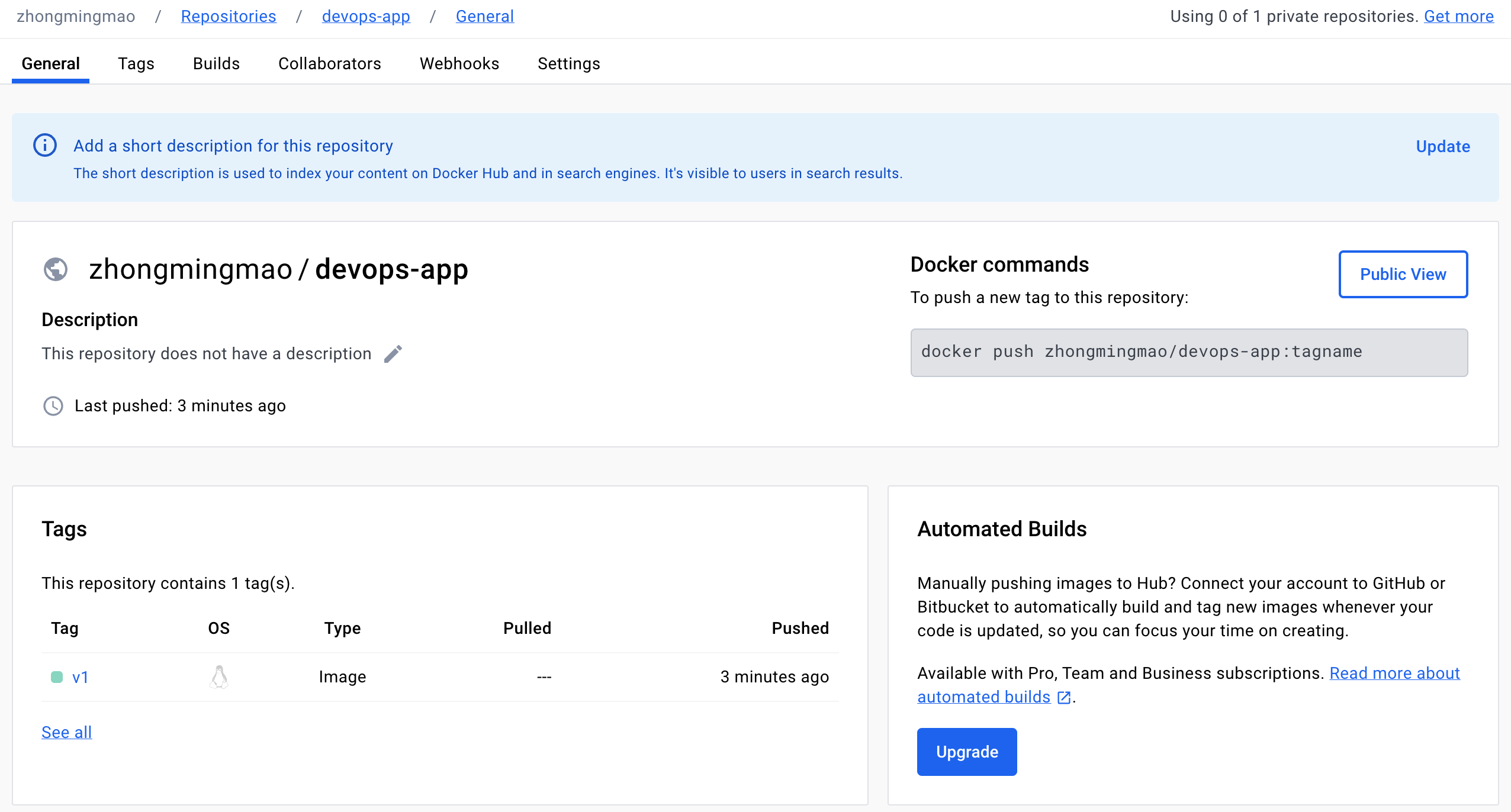

Example

Credentials Kaniko uses to push images

$ echo -n "zhongmingmao:${docker_hub_access_token}" | base64

{
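The credential is just `username:token` encoded in base64 and placed in a Docker-style config.json. The sketch below builds that structure; the registry key `https://index.docker.io/v1/` (the conventional Docker Hub key) and the token value are assumptions for illustration, and `docker_config` is a hypothetical helper.

```python
import base64
import json


def docker_config(username, token, registry="https://index.docker.io/v1/"):
    """Build a Docker-style config.json dict holding basic-auth credentials."""
    auth = base64.b64encode(f"{username}:{token}".encode()).decode()
    return {"auths": {registry: {"auth": auth}}}


# "example-token" is a placeholder, not a real access token.
config = docker_config("zhongmingmao", "example-token")
print(json.dumps(config, indent=2))
```

Note that in shell the variable only expands inside double quotes; with single quotes the literal text `${docker_hub_access_token}` would be encoded instead of the token.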

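FROM debian:latest

print("Hello World")

$ tree

Drawbacks

- No support for cross-platform builds (there is no virtualization)
- Builds are relatively slow
- Caching is less efficient than a local cache and usually relies on the network and a repository
- Resource consumption is relatively high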

How Builds Work

Legacy Builder

Builds run serially, which is inefficient

FROM debian:latest

print("Hello World")

Disable BuildKit: DOCKER_BUILDKIT=0

$ DOCKER_BUILDKIT=0 docker build -t app:v1 . --no-cache

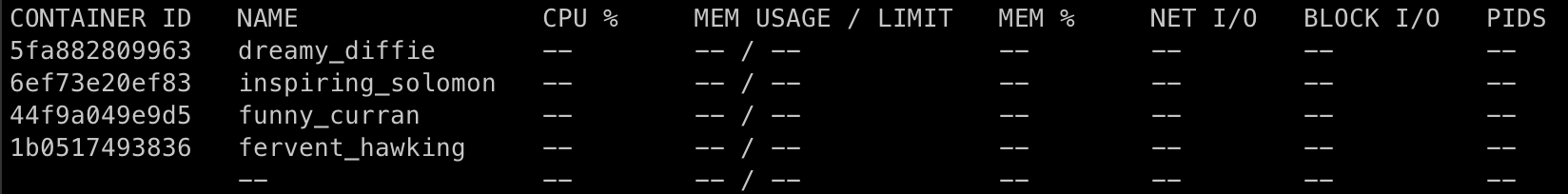

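Monitor Docker resource usage: containers are created serially

$ docker stats -a

Inspect the history: there are many intermediate images

$ dils

Build process (serial)

- First, docker run starts the first intermediate container from the base image
- The first build instruction in the Dockerfile is executed inside that intermediate container
- Finally, docker commit commits the intermediate container's read-write layer as a read-only image layer
- The next build instruction is executed

6 layers in total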

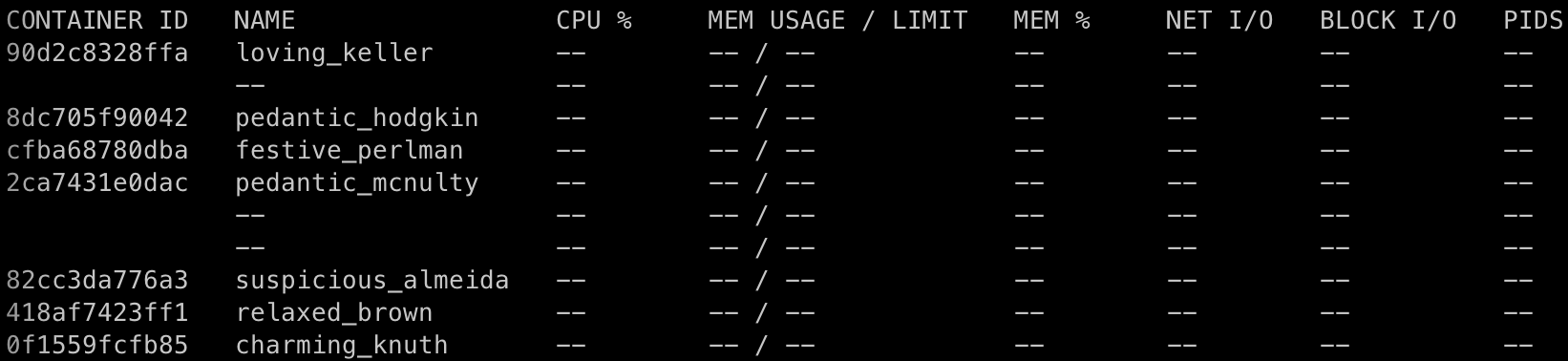

BuildKit

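Multi-Stage Builds

FROM alpine As kubectl

Legacy Builder

Serial (the Dockerfile has 17 lines; 15 steps are detected and executed one after another)

$ DOCKER_BUILDKIT=0 docker build -t app:v2.1 . --no-cache

$ dils

BuildKit

Parallel (using DAG dependency analysis: terraform and kubectl do not depend on each other, so they can be built in parallel)

$ docker build -t app:v2.2 . --no-cache

$ dils

Observing with docker stats -a shows that no new intermediate containers are created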
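The DAG idea can be sketched as grouping stages into "waves": every stage in a wave has all of its dependencies satisfied, so the stages within a wave can run concurrently. This is only an illustration of dependency analysis, not BuildKit's actual scheduler; the stage name `final` is a hypothetical last stage that copies from the kubectl and terraform stages.

```python
def parallel_waves(deps):
    """Group stages into waves; stages in the same wave have no unmet dependencies."""
    remaining = {stage: set(needs) for stage, needs in deps.items()}
    waves = []
    while remaining:
        ready = sorted(stage for stage, needs in remaining.items() if not needs)
        if not ready:
            raise ValueError("dependency cycle detected")
        waves.append(ready)
        for stage in ready:
            del remaining[stage]
        for needs in remaining.values():
            needs.difference_update(ready)
    return waves


# kubectl and terraform are independent; "final" (hypothetical) needs both.
stages = {
    "kubectl": set(),
    "terraform": set(),
    "final": {"kubectl", "terraform"},
}
print(parallel_waves(stages))
```

A serial builder would instead walk the Dockerfile top to bottom, which is why the legacy run reports one step after another.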

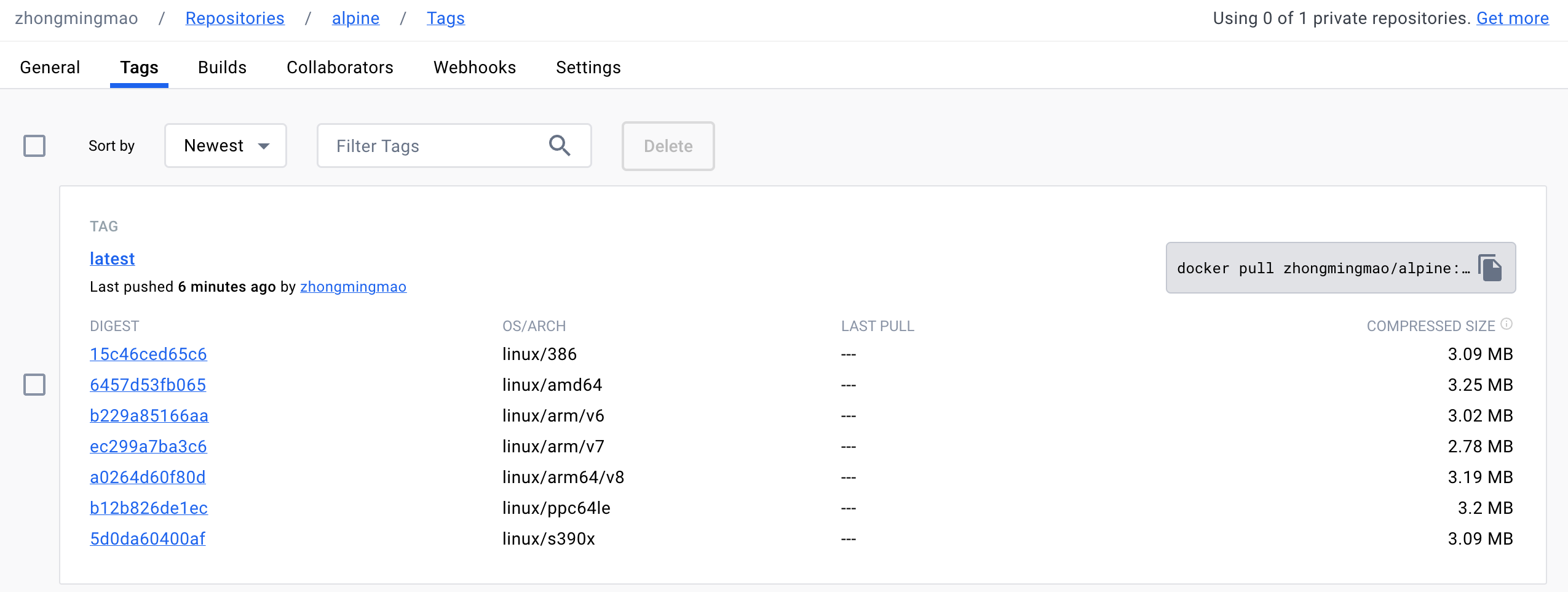

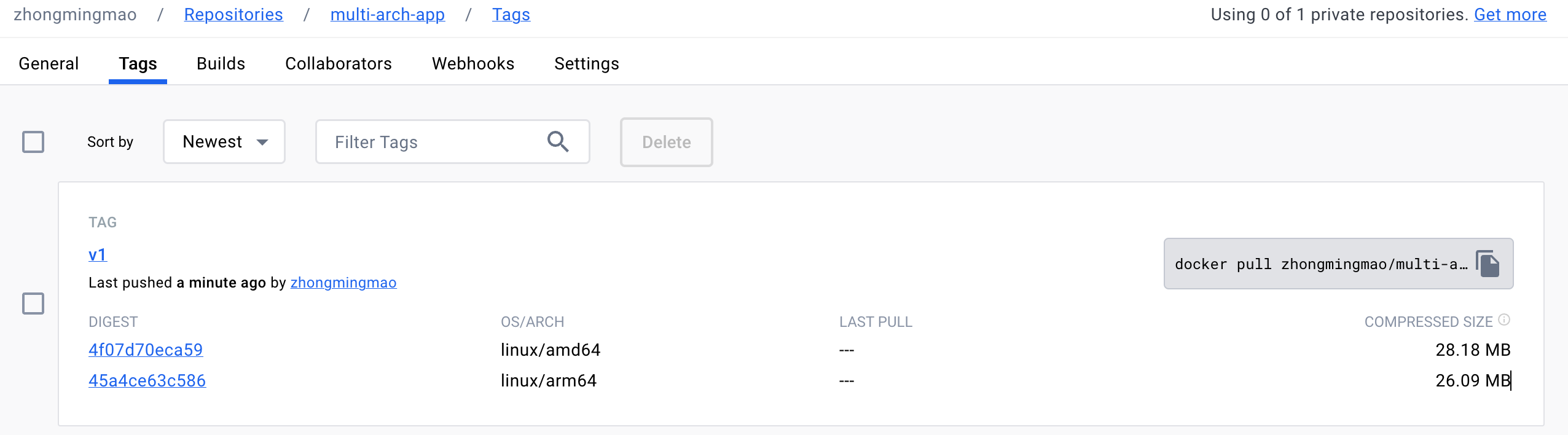

Multi-Architecture

crane

View an image's manifest

$ crane manifest alpine:latest | jq

View the manifest for one specific architecture

$ crane manifest alpine:latest@sha256:6457d53fb065d6f250e1504b9bc42d5b6c65941d57532c072d929dd0628977d0 | jq
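For a multi-architecture image, the top-level manifest is an index that lists one manifest per platform, and a client picks the entry matching its own os/architecture. The sketch below shows that lookup on a hand-written index; the digests are fake placeholders, and `select_manifest` is a hypothetical helper rather than crane's or Docker's real selection code.

```python
def select_manifest(index, os_name, architecture):
    """Return the digest of the platform-specific manifest listed in an image index."""
    for entry in index.get("manifests", []):
        platform = entry.get("platform", {})
        if platform.get("os") == os_name and platform.get("architecture") == architecture:
            return entry["digest"]
    raise LookupError(f"no manifest for {os_name}/{architecture}")


# Hand-written example index; the digests are placeholders, not real alpine digests.
example_index = {
    "schemaVersion": 2,
    "manifests": [
        {"digest": "sha256:" + "a" * 64, "platform": {"os": "linux", "architecture": "amd64"}},
        {"digest": "sha256:" + "b" * 64, "platform": {"os": "linux", "architecture": "arm64"}},
    ],
}

print(select_manifest(example_index, "linux", "arm64"))
```

The returned digest is what gets appended after `@` to address a single architecture's manifest.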

Simulate the image pull process (Manifest -> Image -> Layer)

$ crane export -v alpine:latest - | tar xv

$ ls

Copy an image (all architectures)

$ crane cp alpine:latest index.docker.io/zhongmingmao/alpine:latest

$ crane manifest zhongmingmao/alpine:latest | jq

List all tags of an image

$ crane ls zhongmingmao/alpine

BuildKit

Based on QEMU emulation

$ builder=builderx

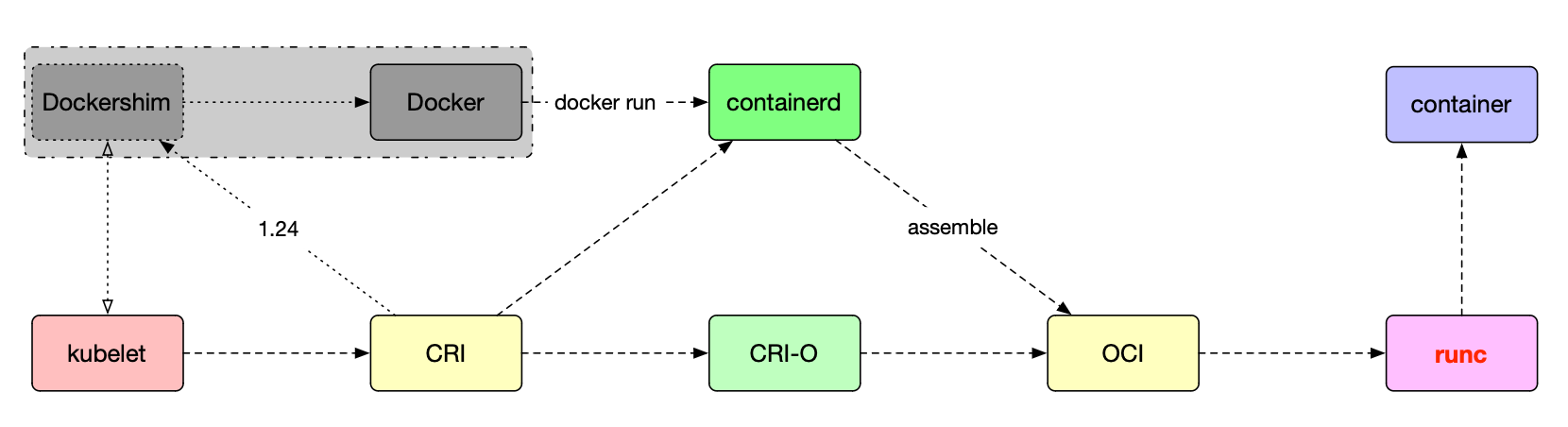

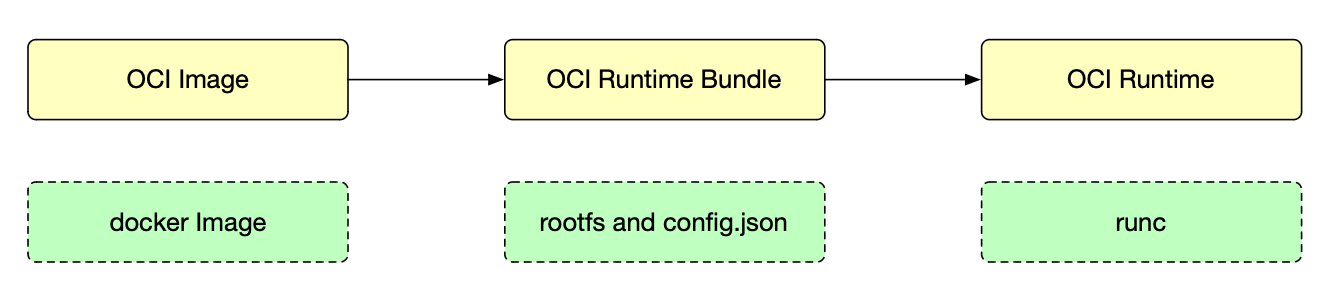

OCI

OCI: Open Container Initiative

runc

runc: Open Container Initiative runtime

runc is a command line client for running applications packaged according to the Open Container Initiative (OCI) format and is a compliant implementation of the Open Container Initiative specification.

Containers are configured using bundles. A bundle for a container is a directory that includes a specification file named "config.json" and a root filesystem. The root filesystem contains the contents of the container.

rootfs

$ mkdir /tmp/rootfs

$ arch

config.json

$ cd /tmp/

{

run

Start the container

$ runc run runc-app

List containers

$ runc list

Manifest

| Type | Item |
|---|---|
| Workload | Deployment |
| | StatefulSet |
| | DaemonSet |
| | Job |
| | CronJob |
| Service | ClusterIP |
| | NodePort |
| | LoadBalancer |
| | Headless - StatefulSet |
| | Ingress |
| Config | ConfigMap |
| | Secret |
| | HPA |
| Storage | StorageClass |
| | PV / PVC |

kubectl create

Use the kubectl create command to generate YAML templates

$ k create deployment nginx --image=nginx -oyaml --dry-run=client

$ k create service clusterip my-cs --tcp=5678:8080 -oyaml --dry-run=client

$ k create configmap my-cm --from-literal=name=zhongmingmao --from-literal=city=gz -oyaml --dry-run=client

$ k create configmap --help

Merging Files

apiVersion: v1

The Norway Problem

apiVersion: v1

y|Y|yes|Yes|YES|n|N|no|No|NO

When in doubt, wrap the value in double quotes
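The Norway problem gets its name from the country code NO being read as a boolean. A small guard can flag scalars that a YAML 1.1 style parser may interpret as booleans and quote them. The word list below follows the YAML 1.1 boolean type (the list above plus true/false/on/off variants); real parsers differ in how many of these they honor, and `safe_scalar` is a hypothetical helper, not part of any YAML library.

```python
# Plain scalars that YAML 1.1 style parsers may read as booleans.
YAML11_BOOL_WORDS = set(
    "y Y yes Yes YES n N no No NO "
    "true True TRUE false False FALSE "
    "on On ON off Off OFF".split()
)


def looks_like_bool(scalar):
    """True when an unquoted scalar risks being parsed as a boolean."""
    return scalar in YAML11_BOOL_WORDS


def safe_scalar(value):
    """Wrap risky values in double quotes so they stay strings."""
    return f'"{value}"' if looks_like_bool(value) else value


for country in ["CN", "NO", "SE"]:
    print(f"country: {safe_scalar(country)}")
```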

保留关键字

保留关键字不能作为

Key

1 | apiVersion: v1 |

数字

1 | apiVersion: v1 |

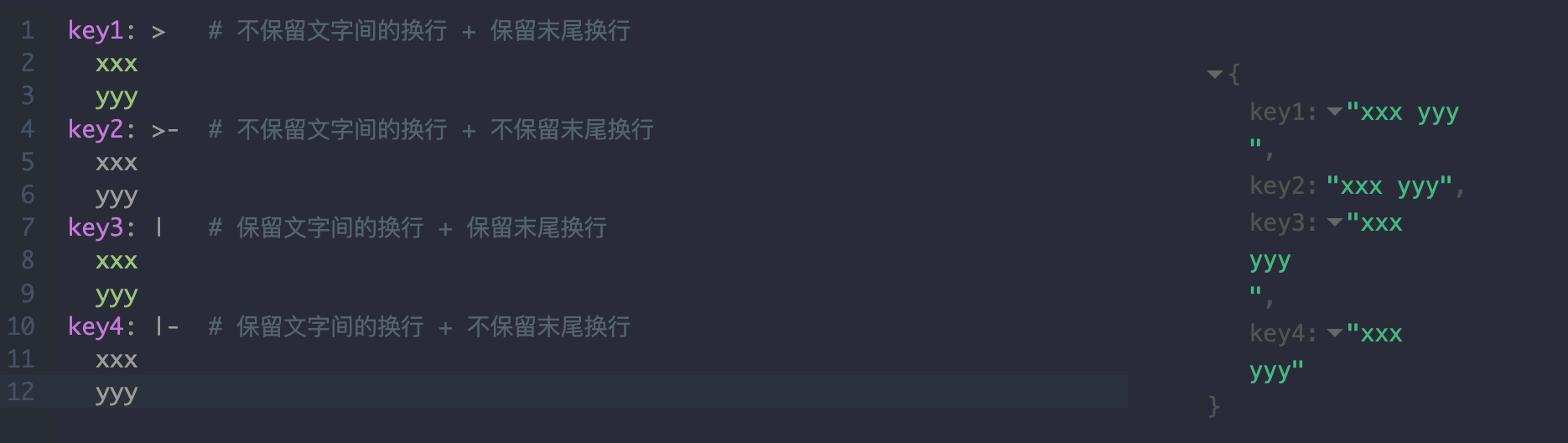

多行内容

1 | key1: > # 不保留文字间的换行 + 保留末尾换行 |

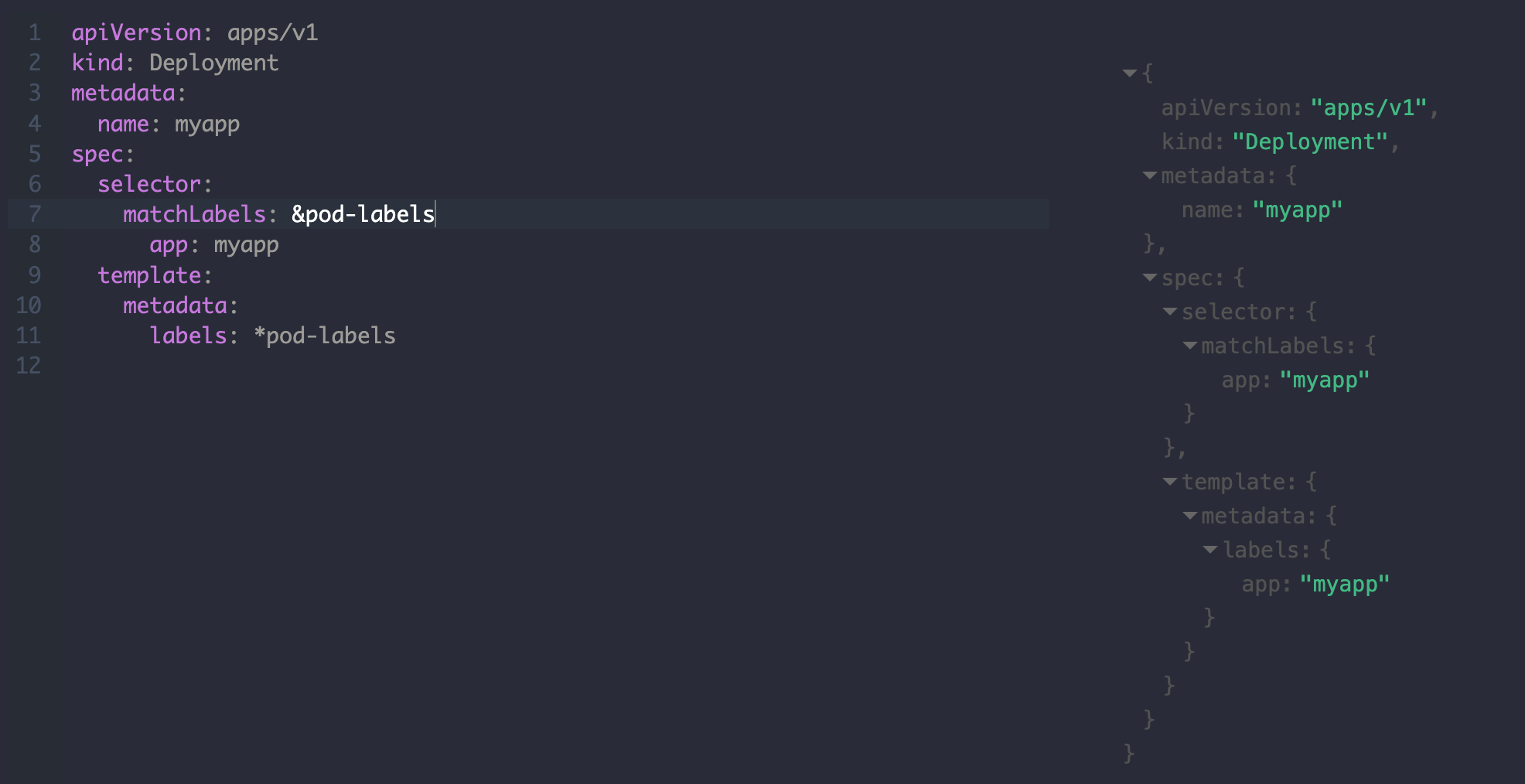

引用

比较少用

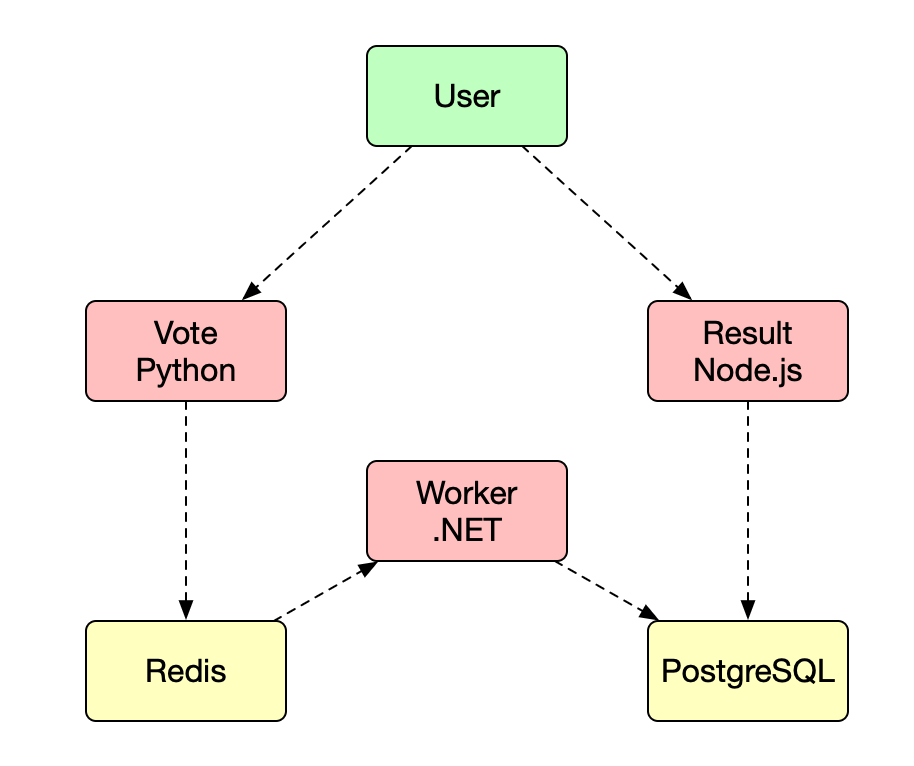

Microservices

Architecture

Manifest

Directory layout

$ tree

Deployment

$ k --kubeconfig ~/.kube/devops-camp apply -f k8s-specifications

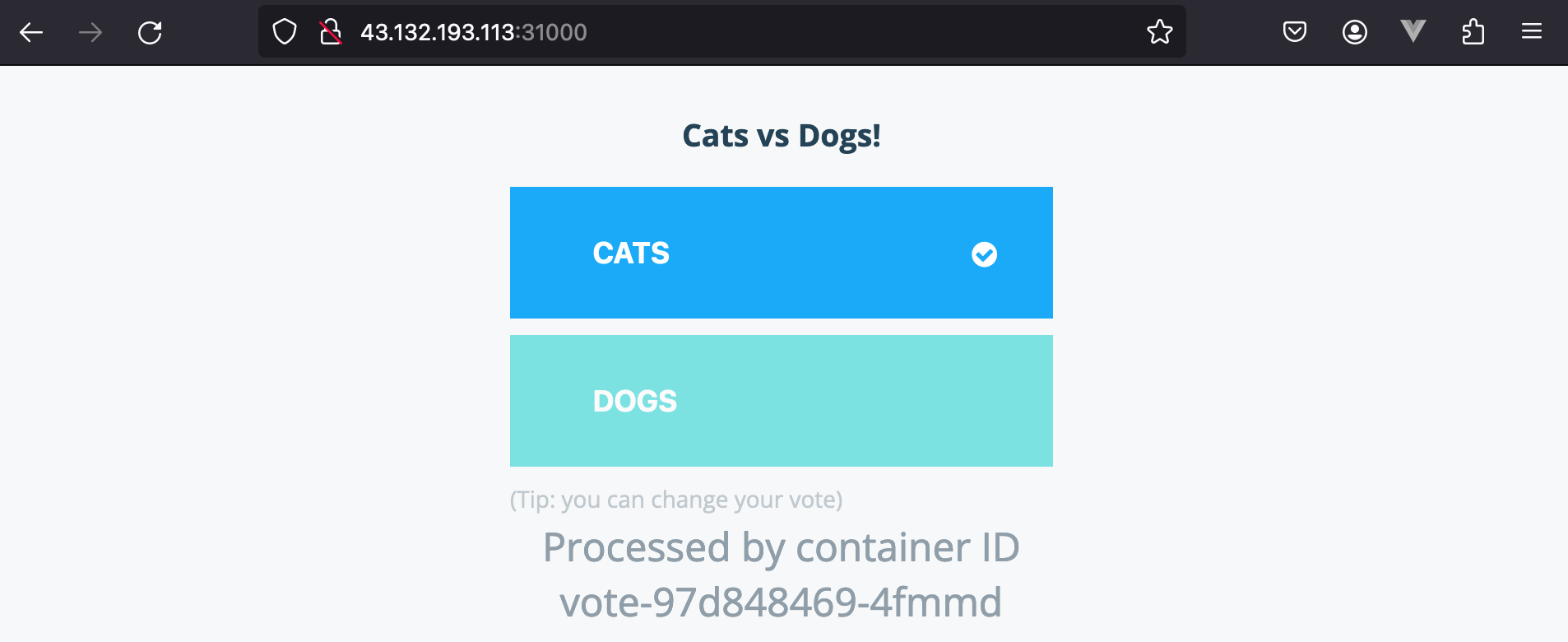

Voting

Implementation

Vote


Worker

1 | while (true) |

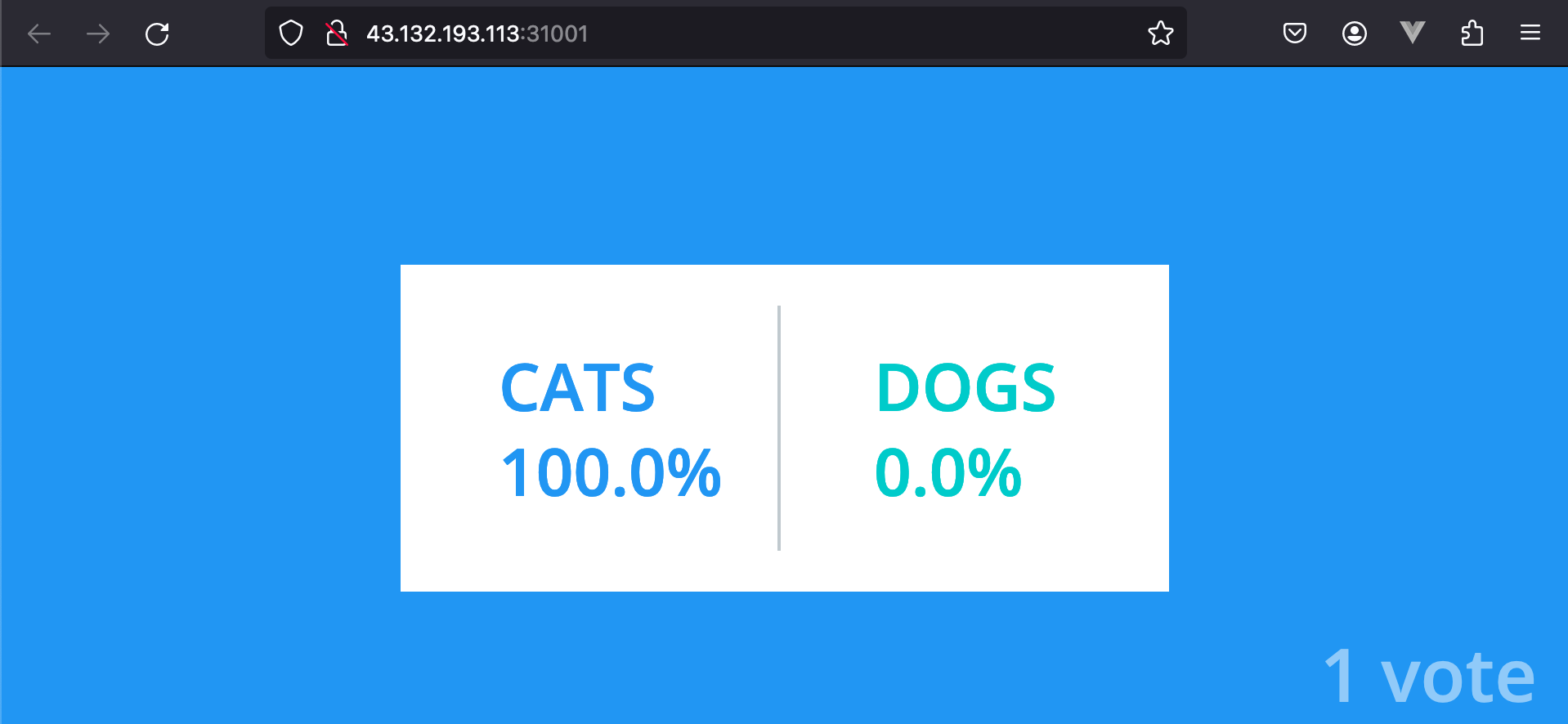

Result

1 | async.retry( |

Dockerfile

对于 node.js,使用

tini作为 1 号进程,否则会产生很多僵尸进程

1 | FROM node:18-slim |

服务依赖

- 通过

initContainers使用k8s-wait-for来控制 Pod 的启动顺序- 基于

指数退让,大规模应用集合的启动时间可能会很久
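Why exponential backoff stretches startup time is easy to see from the numbers: each retry waits longer than the previous one, so a dependency that becomes ready just after a failed check still costs the full next delay. The base delay, factor, and cap below are assumed values for illustration only, not the actual settings of k8s-wait-for or the kubelet.

```python
def backoff_delays(attempts, base=1.0, factor=2.0, cap=60.0):
    """Delay in seconds before each retry, growing geometrically up to a cap."""
    return [min(cap, base * factor ** i) for i in range(attempts)]


delays = backoff_delays(8)
print(delays)       # per-retry waits
print(sum(delays))  # total time spent waiting after 8 failed checks
```

With a chain of services that each wait on the previous one, these totals add up along the chain, which is the slow-start effect noted above.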

kind: StatefulSet

Data Initialization

- The application code performs the initialization
- Initialization runs through a K8S Job

Helm

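Dynamic Manifests

- Manages K8S objects
- Packages the workloads, configuration objects, and so on of multiple microservices into a single application
- Hides complexity from end users
- Parameterized and templated, with support for multiple environments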

Helm Chart

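Core Concepts

| Concepts | Desc |
|---|---|
| Chart | A K8S application package containing the application's K8S objects; used to create instances |
| Release | A Helm instance installed with default or custom parameters (a running instance) |
| Repository | A repository for storing and distributing Helm Charts (Git, OCI) |

Common Commands

| Command | Desc |
|---|---|
| install | Install a Helm Chart |
| uninstall | Uninstall a Helm Chart; PVCs and PVs are not deleted |
| get / status / list | Get Helm Release information (stored in a K8S Secret) |
| repo add / list / remove / index | Repository-related commands |
| search | Search for Helm Charts in a repository |
| create / package | Create and package a Helm Chart |
| pull | Pull a Helm Chart |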

Scratch

Create a Helm Chart

templates holds the manifest templates, charts holds the dependent subcharts, and helm dependency build pulls charts from the remote repository

$ h create demo

Chart.yaml

apiVersion: v2

| Key | Desc |
|---|---|
| apiVersion | API version, v2 by default |
| name | Helm Chart name |
| type | application / library |
| version | Helm Chart version |
| appVersion | Application version |
| dependencies | Other subcharts this chart depends on |

values.yaml

# Default values for demo.

templates/deployment.yaml

image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
imagePullPolicy: {{ .Values.image.pullPolicy }}

apiVersion: apps/v1
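The `default` pipeline in the image line falls back to the chart's appVersion whenever image.tag is empty. The sketch below mimics that fallback with plain dictionaries; it is not Helm's template engine, `image_reference` is a hypothetical helper, and the repository, tag, and appVersion values are illustrative.

```python
def image_reference(values, chart):
    """Mimic '{{ .Values.image.tag | default .Chart.AppVersion }}' for the image line."""
    image = values.get("image", {})
    # An empty or missing tag counts as "not set", so the chart's appVersion is used.
    tag = image.get("tag") or chart["appVersion"]
    return f'{image["repository"]}:{tag}'


chart = {"appVersion": "1.16.0"}  # illustrative Chart.yaml value

print(image_reference({"image": {"repository": "nginx", "tag": ""}}, chart))
print(image_reference({"image": {"repository": "nginx", "tag": "1.25"}}, chart))
```

This is why bumping appVersion in Chart.yaml is enough to roll the image forward when values.yaml leaves the tag empty.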

Local rendering: Render chart templates locally and display the output.

$ h template .

Install (roughly equivalent)

h install .

h template . | k apply -f -

Debugging: inspect

$ h inspect values oci://registry-1.docker.io/bitnamicharts/redis

Dependency

Chart.yaml

apiVersion: v2

values.yaml

# The parent chart overrides the subchart's default values
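Overriding a subchart's defaults works by nesting the overrides under the subchart's name in the parent's values.yaml; conceptually this is a recursive merge in which the parent wins. The sketch below shows that merge on plain dictionaries. It is a simplification of Helm's behavior (for example, null handling is ignored), and the keys under `redis` are hypothetical, not copied from a real chart.

```python
def merge_values(defaults, overrides):
    """Recursively merge two value trees; entries in overrides win."""
    merged = dict(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = value
    return merged


def subchart_values(name, subchart_defaults, parent_values):
    """Values the subchart sees: its defaults plus the parent's section named after it."""
    return merge_values(subchart_defaults, parent_values.get(name, {}))


# Hypothetical defaults and overrides for a subchart named "redis".
redis_defaults = {"architecture": "replication", "auth": {"enabled": True, "password": ""}}
parent_values = {"redis": {"architecture": "standalone", "auth": {"enabled": False}}}

print(subchart_values("redis", redis_defaults, parent_values))
```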

helm dependency build pulls the charts from the remote repository

$ helm dependency build

Advanced Techniques

Dependencies are implemented with subcharts (placed in the charts directory)

| Key | Link |

|---|---|

| Built-in Objects | https://helm.sh/docs/chart_template_guide/builtin_objects/ |

| Template Function List | https://helm.sh/docs/chart_template_guide/function_list/ |

| Helm Dependency | https://helm.sh/docs/helm/helm_dependency/ |

| Debugging Templates | https://helm.sh/docs/chart_template_guide/debugging/ |

| Subcharts and Global Values | https://helm.sh/docs/chart_template_guide/subcharts_and_globals/ |

| Chart Hooks | https://helm.sh/docs/topics/charts_hooks/ |

pre-install: can likewise be used to run database initialization

apiVersion: batch/v1

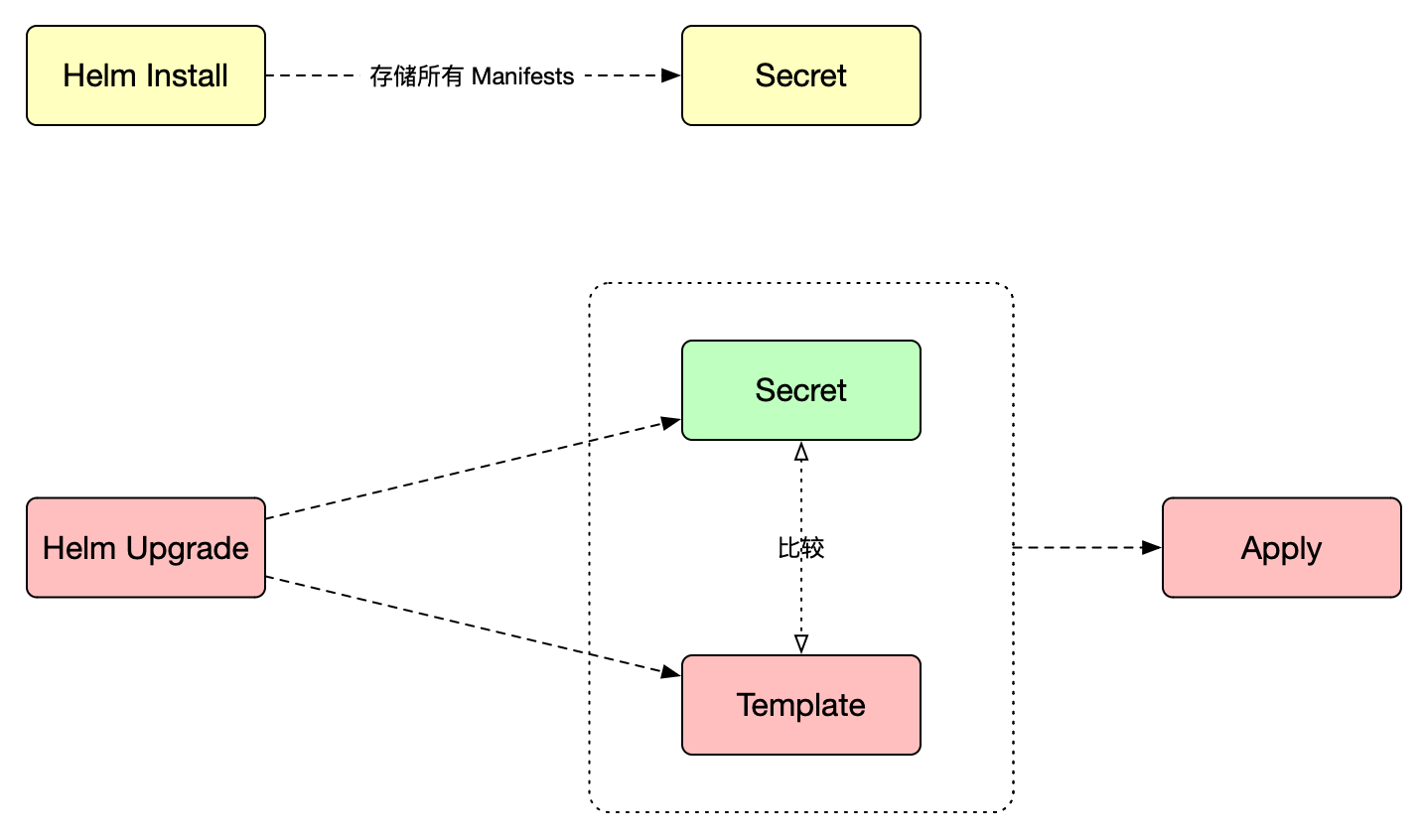

Helm Upgrade

Helm Cheat Sheet

Install or upgrade

$ helm upgrade --install <release-name> --values <values file> <chart directory>

View a Helm Chart's values.yaml settings; mainly useful for inspecting subcharts

$ helm inspect values <CHART>

List Helm Chart repositories

$ helm repo list

List releases in all namespaces

$ helm list --all-namespaces

Update dependencies (subcharts) first, then upgrade the application; related: helm dependency build

$ helm upgrade <release> <chart> --dependency-update

Roll back the application; rollback works only at the manifest level, not at the business level

$ helm rollback <release> <revision>

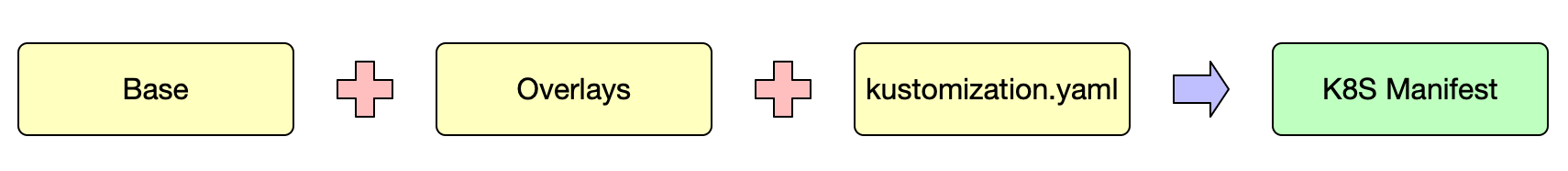

Kustomize

Overview

Enhanced YAML, similar to a declarative version of yq

Kubernetes native configuration management

- Kustomize is a CLI tool
- It can override any field of a manifest
- It suits multi-environment scenarios
- It is developed by the K8S team and built into kubectl

base: the base directory, holding the manifests common to all environments

$ tree .

apiVersion: kustomize.config.k8s.io/v1beta1

Common kustomization.yaml settings

| Config | Desc |
|---|---|
| resources | Manifest resources: files, directories, or URLs |
| secretGenerator | Generates Secret objects |
| configMapGenerator | Generates ConfigMap objects |
| images | Overrides the image tag |
| helmCharts | Declares the Helm charts to depend on |
| patchesStrategicMerge | Override operation (any field); to be removed in v1, migrating to patches is recommended |

Render (similar to helm template <dir>)

$ kubectl kustomize ./overlays/dev

Deploy

$ kubectl kustomize ./overlays/dev | kubectl apply -f -
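What the `images` setting does during rendering can be sketched as a rewrite of every matching image reference, which is how an overlay such as dev pins a different tag without touching base. This is a simplified model assuming rules with `name`, `newName`, and `newTag` keys; digests are ignored, and both helper names are hypothetical.

```python
def split_image(image):
    """Split "name:tag" into (name, tag); a registry port is not mistaken for a tag."""
    name, sep, tag = image.rpartition(":")
    if not sep or "/" in tag:
        return image, ""
    return name, tag


def apply_image_rules(image, rules):
    """Rewrite an image reference using the first rule whose name matches."""
    name, tag = split_image(image)
    for rule in rules:
        if rule["name"] == name:
            name = rule.get("newName", name)
            tag = rule.get("newTag", tag)
            break
    return f"{name}:{tag}" if tag else name


# Illustrative overlay rule: pin nginx to a specific tag.
dev_rules = [{"name": "nginx", "newTag": "1.25.4"}]

for image in ["nginx:latest", "redis:7", "localhost:5000/app"]:
    print(image, "->", apply_image_rules(image, dev_rules))
```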

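Referencing a Helm Chart

$ tree -L 5

kustomization.yaml

apiVersion: kustomize.config.k8s.io/v1beta1

Compared with Helm Charts

- Can override any manifest field and value
- Low learning cost
- Across multiple environments, the Base and Overlays pattern makes manifest reuse straightforward
- Can generate ConfigMap and Secret objects from ENV, which avoids leaking credentials
- Helm hides application details and is friendly to end users; Kustomize exposes the full K8S API and is friendly to developers

Combining with Helm Charts

- Helm Charts mainly provide templating and parameterization, while Kustomize can override any field
- Combination: integrate Kustomize in Helm Hooks

Drawbacks

- Distribution is less convenient than with Helm Charts
- The ecosystem is weaker than Helm's
- Strong dependence on the directory structure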