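Daemon Pod characteristics

Runs on every Node in the Kubernetes cluster

Each Node has exactly one Daemon Pod

When a new Node joins the Kubernetes cluster, a Daemon Pod is automatically created on it

When an old Node is removed, the Daemon Pod on it is reclaimed as well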

Use cases

Agent component of a network plugin

Agent component of a storage plugin

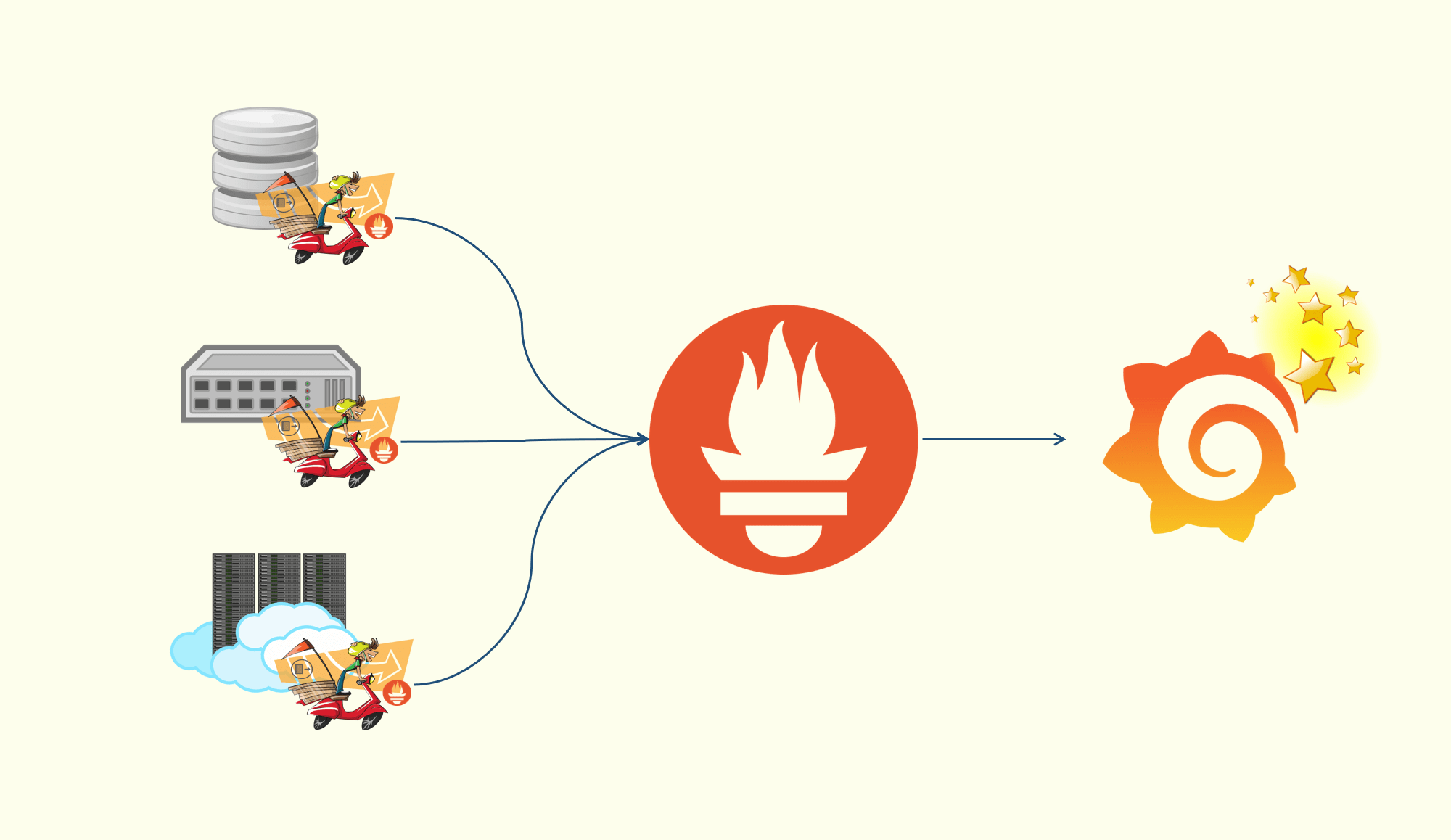

Monitoring components and logging components

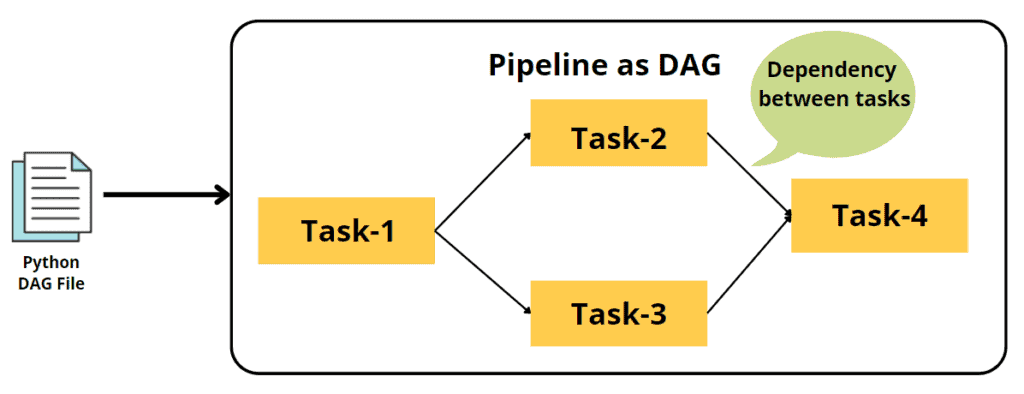

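How it works

Control loop: iterate over all Nodes and, depending on whether each Node already runs a managed Pod, decide whether a Pod needs to be created or deleted

When creating each Pod, the DaemonSet does two extra things

It automatically adds a nodeAffinity to the Pod, so that the Pod only starts on the specified Node

It automatically adds a Toleration to the Pod, so that the Pod ignores the unschedulable taint on the Node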

API Object

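This DaemonSet manages Pods that run the fluentd-elasticsearch image

fluentd-elasticsearch: uses fluentd to forward the logs of Docker containers to ElasticSearch

A DaemonSet is very similar to a Deployment, except that a DaemonSet has no replicas field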

fluentd-elasticsearch.yaml

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      containers:
        - name: fluentd-elasticsearch
          image: 'k8s.gcr.io/fluentd-elasticsearch:1.20'
          resources:
            limits:
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 200Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
```

Node : Daemon Pod = 1 : 1

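The DaemonSet controller first fetches the list of all Nodes from etcd (through the API server)

It then iterates over all Nodes and checks whether each Node is running a Pod that carries the name=fluentd-elasticsearch label (spec.selector)

Possible results

No such Pod exists: one must be created on this Node (how it is bound to that Node is covered under Affinity)

Such Pods exist, but there is more than one: delete the extra Pods on the Node by calling the Kubernetes API

Exactly one such Pod exists: the Node is in the desired state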
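The three outcomes above can be sketched as one pass of a reconcile loop. This is a minimal illustration, not the controller's actual code: `plan_actions` and the dictionary shape used for Pods are hypothetical names chosen for the example.

```python
from collections import defaultdict


def plan_actions(node_names, pods, selector):
    """Return {node: (action, pod_names)} for one pass over all Nodes.

    `pods` is a list of dicts with "name", "node" and "labels" keys,
    a simplified (hypothetical) stand-in for real Pod objects.
    """
    # Group the Pods that match the selector by the Node they run on.
    matching = defaultdict(list)
    for pod in pods:
        labels = pod["labels"]
        if all(labels.get(k) == v for k, v in selector.items()):
            matching[pod["node"]].append(pod["name"])

    plan = {}
    for node in node_names:
        found = sorted(matching[node])
        if not found:
            plan[node] = ("create", [])          # no Daemon Pod yet
        elif len(found) > 1:
            plan[node] = ("delete", found[1:])   # keep one, delete the rest
        else:
            plan[node] = ("ok", found)           # exactly one: nothing to do
    return plan


selector = {"name": "fluentd-elasticsearch"}
pods = [
    {"name": "fluentd-a", "node": "node-1", "labels": {"name": "fluentd-elasticsearch"}},
    {"name": "fluentd-b", "node": "node-2", "labels": {"name": "fluentd-elasticsearch"}},
    {"name": "fluentd-c", "node": "node-2", "labels": {"name": "fluentd-elasticsearch"}},
    {"name": "other", "node": "node-3", "labels": {"name": "something-else"}},
]
plan = plan_actions(["node-1", "node-2", "node-3"], pods, selector)
for node, (action, names) in plan.items():
    print(node, action, names)
```

Which of the surplus Pods gets deleted is arbitrary here (the sketch simply keeps the first name in sorted order).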

Affinity

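spec.affinity: a scheduling-related field

nodeAffinity

requiredDuringSchedulingIgnoredDuringExecution: this nodeAffinity must be satisfied every time the Pod is scheduled

The Pod is only allowed to run on the Node whose metadata.name is node-geektime

When the DaemonSet controller creates a Pod, it automatically adds a nodeAffinity definition to the Pod object

The Node name to bind to is the Node currently being visited in the loop

The DaemonSet does not need to modify the Pod template in the YAML file submitted by the user

Instead, before sending the request to Kubernetes, it directly modifies the Pod object generated from the template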

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: with-node-affinity
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          # metadata.name is a Node field, not a label, so it is matched with matchFields
          - matchFields:
              - key: metadata.name
                operator: In
                values:
                  - node-geektime
```
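The idea of patching the generated Pod object rather than the user's template can be sketched as follows. This is an illustration under assumptions: `pod_for_node` is a hypothetical helper, Pods are plain dictionaries, and the sketch matches the Node name with `matchFields`, the selector Kubernetes uses for Node fields such as `metadata.name`.

```python
import copy


def pod_for_node(template, node_name):
    """Build a Pod dict from the template, pinned to `node_name`."""
    # Work on a deep copy so the user's template is never modified.
    pod = copy.deepcopy(template)
    spec = pod.setdefault("spec", {})
    # Simplification: any affinity already present in the template is replaced.
    spec["affinity"] = {
        "nodeAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {
                        "matchFields": [
                            {
                                "key": "metadata.name",
                                "operator": "In",
                                "values": [node_name],
                            }
                        ]
                    }
                ]
            }
        }
    }
    return pod


template = {
    "metadata": {"labels": {"name": "fluentd-elasticsearch"}},
    "spec": {"containers": [{"name": "fluentd-elasticsearch"}]},
}
pod = pod_for_node(template, "node-geektime")
print("template has affinity:", "affinity" in template["spec"])
print("pod has affinity:", "affinity" in pod["spec"])
```

Calling `pod_for_node` once per Node in the loop yields one Pod object per Node, each pinned to its own Node, while the template stays as the user submitted it.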

Toleration

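The DaemonSet also automatically adds another scheduling-related field to each Daemon Pod: tolerations

Meaning: the Daemon Pod tolerates (Toleration) the taints of certain Nodes (a Taint is a special mark on a Node that works somewhat like a label)

It tolerates every Node that carries the unschedulable taint; the effect of the toleration is that scheduling is allowed

Normally, no Pod is scheduled onto a Node that carries the unschedulable taint (effect: NoSchedule)

The DaemonSet automatically adds this special Toleration to the Pods it manages, so Daemon Pods can ignore this restriction

This ensures that a Daemon Pod is scheduled onto every Node (if a Node is faulty, the Daemon Pod fails to start and the DaemonSet retries)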

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: with-toleration
spec:
  tolerations:
    - key: node.kubernetes.io/unschedulable
      operator: Exists
      effect: NoSchedule
```
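How a Toleration is compared against a Taint can be modeled in a few lines. The sketch below is a simplified model of the matching rules (key, operator, effect), with taints and tolerations as plain dictionaries; `tolerates` is a hypothetical name, not a Kubernetes API.

```python
def tolerates(toleration, taint):
    """Return True if `toleration` matches `taint` (simplified model)."""
    # An empty effect on the toleration matches every effect.
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False
    # An empty key on the toleration matches every key.
    key = toleration.get("key", "")
    if key and key != taint["key"]:
        return False
    # Exists ignores the value; Equal (the default) compares values.
    operator = toleration.get("operator", "Equal")
    if operator == "Exists":
        return True
    if operator == "Equal":
        return toleration.get("value", "") == taint.get("value", "")
    return False


unschedulable = {"key": "node.kubernetes.io/unschedulable", "value": "", "effect": "NoSchedule"}
daemon_toleration = {
    "key": "node.kubernetes.io/unschedulable",
    "operator": "Exists",
    "effect": "NoSchedule",
}
unrelated_toleration = {"key": "example.com/other", "operator": "Exists", "effect": "NoSchedule"}

print(tolerates(daemon_toleration, unschedulable))
print(tolerates(unrelated_toleration, unschedulable))
```

In this model a Pod may be scheduled onto a Node only if every NoSchedule taint on that Node is matched by at least one of its tolerations.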

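When the network plugin has not yet been installed on a Node, the Node is automatically given the node.kubernetes.io/network-unavailable taint

If the DaemonSet manages the Agent Pod of a network plugin, the corresponding Pod template needs to tolerate the node.kubernetes.io/network-unavailable taint

With this Toleration, the scheduler ignores that Taint on the current Node when scheduling the Daemon Pod, so the Pod can be scheduled onto the Node successfully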

```yaml
...
template:
  metadata:
    labels:
      name: network-plugin-agent
  spec:
    tolerations:
      - key: node.kubernetes.io/network-unavailable
        operator: Exists
        effect: NoSchedule
```

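More Tolerations can be added in the DaemonSet's Pod template

By default, a Kubernetes cluster does not allow users to deploy Pods on the Master node; the Master node carries the node-role.kubernetes.io/master taint by default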

```yaml
tolerations:
  - key: node-role.kubernetes.io/master
    effect: NoSchedule
```
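A Daemon Pod therefore ends up with the tolerations from the template plus the ones added automatically. The sketch below shows that merge under a deliberate simplification: `AUTO_TOLERATIONS` lists only the unschedulable toleration discussed in the text (a real controller may add others), and `effective_tolerations` is a hypothetical helper.

```python
# Assumption: only the automatically added toleration described in the text.
AUTO_TOLERATIONS = [
    {"key": "node.kubernetes.io/unschedulable", "operator": "Exists", "effect": "NoSchedule"},
]


def effective_tolerations(template_tolerations, auto=AUTO_TOLERATIONS):
    """Merge template tolerations with the automatic ones, without duplicates."""
    merged = []
    for toleration in list(template_tolerations) + list(auto):
        if toleration not in merged:
            merged.append(dict(toleration))  # copy, so inputs stay untouched
    return merged


template_tolerations = [
    {"key": "node-role.kubernetes.io/master", "effect": "NoSchedule"},
]
merged = effective_tolerations(template_tolerations)
for toleration in merged:
    print(toleration["key"])
```

The template's own entries come first, and the template list itself is left unchanged.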

Practice

Create the DaemonSet

A DaemonSet usually sets the resources field to limit CPU and memory usage, so that its Pods do not consume too many host resources

```
# kubectl apply -f fluentd-elasticsearch.yaml
daemonset.apps/fluentd-elasticsearch created
```
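To make the resources values in fluentd-elasticsearch.yaml concrete: `100m` CPU means 100 millicores, and `200Mi` means 200 mebibytes. The helpers below are a small sketch that converts just these two notations; `cpu_cores` and `memory_bytes` are hypothetical names, and only a few suffixes are handled.

```python
# Binary suffixes handled by this sketch (not the full Kubernetes quantity syntax).
BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def cpu_cores(quantity):
    """Convert a CPU quantity such as "100m" or "2" to a number of cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000  # millicores to cores
    return float(quantity)


def memory_bytes(quantity):
    """Convert a memory quantity such as "200Mi" to bytes."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain number of bytes


print(cpu_cores("100m"))       # 0.1
print(memory_bytes("200Mi"))   # 209715200
```

So each fluentd-elasticsearch Pod requests a tenth of a core and is limited to roughly 210 MB of memory on every Node it runs on.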

View the Daemon Pods

With N Nodes, there are N fluentd-elasticsearch Pods running

```
# kubectl get pod -n kube-system -l name=fluentd-elasticsearch
NAME                          READY   STATUS    RESTARTS   AGE
fluentd-elasticsearch-c9rtj   1/1     Running   0          4m44s
fluentd-elasticsearch-nlnjh   1/1     Running   0          4m44s
fluentd-elasticsearch-ph2ld   1/1     Running   0          4m44s
```

View the DaemonSet

Short names: DaemonSet (ds), Deployment (deploy)

Like a Deployment, a DaemonSet has status fields such as DESIRED and CURRENT, and it also supports version management

```
# kubectl get ds -n kube-system fluentd-elasticsearch
NAME                    DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
fluentd-elasticsearch   3         3         3       3            3           <none>          5m2s
```

```
# kubectl rollout history daemonset fluentd-elasticsearch -n kube-system
daemonset.apps/fluentd-elasticsearch
REVISION  CHANGE-CAUSE
1         <none>
```

Upgrade the version

--record: automatically records the command in the DaemonSet's rollout history

```
# kubectl set image ds/fluentd-elasticsearch fluentd-elasticsearch=k8s.gcr.io/fluentd-elasticsearch:v2.2.0 --record -n=kube-system
daemonset.apps/fluentd-elasticsearch image updated
```

Rolling update process

```
# kubectl rollout status ds/fluentd-elasticsearch -n kube-system
Waiting for daemon set "fluentd-elasticsearch" rollout to finish: 1 out of 3 new pods have been updated...
Waiting for daemon set "fluentd-elasticsearch" rollout to finish: 2 out of 3 new pods have been updated...
Waiting for daemon set "fluentd-elasticsearch" rollout to finish: 2 out of 3 new pods have been updated...
Waiting for daemon set "fluentd-elasticsearch" rollout to finish: 2 out of 3 new pods have been updated...
Waiting for daemon set "fluentd-elasticsearch" rollout to finish: 2 of 3 updated pods are available...
daemon set "fluentd-elasticsearch" successfully rolled out
```
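The one-Node-at-a-time progression in this output can be mimicked with a toy simulation. It assumes the RollingUpdate strategy with maxUnavailable set to 1 (the default for apps/v1 DaemonSets) and ignores readiness checks; `rolling_update` and the Node names are made up for the example.

```python
def rolling_update(nodes, old_image, new_image, max_unavailable=1):
    """Simulate replacing the Daemon Pod on each Node, a batch at a time."""
    images = {node: old_image for node in nodes}
    steps = []
    pending = list(nodes)
    while pending:
        # Take at most `max_unavailable` Nodes offline in each round.
        batch, pending = pending[:max_unavailable], pending[max_unavailable:]
        for node in batch:
            # Old Pod deleted, new Pod created on the same Node.
            images[node] = new_image
        updated = sum(1 for image in images.values() if image == new_image)
        steps.append(f"{updated} out of {len(nodes)} new pods have been updated")
    return images, steps


images, steps = rolling_update(
    ["node-1", "node-2", "node-3"],
    "k8s.gcr.io/fluentd-elasticsearch:1.20",
    "k8s.gcr.io/fluentd-elasticsearch:v2.2.0",
)
for step in steps:
    print(step)
```

A larger `max_unavailable` finishes in fewer rounds but leaves more Nodes without a running Daemon Pod at the same time.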

rollout history

```
# kubectl rollout history daemonset fluentd-elasticsearch -n kube-system
daemonset.apps/fluentd-elasticsearch
REVISION  CHANGE-CAUSE
1         <none>
2         kubectl set image ds/fluentd-elasticsearch fluentd-elasticsearch=k8s.gcr.io/fluentd-elasticsearch:v2.2.0 --record=true --namespace=kube-system
```

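Version rollback

Deployment: each version corresponds to one ReplicaSet object

The DaemonSet controller operates on Pods directly, so its versions are kept in ControllerRevision objects (a general-purpose version management object)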

```
# kubectl get controllerrevision -n kube-system -l name=fluentd-elasticsearch
NAME                               CONTROLLER                             REVISION   AGE
fluentd-elasticsearch-7464ccb7c    daemonset.apps/fluentd-elasticsearch   2          7m34s
fluentd-elasticsearch-76fd8fd678   daemonset.apps/fluentd-elasticsearch   1          16m
```

Data: stores the DaemonSet's Pod template (spec.template) for that version

Annotations: stores the command that was used to create this ControllerRevision object

```
# kubectl describe controllerrevision fluentd-elasticsearch-7464ccb7c -n kube-system
Name:         fluentd-elasticsearch-7464ccb7c
Namespace:    kube-system
Labels:       controller-revision-hash=7464ccb7c
              name=fluentd-elasticsearch
Annotations:  deprecated.daemonset.template.generation: 2
              kubernetes.io/change-cause: kubectl set image ds/fluentd-elasticsearch fluentd-elasticsearch=k8s.gcr.io/fluentd-elasticsearch:v2.2.0 --record=true --namespace=kube-sy...
API Version:  apps/v1
Data:
  Spec:
    Template:
      $patch:  replace
      Metadata:
        Creation Timestamp:  <nil>
        Labels:
          Name:  fluentd-elasticsearch
      Spec:
        Containers:
          Image:              k8s.gcr.io/fluentd-elasticsearch:v2.2.0
          Image Pull Policy:  IfNotPresent
          Name:               fluentd-elasticsearch
          Resources:
            Limits:
              Memory:  200Mi
            Requests:
              Cpu:     100m
              Memory:  200Mi
          Termination Message Path:    /dev/termination-log
          Termination Message Policy:  File
          Volume Mounts:
            Mount Path:  /var/log
            Name:        varlog
            Mount Path:  /var/lib/docker/containers
            Name:        varlibdockercontainers
            Read Only:   true
        Dns Policy:      ClusterFirst
        Restart Policy:  Always
        Scheduler Name:  default-scheduler
        Security Context:
        Termination Grace Period Seconds:  30
        Tolerations:
          Effect:  NoSchedule
          Key:     node-role.kubernetes.io/master
        Volumes:
          Host Path:
            Path:  /var/log
            Type:
          Name:    varlog
          Host Path:
            Path:  /var/lib/docker/containers
            Type:
          Name:    varlibdockercontainers
Kind:              ControllerRevision
Metadata:
  Creation Timestamp:  2021-06-18T08:56:08Z
  Managed Fields:
    API Version:  apps/v1
    Fields Type:  FieldsV1
    fieldsV1:
      f:data:
      f:metadata:
        f:annotations:
          .:
          f:deprecated.daemonset.template.generation:
          f:kubectl.kubernetes.io/last-applied-configuration:
          f:kubernetes.io/change-cause:
        f:labels:
          .:
          f:controller-revision-hash:
          f:name:
        f:ownerReferences:
          .:
          k:{"uid":"b0769f40-8fc5-4e23-97be-f235f6981320"}:
            .:
            f:apiVersion:
            f:blockOwnerDeletion:
            f:controller:
            f:kind:
            f:name:
            f:uid:
      f:revision:
    Manager:    kube-controller-manager
    Operation:  Update
    Time:       2021-06-18T08:56:08Z
  Owner References:
    API Version:           apps/v1
    Block Owner Deletion:  true
    Controller:            true
    Kind:                  DaemonSet
    Name:                  fluentd-elasticsearch
    UID:                   b0769f40-8fc5-4e23-97be-f235f6981320
  Resource Version:        167173
  UID:                     e85e6763-79e1-45ac-9da1-81a9922c395f
Revision:                  2
Events:                    <none>
```

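Roll the DaemonSet back to Revision=1: read the Data field of the ControllerRevision object with Revision=1, which holds the Pod template of that version

This is equivalent to running kubectl apply -f with the old DaemonSet object; the rollback is recorded as Revision=3, and Revision=1 no longer appears in the history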

```
# kubectl rollout undo daemonset fluentd-elasticsearch --to-revision=1 -n kube-system
daemonset.apps/fluentd-elasticsearch rolled back
```

```
# kubectl rollout history daemonset fluentd-elasticsearch -n kube-system
daemonset.apps/fluentd-elasticsearch
REVISION  CHANGE-CAUSE
2         kubectl set image ds/fluentd-elasticsearch fluentd-elasticsearch=k8s.gcr.io/fluentd-elasticsearch:v2.2.0 --record=true --namespace=kube-system
3         <none>

# kubectl get controllerrevision -n kube-system -l name=fluentd-elasticsearch
NAME                               CONTROLLER                             REVISION   AGE
fluentd-elasticsearch-7464ccb7c    daemonset.apps/fluentd-elasticsearch   2          17m
fluentd-elasticsearch-76fd8fd678   daemonset.apps/fluentd-elasticsearch   3          26m
```
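The renumbering visible in this output (the object that used to be revision 1 now shows up as revision 3) can be modeled with a small sketch. It treats the history as a mapping from revision number to a template identifier; `rollout_undo` is a hypothetical function that mirrors the observed behavior, not the controller's implementation.

```python
def rollout_undo(history, to_revision):
    """Return (new_history, restored_template) after rolling back.

    `history` maps revision numbers to a template identifier
    (here simply the image, as a stand-in for the stored Pod template).
    """
    if to_revision not in history:
        raise KeyError(f"revision {to_revision} not found")
    new_history = dict(history)  # leave the input untouched
    # The stored template is reused and becomes the newest revision.
    template = new_history.pop(to_revision)
    new_history[max(history) + 1] = template
    return new_history, template


history = {
    1: "k8s.gcr.io/fluentd-elasticsearch:1.20",
    2: "k8s.gcr.io/fluentd-elasticsearch:v2.2.0",
}
new_history, restored = rollout_undo(history, to_revision=1)
for revision in sorted(new_history):
    print(revision, new_history[revision])
```

After the rollback the history holds revisions 2 and 3, which matches the REVISION column above.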

References

深入剖析Kubernetes